When you give an intelligent system too much freedom, it learns fast but also makes mistakes fast. When you restrict it too tightly, it loses purpose and becomes little more than a script. The real skill lies in finding the balance where AI agents can act with autonomy yet never drift away from the business rules that define how an organization operates.

This isn’t a debate about whether machines should be creative or predictable. It’s about designing intelligent systems that can handle day-to-day decisions, adapt to changing inputs, and still follow the logical structure that keeps the business running smoothly.

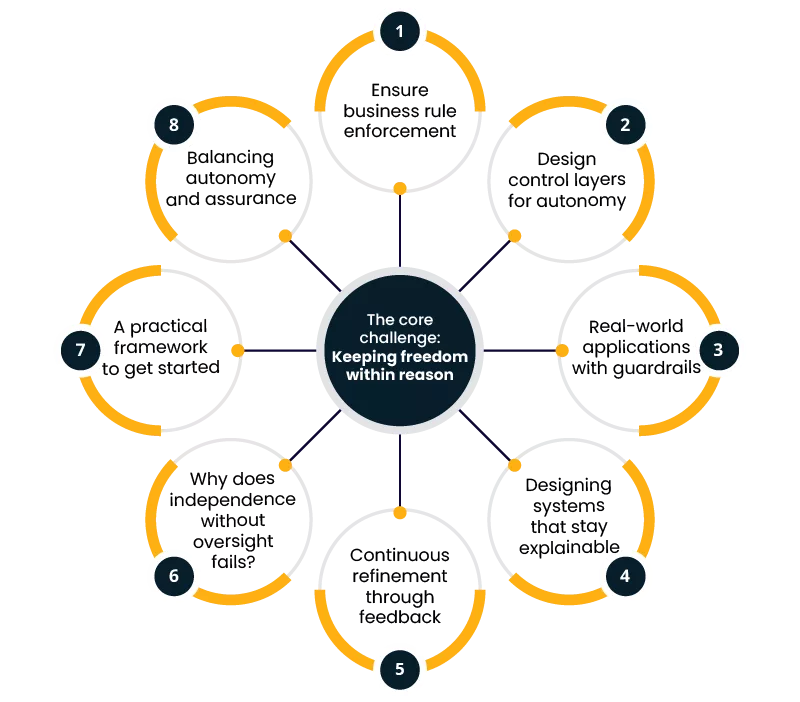

The core challenge: Keeping freedom within reason

Autonomous systems are not new. What’s new is their scale and complexity. Modern AI agents can read documents, interpret messages, make decisions, and even trigger workflows without constant human input. The problem begins when these systems make decisions that contradict defined business rules — pricing logic, compliance limits, or customer eligibility checks.

When an agent acts beyond those limits, the result is not innovation. It’s inconsistency. Inconsistent logic confuses teams, risks compliance violations, and damages customer trust. That’s why successful automation requires a strong foundation of logic enforcement, not as a constraint but as a guiding frame for safe independence.

1. Ensure business rule enforcement

Every organization has a set of written and unwritten laws that govern how it operates. These may exist in code, policy documents, or employee intuition. When introducing AI agents, the first step is converting those principles into a structured system of business rules that machines can understand and follow consistently.

Turning implicit rules into explicit logic

Most companies underestimate how many rules they already operate under. Think of a simple refund request. It might depend on product category, payment method, region, or transaction size. Humans understand these through training and context. An AI agent needs explicit logic.

To achieve reliable logic enforcement, organizations can start with three steps:

- Document the rules clearly. Each rule should state what it governs, when it applies, and what the valid outcomes are.

- Define dependencies. Some rules override others. Mapping these relationships prevents logical conflicts.

- Version everything. Business rules evolve. Tracking versions helps you understand which rule led to which outcome.

When rules are clearly defined, they can be translated into policy engines or declarative systems that AI agents query before taking any decision. This keeps outcomes consistent even as data or environments change.
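The three steps above can be sketched as a small rule table. This is a minimal illustration, not a real policy engine: the `Rule` class, the `decide` function, the rule IDs, and the refund thresholds are all hypothetical. Each rule records what it governs, when it applies, its valid outcome, a version, and a priority that settles which rule overrides another.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    rule_id: str
    version: str                      # tracked so outcomes can be traced to a rule version
    governs: str                      # what the rule governs
    priority: int                     # higher priority overrides lower priority
    applies: Callable[[dict], bool]   # when the rule applies
    outcome: str                      # the valid outcome when it applies


# Hypothetical refund rules, for illustration only.
RULES = [
    Rule("refund-default", "1.0", "refund", 0, lambda r: True, "approve"),
    Rule("refund-large", "2.1", "refund", 10, lambda r: r["amount"] > 500, "manual_review"),
    Rule("refund-digital", "1.3", "refund", 20, lambda r: r["category"] == "digital", "deny"),
]


def decide(request: dict, rules: list[Rule] = RULES) -> tuple[str, str, str]:
    """Return (outcome, rule_id, version) for the highest-priority applicable rule."""
    applicable = [r for r in rules if r.applies(request)]
    if not applicable:
        raise LookupError("no rule covers this request")
    winner = max(applicable, key=lambda r: r.priority)
    return winner.outcome, winner.rule_id, winner.version
```

Returning the rule ID and version alongside the outcome is what lets you later answer which rule led to which decision.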

Avoiding brittle rule design

Rules should protect intent, not micromanage behavior. Over-engineering logic can make systems inflexible. A good practice is to encode rules at the outcome level rather than at every micro-decision. For instance, instead of telling an agent exactly how to verify a customer’s identity, define what a “verified customer” means. Let the agent decide how to meet that condition within the permitted boundaries.

This approach respects autonomy while maintaining alignment. It balances autonomy and control by letting AI systems decide how to achieve a goal, but not what the goal is.
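As a rough sketch of an outcome-level rule, the check below defines what a "verified customer" means without prescribing how the agent gets there. The method names and the requirement of two distinct methods are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Customer:
    customer_id: str
    # Verification methods the agent has completed successfully so far.
    evidence: set[str] = field(default_factory=set)


# Hypothetical policy: which methods count, and how many are required.
PERMITTED_METHODS = {"otp", "government_id", "security_question", "bank_match"}
REQUIRED_METHODS = 2


def is_verified(customer: Customer) -> bool:
    """Outcome-level rule: enough distinct permitted methods have succeeded."""
    return len(customer.evidence & PERMITTED_METHODS) >= REQUIRED_METHODS
```

The agent is free to pick any combination of permitted methods; the rule only checks that the outcome holds.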

Policy validation and runtime checks

Once the rules are formalized, continuous validation becomes essential. The agent’s actions should pass through automated pre-checks and post-checks:

- Pre-checks confirm that the action is permitted under the rule set.

- Post-checks confirm that the outcome meets defined expectations.

This dual-layer control confirms both that the action was allowed and that its result was acceptable. Over time, these validation logs become a form of audit trail, valuable for both compliance and optimization.
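One way to picture the pre-check and post-check pair is a thin wrapper around each action. Everything here is illustrative: `run_with_checks`, the in-memory `audit_log`, and the discount and price-floor values are assumptions, not part of any specific product.

```python
from typing import Any, Callable

audit_log: list[dict] = []  # in-memory stand-in for a real audit store


def run_with_checks(
    name: str,
    params: dict,
    pre_check: Callable[[dict], bool],
    action: Callable[[dict], Any],
    post_check: Callable[[dict, Any], bool],
) -> tuple[str, Any]:
    """Run an action only if the pre-check passes, then verify its outcome."""
    if not pre_check(params):
        audit_log.append({"action": name, "stage": "pre", "passed": False})
        return "blocked", None
    result = action(params)
    passed = post_check(params, result)
    audit_log.append({"action": name, "stage": "post", "passed": passed})
    return ("ok" if passed else "flagged"), result


# Hypothetical pricing rules used only for illustration.
MAX_DISCOUNT_PCT = 20
PRICE_FLOOR = 50.0


def discount_allowed(p: dict) -> bool:
    return 0 <= p["discount_pct"] <= MAX_DISCOUNT_PCT


def apply_discount(p: dict) -> float:
    return round(p["price"] * (1 - p["discount_pct"] / 100), 2)


def above_floor(p: dict, result: float) -> bool:
    return result >= PRICE_FLOOR
```

A blocked action never runs, while a flagged one has run but produced an outcome that needs attention. Both leave an entry in the log.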

2. Design control layers for autonomy

Autonomy is not a switch that’s turned on or off. It’s a set of layers — each defining how much decision-making power an AI agent gets at a specific stage. The goal is to ensure that independence doesn’t mean isolation from oversight.

Layer 1: Intent framing

Before taking any action, an agent should be required to declare its intent. This involves summarizing the goal, the relevant data it plans to use, and the decision pathway it expects to follow.

This step introduces transparency and acts as the first layer of defense. If the intent conflicts with existing business rules, the system can intervene before any real action is taken.
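A declared intent could be as simple as a small record that gets validated before anything runs. The `Intent` fields mirror the three items described above; the allowed goals and restricted data sources are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intent:
    goal: str                        # what the agent wants to achieve
    data_sources: tuple[str, ...]    # data it plans to use
    decision_path: tuple[str, ...]   # steps it expects to follow


# Hypothetical policy values for illustration.
ALLOWED_GOALS = {"issue_refund", "update_address"}
RESTRICTED_DATA = {"payment_card_numbers"}


def validate_intent(intent: Intent) -> list[str]:
    """Return a list of conflicts; an empty list means the intent may proceed."""
    conflicts = []
    if intent.goal not in ALLOWED_GOALS:
        conflicts.append(f"goal not permitted: {intent.goal}")
    for source in intent.data_sources:
        if source in RESTRICTED_DATA:
            conflicts.append(f"restricted data source: {source}")
    return conflicts
```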

Layer 2: Planning gate

Once intent is validated, the plan itself needs to be examined. Planning gates are checkpoints where proposed decisions are compared with policy definitions. This is where logic enforcement becomes active.

The plan either proceeds as-is, gets adjusted, or is halted for manual review. These gates allow AI agents to make autonomous decisions but within verified bounds.
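The three possible results of a gate (proceed, adjust, halt) map naturally onto a small function. This is a sketch under assumed policy values: the forbidden step and the discount cap are made up for the example.

```python
from enum import Enum


class GateDecision(Enum):
    PROCEED = "proceed"
    ADJUST = "adjust"
    HALT = "halt"


# Hypothetical policy definitions.
MAX_DISCOUNT_PCT = 15
FORBIDDEN_ACTIONS = {"delete_account"}


def planning_gate(plan: list[dict]) -> tuple[GateDecision, list[dict]]:
    """Compare each planned step with policy and return the decision and the plan to run."""
    adjusted_plan = []
    changed = False
    for step in plan:
        if step["action"] in FORBIDDEN_ACTIONS:
            # Halt for manual review; the original plan is returned untouched.
            return GateDecision.HALT, plan
        if step["action"] == "offer_discount" and step["pct"] > MAX_DISCOUNT_PCT:
            step = {**step, "pct": MAX_DISCOUNT_PCT}  # clamp to the policy cap
            changed = True
        adjusted_plan.append(step)
    decision = GateDecision.ADJUST if changed else GateDecision.PROCEED
    return decision, adjusted_plan
```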

Layer 3: Execution filters

Even approved plans need live filters. These filters act during execution, monitoring each interaction or transaction. They verify identity, context, and purpose.

For example, a customer service agent could be free to issue refunds up to a specific limit but must request approval for higher amounts. These filters make the system responsive yet responsible, which is a practical way to balance autonomy and control.
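The refund example can be expressed as a small execution filter. The limit of 100.00 and the function name are assumptions chosen for illustration.

```python
from typing import Optional

AUTO_REFUND_LIMIT = 100.00  # hypothetical limit for unattended refunds


def filter_refund(amount: float, approved_by: Optional[str] = None) -> str:
    """Decide at execution time whether a refund may go through."""
    if amount <= 0:
        return "rejected"
    if amount <= AUTO_REFUND_LIMIT:
        return "executed"
    if approved_by:
        # Above the limit, but a human approver is on record.
        return "executed"
    return "needs_approval"
```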

Layer 4: Post-action review

Every decision taken by an AI system should be measurable and reversible. Post-action checks compare what the agent intended to do against what actually happened. If a deviation occurs, it’s flagged, corrected, and fed back into training data.

This continuous learning loop builds trust and prevents rule erosion over time.
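A post-action review can start as a plain comparison of the declared intent against the recorded outcome. The field names below are hypothetical; the point is only that every deviation becomes an explicit, inspectable record.

```python
def review(intended: dict, actual: dict) -> dict:
    """Return {field: (intended, actual)} for every field that deviates."""
    fields = intended.keys() | actual.keys()
    return {
        name: (intended.get(name), actual.get(name))
        for name in fields
        if intended.get(name) != actual.get(name)
    }


def flag_deviations(intended: dict, actual: dict, review_queue: list) -> bool:
    """Append deviations to a review queue; return True if anything was flagged."""
    deviations = review(intended, actual)
    if deviations:
        review_queue.append(deviations)
    return bool(deviations)
```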

Governance as a foundation

All these layers exist within a broader framework of AI governance: the policies, ethics, and auditing that define how automation aligns with human and regulatory expectations. Governance does not slow down progress; it preserves integrity.

Without it, even well-meaning automation can introduce silent failures that go unnoticed until they affect customers.

3. Real-world applications with guardrails

To make this practical, imagine three scenarios where controlled autonomy works in real operations.

Example 1: Automated procurement checks

Procurement teams often face hundreds of vendor requests each month. An AI agent can evaluate vendors, compare quotes, and flag discrepancies automatically.

The business rules here define what qualifies a vendor: for instance, a valid tax ID, pricing within threshold, and payment terms within policy. The agent works freely within those limits but never finalizes a vendor if any rule fails validation.

When governance audits happen, every decision path is explainable through logs that show the exact rules applied.
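A minimal sketch of such a vendor check, assuming made-up thresholds (a 10,000 quote ceiling and 60-day payment terms), might keep one named check per rule so the result doubles as an explanation:

```python
# Hypothetical vendor qualification rules; names and thresholds are illustrative.
VENDOR_RULES = {
    "has_tax_id": lambda v: bool(v.get("tax_id")),
    "price_within_threshold": lambda v: v["quote"] <= 10_000,
    "payment_terms_within_policy": lambda v: v["payment_terms_days"] <= 60,
}


def evaluate_vendor(vendor: dict) -> tuple[bool, dict]:
    """Return (qualified, per-rule results). A vendor qualifies only if every rule passes."""
    results = {name: check(vendor) for name, check in VENDOR_RULES.items()}
    return all(results.values()), results
```

The per-rule results are what an auditor would read: they show exactly which rules were applied and which one blocked the vendor.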

Example 2: Financial approvals in expense management

Expense automation saves time but also opens the door for misuse. A well-designed system lets agents auto-approve claims within policy-defined limits.

When a claim exceeds budget thresholds or includes unapproved vendors, the system pauses and routes it for review. This keeps operations efficient while ensuring business rules are never compromised.

Example 3: AI in customer retention workflows

Customer churn prediction models are often followed by retention offers. If an AI agent directly generates offers, it must follow rules for discount caps, eligibility windows, and customer categories.

The logic ensures fairness, prevents revenue leakage, and keeps human teams in the loop for exceptions. Here again, autonomy doesn’t mean acting without supervision; it means acting intelligently within trusted limits.
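As an illustration, an offer check could look like the sketch below. The cap, the 90-day window, and the eligible categories are invented values, and "eligibility window" is interpreted here as a minimum gap since the customer's last offer, which is an assumption.

```python
from datetime import date, timedelta

# Hypothetical retention policy.
DISCOUNT_CAP_PCT = 25
ELIGIBILITY_WINDOW_DAYS = 90
ELIGIBLE_CATEGORIES = {"standard", "premium"}


def vet_offer(offer: dict, customer: dict, today: date) -> tuple[str, list[str]]:
    """Return ("send", []) if the offer follows every rule, else escalate with reasons."""
    reasons = []
    if offer["discount_pct"] > DISCOUNT_CAP_PCT:
        reasons.append("discount exceeds cap")
    last_offer = customer.get("last_offer_date")
    if last_offer is not None and today - last_offer < timedelta(days=ELIGIBILITY_WINDOW_DAYS):
        reasons.append("previous offer too recent")
    if customer["category"] not in ELIGIBLE_CATEGORIES:
        reasons.append("customer category not eligible")
    return ("send" if not reasons else "escalate_to_human"), reasons
```

Escalating with reasons, rather than silently dropping the offer, is what keeps human teams in the loop for exceptions.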

4. Designing systems that stay explainable

One of the hidden strengths of AI agents lies in their traceability. Every decision, when logged with the rule that guided it, creates a transparent record. This not only supports AI governance but also builds user confidence.

When teams can see why a decision was made — not just what was decided — they can improve, adjust, and trust the automation process. Explanation bridges the gap between technical performance and human understanding.

To make this possible:

- Keep all business logic in readable formats.

- Record decision paths with timestamps and rule identifiers.

- Review audit logs regularly for anomalies or inconsistencies.

Transparency is not just a compliance checkbox. It is the main reason people continue trusting the system long after the novelty fades.
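A decision record with a timestamp and rule identifier can be as plain as one JSON line per decision. The field names here are hypothetical, and a list stands in for whatever log store you actually use.

```python
import json
from datetime import datetime, timezone


def log_decision(log: list, decision: str, rule_id: str, rule_version: str, inputs: dict) -> str:
    """Append one readable JSON line describing a decision and the rule behind it."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "decision": decision,
        "rule_id": rule_id,
        "rule_version": rule_version,
        "inputs": inputs,
    }
    line = json.dumps(entry, sort_keys=True)
    log.append(line)
    return line
```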

5. Continuous refinement through feedback

Rules and models must evolve together. Business rules can become outdated if markets, regulations, or customer behavior shift. Likewise, AI agents that learn new patterns might challenge older logic. The two must communicate.

A strong feedback loop helps detect when rules start conflicting with learned behavior. For example:

- If an agent repeatedly fails a certain rule, it might signal that the rule is too rigid or poorly defined.

- If an agent bypasses valid rules too often, governance mechanisms should trigger deeper audits.

The right approach is not to hard-code control forever, but to make adaptability safe. Systems should review both rules and outcomes periodically to maintain relevance without losing discipline.
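One simple signal for the first case above is the failure rate per rule. The sketch below assumes validation events arrive as `(rule_id, passed)` pairs and uses an arbitrary 30% threshold; both are assumptions for illustration.

```python
from collections import Counter
from typing import Iterable


def rule_failure_rates(events: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Compute the share of failed checks for each rule."""
    total: Counter = Counter()
    failed: Counter = Counter()
    for rule_id, passed in events:
        total[rule_id] += 1
        if not passed:
            failed[rule_id] += 1
    return {rule_id: failed[rule_id] / total[rule_id] for rule_id in total}


def rules_needing_review(events: Iterable[tuple[str, bool]], threshold: float = 0.3) -> list[str]:
    """List rules whose failure rate suggests they may be too rigid or poorly defined."""
    rates = rule_failure_rates(events)
    return sorted(rule_id for rule_id, rate in rates.items() if rate >= threshold)
```

A high rate does not say whether the rule or the agent is at fault; it only tells reviewers where to look first.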

6. Why independence without oversight fails

When systems act freely without enforced logic, the cost is not just errors — it’s erosion of trust.

Employees begin to doubt outputs, customers question consistency, and leadership hesitates to scale automation further.

Every broken rule chips away at the reliability of the entire AI initiative.

By contrast, when AI agents operate within tested, explainable boundaries, they extend what teams can do. They take on repetitive decisions, manage complexity, and respond faster, all while staying compliant with established business rules.

The right mix of logic enforcement and governance allows independence to serve structure, not escape it.

7. A practical framework to get started

For organizations designing or refining their own systems, here’s a short framework that helps maintain balance:

- List all business rules that affect a process.

- Prioritize them by importance: non-negotiable, conditional, and flexible.

- Build a rule repository that both humans and systems can query.

- Integrate validation gates at the intent, plan, and action stages.

- Audit regularly. Don’t rely on incident-based reviews.

- Train teams to understand how agents make decisions and where interventions occur.

This framework keeps autonomy functional rather than ornamental. It ensures that AI agents behave consistently across departments, products, and customer experiences.
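To make the second and third steps concrete, here is a minimal sketch of a rule repository that stores each rule with its priority tier and returns the non-negotiable ones first. The class names and fields are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class Tier(IntEnum):
    NON_NEGOTIABLE = 0
    CONDITIONAL = 1
    FLEXIBLE = 2


@dataclass(frozen=True)
class RuleEntry:
    rule_id: str
    process: str
    tier: Tier
    description: str   # human-readable, so people and systems query the same source


class RuleRepository:
    def __init__(self) -> None:
        self._rules: dict[str, RuleEntry] = {}

    def add(self, entry: RuleEntry) -> None:
        if entry.rule_id in self._rules:
            raise ValueError(f"duplicate rule id: {entry.rule_id}")
        self._rules[entry.rule_id] = entry

    def for_process(self, process: str) -> list[RuleEntry]:
        """Return the rules for a process, strictest tier first."""
        matches = [r for r in self._rules.values() if r.process == process]
        return sorted(matches, key=lambda r: (r.tier, r.rule_id))
```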

8. Balancing autonomy and assurance

Autonomy and control do not compete; they coexist. When structured correctly, autonomy gives scale, and control provides safety. The relationship between the two should be seen not as a trade-off but as a partnership.

A system designed this way reflects how real teams work — people think independently but within shared principles. The same should hold true for intelligent systems that represent your brand in digital environments.

Closing thoughts

Independence makes AI useful. Discipline makes it reliable. The future of intelligent automation lies not in removing rules but in building better ways to follow them. When AI agents can act on their own and still respect the business rules that define organizational order, automation stops being experimental and becomes part of how modern companies operate safely at scale. Well-designed systems don’t just act smart; they act correctly. That’s what separates real intelligence from reckless automation.