A finance lead once described their migration issue in a single line: “The system ran, but the reports didn’t look like my business anymore.” Nothing had changed in their operations; only the data structures had. That alone was enough to distort how decisions were made. It happened because the data moved without careful verification.

This is why data verification services and cloud database modernization workflows now sit at the center of migration planning, a pattern also seen across enterprise migration and modernization initiatives where data accuracy drives project success. They protect the meaning of the data, not just the tables.

This blog explains what these checks involve, how they differ from validation, the tools that support them, and how teams can track accuracy before, during, and after the migration. It stays focused on the business problem: reducing risk when data moves from one state to another.

What is the main intent behind data verification services during migration?

The primary intent is to confirm that the data moved is complete, consistent, and usable in the new environment. Teams rely on data verification services to ensure that the migrated data retains its meaning, lineage, and operational context. This is closely tied to cloud database modernization, where legacy structures need clean alignment with new schema, storage formats, and access layers.

Migrating systems without such checks often leads to hidden errors that surface only when reporting, analytics, or transactions start breaking.

How is verification different from validation in a migration project?

Although the two words sound similar, they perform different roles. Mixing them usually leads to gaps and unclear accountability.

Verification asks a simple question:

“Did the data move correctly and completely?”

Validation asks another question:

“Is this data correct for the business process?”

Clear difference

- Verification focuses on structural accuracy. Examples include counts, formats, field types, precision, and completeness.

- Validation focuses on business correctness. Examples include rules, thresholds, logic checks, and domain compliance.

Example

If a customer table shows 12,540 records in the old system and 12,540 in the new system, that is verification.

If the system checks whether customers with inactive status should be excluded from a certain workflow, that is validation.

During cloud database modernization, both layers are required, and both rely on disciplined cloud engineering practices that maintain consistency across distributed systems. Verification ensures that “the data you moved is the same data you had.” Validation ensures that “the data being used is correct in your new process.”
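
The distinction can be made concrete with a minimal Python sketch. The in-memory tables, the field names, and the rule that inactive customers are excluded from the workflow are all illustrative assumptions, not part of any specific system.

```python
# Hypothetical in-memory stand-ins for the source and target customer tables.
source_customers = [
    {"id": 1, "status": "active"},
    {"id": 2, "status": "inactive"},
    {"id": 3, "status": "active"},
]
target_customers = [dict(row) for row in source_customers]


def verify_row_count(source, target):
    """Verification: did the same number of records arrive?"""
    return len(source) == len(target)


def validate_workflow_input(rows):
    """Validation: apply the (assumed) business rule that inactive
    customers must not enter the workflow; return the rows that may."""
    return [row for row in rows if row["status"] != "inactive"]


print(verify_row_count(source_customers, target_customers))
print([row["id"] for row in validate_workflow_input(target_customers)])
```

The first function knows nothing about the business; the second knows nothing about the migration. Keeping them separate is what keeps accountability clear.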

What verification checks should teams include during migration?

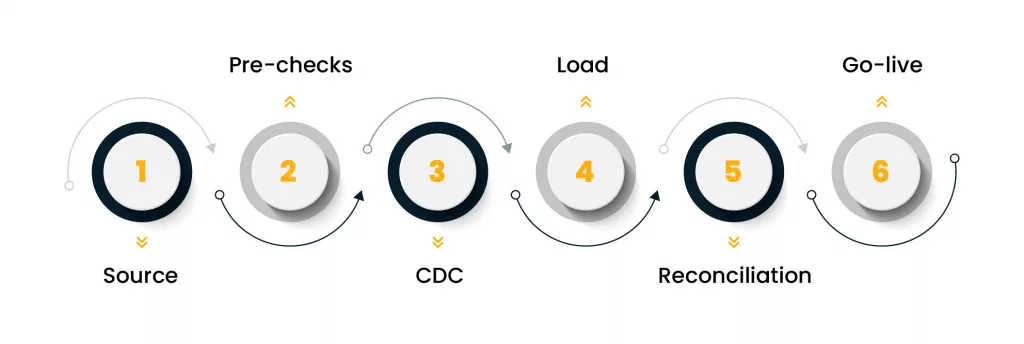

A migration requires structured checks at multiple stages. Below are the core components that any team implementing data verification services should track.

1. Pre-migration verification checks

These confirm source readiness.

- Field type consistency

- Primary key and foreign key correctness

- Null value distribution

- Duplicate analysis

- Baseline profiling of metrics such as row counts, pattern frequency, and constraint health

This establishes a reference point. Without it, there is no way to measure changes after migration.
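
As a rough illustration, a baseline profile can be as small as a dictionary of counts captured before any data moves. The sketch below assumes rows are available as Python dictionaries; `profile_table` and its output keys are hypothetical names chosen for this example.

```python
from collections import Counter


def profile_table(rows, key_field):
    """Build a baseline profile: row count, null counts per field,
    and key values that occur more than once."""
    null_counts = Counter()
    key_counts = Counter()
    for row in rows:
        key_counts[row.get(key_field)] += 1
        for field, value in row.items():
            if value is None:
                null_counts[field] += 1
    # Counter keeps insertion order, so duplicates are listed as first seen.
    duplicates = [k for k, n in key_counts.items() if n > 1]
    return {
        "row_count": len(rows),
        "null_counts": dict(null_counts),
        "duplicate_keys": duplicates,
    }


sample_rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
    {"id": 2, "email": "c@example.com"},
]
print(profile_table(sample_rows, key_field="id"))
```

Storing this profile alongside the migration run gives later checks something fixed to compare against.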

2. In-flight verification checks

These checks monitor the data as it moves.

- Batch count tracking

- Pipeline error monitoring

- Change data capture (CDC) comparison

- Format consistency between zones (raw, curated, processed)

These checks help teams detect mismatches before they reach the target zone.
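
Batch count tracking, for instance, can be sketched as a comparison of per-batch row counts logged on each side of the pipeline. The batch identifiers and the two dictionaries below are assumed inputs; a real pipeline would read them from its own run logs.

```python
def find_batch_mismatches(sent_counts, received_counts):
    """Compare per-batch row counts recorded at the sender and receiver.

    Returns {batch_id: (sent, received)} for every batch whose counts
    differ. A batch missing on one side is reported with None."""
    mismatches = {}
    for batch_id in sorted(set(sent_counts) | set(received_counts)):
        sent = sent_counts.get(batch_id)
        received = received_counts.get(batch_id)
        if sent != received:
            mismatches[batch_id] = (sent, received)
    return mismatches


sent = {"batch-001": 5000, "batch-002": 5000, "batch-003": 4200}
received = {"batch-001": 5000, "batch-002": 4998}
print(find_batch_mismatches(sent, received))
```

Here the second batch lost two rows and the third never arrived, both of which are cheaper to fix in flight than after the load completes.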

3. Post-migration verification checks

After migration, deeper checks confirm completeness and structural accuracy.

- Full table reconciliation

- Field-level mapping confirmation

- Constraint verification

- Transformation rule consistency

- Referential integrity testing

These checks carry the highest impact because they directly determine whether the system can go live without data-related incidents.
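
One way to picture a field-level check is a keyed comparison of source and target rows. This sketch assumes the mapping has already been applied so both sides share field names, and that each key is unique; `diff_rows_by_key` is a hypothetical helper, not a library function.

```python
def diff_rows_by_key(source_rows, target_rows, key):
    """Compare rows that share a key.

    Returns (missing_keys, field_diffs): keys absent from the target,
    and (key, field, source_value, target_value) for each differing field."""
    target_by_key = {row[key]: row for row in target_rows}
    missing_keys = []
    field_diffs = []
    for src in source_rows:
        tgt = target_by_key.get(src[key])
        if tgt is None:
            missing_keys.append(src[key])
            continue
        for field, value in src.items():
            if tgt.get(field) != value:
                field_diffs.append((src[key], field, value, tgt.get(field)))
    return missing_keys, field_diffs


source = [
    {"id": 1, "name": "Ada", "city": "Paris"},
    {"id": 2, "name": "Lin", "city": "Oslo"},
    {"id": 3, "name": "Sam", "city": "Rome"},
]
target = [
    {"id": 1, "name": "Ada", "city": "Paris"},
    {"id": 2, "name": "Lin", "city": "OSLO"},
]
print(diff_rows_by_key(source, target, key="id"))
```

A row-by-row comparison like this does not scale to very large tables on its own, so it is usually run on samples or on partitions flagged by aggregate checks.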

What role does reconciliation play in data verification?

Reconciliation ensures that what left the source matches what landed in the target. It acts as an arithmetic cross-check for the migration.

Teams compare:

- Row counts

- Sum totals

- Distinct counts

- Hash checks

- Frequency distributions

A proper reconciliation process uses automated scripts to compare datasets at scale. This is a standard step in structured data migration workflows and reduces reconciliation errors during cutover. Manual spreadsheets are error-prone, especially when the dataset runs to millions or billions of rows.

During cloud database modernization, reconciliation is essential because target systems often use different indexing strategies, compression methods, and storage patterns. A mismatch may not break the system immediately, but it will distort analytics or reporting.

You must include reconciliation at least twice: once during initial test loads and once after the final cutover.
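
The comparisons listed above can be sketched as a single order-independent fingerprint per table. This is an illustration under stated assumptions: rows fit in memory, amounts are `Decimal` values, and `repr` is an acceptable serialization for hashing. A production pipeline would need a canonical serialization agreed between source and target, since values that compare equal (such as `Decimal("10.0")` and `Decimal("10.00")`) can serialize differently.

```python
import hashlib
from decimal import Decimal


def table_fingerprint(rows, key, amount_field):
    """Summarize a table with aggregates that ignore row order."""
    row_hashes = sorted(
        hashlib.sha256(repr(sorted(row.items())).encode("utf-8")).hexdigest()
        for row in rows
    )
    return {
        "row_count": len(rows),
        "sum_total": sum((row[amount_field] for row in rows), Decimal("0")),
        "distinct_keys": len({row[key] for row in rows}),
        "table_hash": hashlib.sha256("".join(row_hashes).encode("utf-8")).hexdigest(),
    }


def reconcile(source_rows, target_rows, key, amount_field):
    """Return {metric: True/False} showing which aggregates match."""
    src = table_fingerprint(source_rows, key, amount_field)
    tgt = table_fingerprint(target_rows, key, amount_field)
    return {metric: src[metric] == tgt[metric] for metric in src}


source = [{"id": 1, "amount": Decimal("10.00")}, {"id": 2, "amount": Decimal("5.50")}]
target_ok = list(reversed(source))
target_bad = [{"id": 1, "amount": Decimal("10.00")}, {"id": 2, "amount": Decimal("5.05")}]

print(reconcile(source, target_ok, "id", "amount"))
print(reconcile(source, target_bad, "id", "amount"))
```

In the second comparison the row count and distinct key count still match while the sum and hash do not, which is the kind of mismatch that counts alone would miss.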

Why is mapping essential during cloud database modernization?

Field mapping defines how each source attribute aligns with the target schema. With modernization, schemas rarely match one-to-one. Formats change, fields merge or split, and new entities appear as part of design improvements.

A mapping document should include:

- Source field names

- Target field names

- Field type conversions

- Null handling rules

- Transformation logic

- Conditional business rules

Mapping reduces misinterpretation. It ensures that a date field from an on-prem system maps correctly to a timestamp with time zone in the cloud. It ensures that a monetary field from an ERP moves into a DECIMAL(18,2) field and not a floating-point type that may introduce rounding errors.

Within data verification services, mapping acts as the backbone for every check that follows.
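
A mapping document can also be expressed in code so that checks run against it directly. The sketch below is hypothetical throughout: the source field names (`CUST_NM`, `ORD_DT`, `AMT`), the target names, and the assumption that source dates are in UTC are all invented for illustration. Null amounts and null dates are not handled here.

```python
from datetime import datetime, timezone
from decimal import Decimal

# Hypothetical mapping spec: source field -> (target field, converter).
FIELD_MAPPING = {
    # Null handling rule: keep None, otherwise trim whitespace.
    "CUST_NM": ("customer_name", lambda v: v.strip() if v is not None else None),
    # Assumption: source dates are UTC calendar dates in YYYY-MM-DD form.
    "ORD_DT": (
        "ordered_at",
        lambda v: datetime.strptime(v, "%Y-%m-%d").replace(tzinfo=timezone.utc),
    ),
    # Monetary values go to a two-decimal Decimal, never a float.
    "AMT": ("amount", lambda v: Decimal(v).quantize(Decimal("0.01"))),
}


def apply_mapping(source_row):
    """Convert one source row into the target shape using FIELD_MAPPING."""
    return {
        target: convert(source_row[source])
        for source, (target, convert) in FIELD_MAPPING.items()
    }


row = {"CUST_NM": "  Acme Ltd ", "ORD_DT": "2024-03-15", "AMT": "19.9"}
print(apply_mapping(row))

# Why not float: binary floating point cannot represent 0.1 exactly.
print(0.1 + 0.2 == 0.3)
print(Decimal("0.1") + Decimal("0.2") == Decimal("0.3"))
```

Keeping the mapping as data rather than scattered transformation code means the same structure can drive the migration, the documentation, and the verification checks.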

What is integrity testing and why is it so important during migration?

Integrity testing confirms that relationships in the data are still valid after moving to the cloud. It checks whether foreign keys match, whether constraints remain intact, and whether transformations preserve logical links.

Types of integrity testing:

- Entity integrity: primary keys exist and remain unique

- Referential integrity: foreign keys point to valid parent rows

- Domain integrity: values fall within expected ranges

- Business integrity: rule-based correctness across groups

During cloud database modernization, integrity issues can cause silent failures, which is why many enterprises strengthen QA with enterprise quality engineering methods to prevent logical breaks during migration. For example, orders may appear without matching customers, or invoices may lack associated line items. These failures often surface only after go-live, causing system instability. Integrity testing is how teams catch these gaps before then.
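
The orders-without-customers case can be tested with a single anti-join. This sketch uses Python's built-in `sqlite3` module with an in-memory database; the `customers` and `orders` tables and their columns are assumed names for illustration, and the same query pattern carries over to most SQL engines.

```python
import sqlite3


def find_orphan_orders(conn):
    """Return ids of orders whose customer_id has no matching customer row."""
    cursor = conn.execute(
        """
        SELECT o.id
        FROM orders AS o
        LEFT JOIN customers AS c ON c.id = o.customer_id
        WHERE c.id IS NULL
        ORDER BY o.id
        """
    )
    return [row[0] for row in cursor.fetchall()]


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER);
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Lin');
    INSERT INTO orders VALUES (100, 1), (101, 2), (102, 7);
    """
)
print(find_orphan_orders(conn))  # [102]
```

An empty result is the pass condition. Any returned id is a referential integrity failure that should block go-live until it is explained.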

What tools support data verification services?

Below is a practical toolset used in enterprise migration and cloud database modernization programs. They support automation, reproducibility, and audit trails.

1. Open-source tools

- Apache Griffin for data quality rules

- Great Expectations for validation and verification

- OpenRefine for profiling and cleanup

- dbt tests for SQL-based checks

2. Cloud provider tools

- AWS Glue DataBrew for profiling

- Azure Data Factory validation checks

- GCP Dataplex for metadata-based quality checks

3. Enterprise platforms

- Informatica Data Quality

- Talend Data Quality

- Collibra for governance

These tools support rule execution, profiling, reconciliation, and publishing reports for stakeholders. Most can be wired into modern pipelines, and several support metadata-driven workflows.

How do teams track accuracy during data verification and cloud database modernization?

Accuracy tracking helps teams monitor progress, detect gaps, and confirm readiness for go-live. This part must be measurable, not subjective.

The framework below covers four areas:

1. Accuracy KPIs

- Row match accuracy

- Field-level match accuracy

- Rule pass percentage

- Constraint health score

- Mapping compliance rate

KPIs provide a numerical view of migration quality.
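
As a rough sketch, the first three KPIs reduce to simple ratios over counts that the verification checks already produce. The function names and the sample counts below are illustrative assumptions; how a team defines a "matched" row or field is a decision for its own mapping and reconciliation rules.

```python
def percentage(passed, total):
    """Return passed/total as a percentage rounded to two decimals,
    or None when nothing was measured."""
    if total == 0:
        return None
    return round(100.0 * passed / total, 2)


def accuracy_kpis(matched_rows, source_rows,
                  matched_fields, compared_fields,
                  rules_passed, rules_run):
    """Collect the ratio-based KPIs into one dictionary."""
    return {
        "row_match_accuracy": percentage(matched_rows, source_rows),
        "field_match_accuracy": percentage(matched_fields, compared_fields),
        "rule_pass_percentage": percentage(rules_passed, rules_run),
    }


kpis = accuracy_kpis(
    matched_rows=12530, source_rows=12540,
    matched_fields=250750, compared_fields=250800,
    rules_passed=47, rules_run=50,
)
print(kpis)
```

Returning `None` instead of zero for an unmeasured KPI keeps "not yet checked" distinct from "checked and failed" on a scorecard.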

2. Data quality scorecards

Scorecards consolidate accuracy metrics into one report for leadership. They display:

- Pass vs fail trends

- Failure hotspots

- Priority gaps

- Cycle-over-cycle improvements

3. Automated drift detection

When data changes between test loads, drift detection identifies:

- Pattern shifts

- Schema changes

- Unexpected nulls

- Frequency deviations

This is especially important during long cloud database modernization programs where migrations occur in batches.
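
A minimal drift check compares a profile from the previous load with one from the current load. The profile shape used here (a `columns` dictionary of type names and a `null_rates` dictionary) and the one-percentage-point tolerance are assumptions made for this sketch.

```python
def detect_drift(previous, current, null_rate_tolerance=0.01):
    """Compare two load profiles and list schema changes and null-rate jumps.

    Each profile is {"columns": {name: type_name}, "null_rates": {name: rate}}."""
    findings = []
    prev_cols, curr_cols = previous["columns"], current["columns"]
    for name in sorted(set(prev_cols) - set(curr_cols)):
        findings.append(f"column removed: {name}")
    for name in sorted(set(curr_cols) - set(prev_cols)):
        findings.append(f"column added: {name}")
    for name in sorted(set(prev_cols) & set(curr_cols)):
        if prev_cols[name] != curr_cols[name]:
            findings.append(
                f"type changed: {name} {prev_cols[name]} -> {curr_cols[name]}"
            )
        # Columns without a recorded null rate are treated as 0.0.
        delta = (current["null_rates"].get(name, 0.0)
                 - previous["null_rates"].get(name, 0.0))
        if delta > null_rate_tolerance:
            findings.append(f"null rate up: {name} +{delta:.2%}")
    return findings


load_1 = {
    "columns": {"id": "int", "email": "str", "phone": "str"},
    "null_rates": {"email": 0.02},
}
load_2 = {
    "columns": {"id": "int", "email": "str", "signup_ts": "str"},
    "null_rates": {"email": 0.10},
}
for finding in detect_drift(load_1, load_2):
    print(finding)
```

Pattern shifts and frequency deviations follow the same idea: record a distribution per load, then flag differences that exceed an agreed tolerance.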

4. Real-time dashboards

Platforms like Power BI, Looker, and QuickSight show:

- Load status

- Rule compliance

- Error clusters

This allows teams to act on issues quickly instead of waiting for manual review.

FAQs

What are data verification services during migration?

They are structured checks that confirm data moved correctly, completely, and in the expected format. They reduce the risk of errors during cloud database modernization.

How is verification different from validation?

Verification checks completeness and structural accuracy. Validation checks business correctness.

Why is reconciliation important?

It confirms that record counts, sums, and hash values match between source and target systems.

How does mapping support migrations?

Mapping aligns fields between legacy and cloud systems, ensuring format and rule consistency.

What tools help run verification checks?

Tools include Great Expectations, Apache Griffin, AWS Glue DataBrew, and enterprise platforms like Informatica.

What is the real value of data verification services during migration?

Teams often assume that migration success depends on tools or automation. It depends more on how accurately the data survives the journey. Well-run data verification services reduce rework, avoid production failures, and provide confidence that the modern system is ready for business use. They also strengthen every stage of cloud database modernization, especially when legacy systems carry years of inconsistent structures.

If teams invest early in mapping, reconciliation, profiling, and integrity testing, they avoid the most common pitfalls. The process may feel tedious, but it remains one of the clearest paths to reliable modernization.