If your release train keeps missing stations, the problem is not speed; it is the feedback loop.

Modern enterprises run on complex systems that never sit still. Releases land weekly or daily. Teams work across time zones, vendors, and stacks. In this setting, enterprise applications testing services are not just about catching bugs. They are about shaping a repeatable way to ship with confidence.

This guide sets clear expectations. It gives you a practical view of scope, outcomes, methods, and the artifacts you should receive from a serious partner. No fluff, just a field-tested playbook.

Who needs these services and when?

Look for a partner when any of the following is true:

- Critical projects pile up behind slow test cycles.

- Incidents keep slipping through to production.

- You cannot trace features to tests and risks.

- Environments and data are constantly out of sync.

- Tooling exists, but results are noisy or stale.

- You want consistent quality across ERP, CRM, mobile, data, and custom apps.

If two or more of these hit home, enterprise applications testing services will pay back quickly.

What does “good” look like?

A strong engagement sets measurable, near-term outcomes. Expect improvements like:

- Release readiness scored for every drop, not just a gut feel.

- Defect leakage down 30 to 60 percent within a quarter.

- Mean time to detect issues cut by half.

- Automated smoke and health checks running in build and in prod.

- Traceability from requirement to test to risk to control.

- Clear coverage for priority user journeys and core APIs.

You are not buying heroics. You are buying a system that keeps working when people are busy or teams change. That is the core value of enterprise applications testing services.

Service scope you should expect

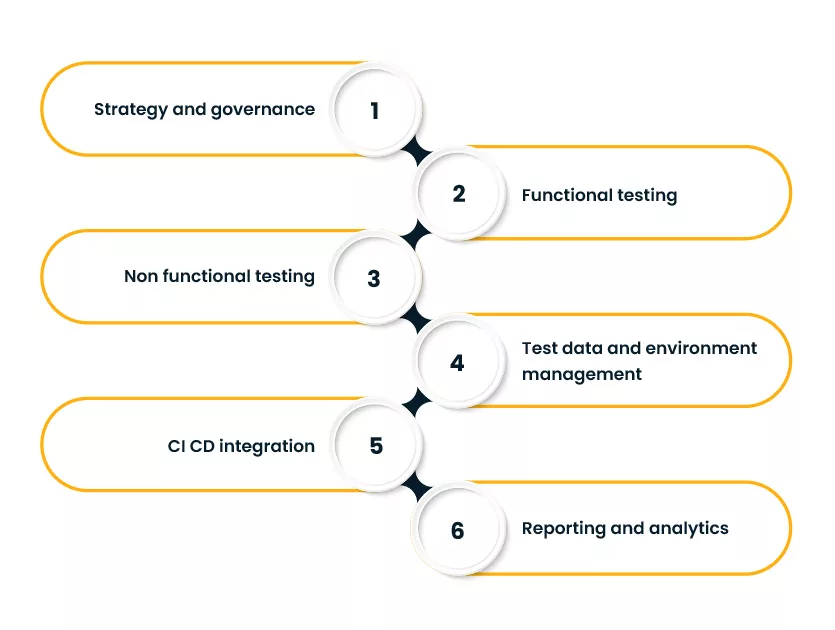

A complete program covers functional and non-functional quality. Look for these workstreams:

- Strategy and governance
  - Quality objectives mapped to business risk
  - Test policy, roles, RACI, and a living service catalog
  - Vendor and tool rationalization

- Functional testing
  - Unit, component, API, UI, and end-to-end
  - Data validation across systems of record and analytics
  - Integration and contract testing for third-party and internal services

- Non-functional testing
  - Performance, reliability, capacity, and failover
  - Accessibility and usability
  - Privacy, compliance, and basic security checks

- Test data and environment management
  - Synthetic data design, masking, and subsetting
  - Environment topology map and booking rules

- CI/CD integration
  - Pipeline gates for critical flows
  - Flaky test control and health scoring

- Reporting and analytics
  - Release confidence index, defect removal efficiency (DRE), change failure rate, and MTTR
  - Business-facing dashboards by product and portfolio

These blocks form the base of enterprise applications testing services across industries.

How is the work delivered?

Expect a steady rhythm. A simple model works well:

- Days 0 to 14: Discovery and baselining
  - Risk workshops, architecture walkthroughs, and environment review
  - Inventory of tests, data, and CI jobs
  - Initial health score with a one-page summary

- Days 15 to 45: Stabilize the flow
  - Set up pipeline smoke tests for top user journeys and APIs
  - Create a shared test data pack and a booking rule for environments
  - Introduce triage and a daily quality standup

- Days 46 to 90: Scale with purpose
  - Expand coverage to priority modules and integrations
  - Add performance and basic security checks into CI
  - Publish a release confidence dashboard to product owners

- Beyond 90 days: Continuous improvement
  - Tune coverage based on risk and usage analytics
  - Mature failure analysis and defect prevention
  - Quarterly service review with goals and budgets
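The environment booking rule from the stabilization phase can start as a single check: no two reservations may overlap on the same environment. Here is a minimal Python sketch, assuming bookings are recorded as an environment, a team, and a start/end window. The `Booking` type and `find_conflicts` helper are hypothetical names. Windows are treated as half-open, so back-to-back slots do not clash.

```python
from dataclasses import dataclass
from datetime import datetime
from itertools import groupby


@dataclass(frozen=True)
class Booking:
    # Hypothetical booking record: one team reserves one environment for a window.
    env: str
    team: str
    start: datetime
    end: datetime


def find_conflicts(bookings: list[Booking]) -> list[tuple[Booking, Booking]]:
    """Return pairs of bookings that overlap on the same environment."""
    conflicts = []
    ordered = sorted(bookings, key=lambda b: (b.env, b.start))
    for _, group in groupby(ordered, key=lambda b: b.env):
        items = list(group)
        for i, current in enumerate(items):
            for later in items[i + 1:]:
                if later.start >= current.end:
                    break  # sorted by start, so no later booking can overlap this one
                conflicts.append((current, later))
    return conflicts


bookings = [
    Booking("uat-1", "payments", datetime(2024, 5, 6, 9), datetime(2024, 5, 6, 12)),
    Booking("uat-1", "crm", datetime(2024, 5, 6, 11), datetime(2024, 5, 6, 14)),
    Booking("uat-2", "erp", datetime(2024, 5, 6, 9), datetime(2024, 5, 6, 17)),
]
for a, b in find_conflicts(bookings):
    print(f"{a.env}: {a.team} overlaps {b.team}")
```

Run this in the booking tool or as a scheduled job, and reject or flag any new reservation that produces a conflict.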

The test pyramid, adapted for enterprise systems

The usual pyramid needs a layer for contracts and data. Here is a simple view you can share with teams.

From top to base:

- UI flows
- End-to-end checks
- API and contract tests
- Component and integration tests
- Data validation and rules
- Unit tests

The goal is balance. Teams often chase UI automation first, then stall on maintenance. A better path begins with API, contract, and component tests, with targeted UI coverage where it matters. That balance should be explicit in any enterprise applications testing services proposal.
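To make the balance explicit, you can count tests per layer and flag any layer that holds more tests than the layer beneath it. Here is a minimal sketch, assuming per-layer counts come from your test runner or repo conventions. The layer labels follow the pyramid above, and `inverted_layers` is a hypothetical helper. A strict layer-by-layer rule is a heuristic, so treat a flag as a prompt for discussion rather than an automatic failure.

```python
# Pyramid layers from the top (narrowest) to the base (broadest).
LAYERS = [
    "UI flows",
    "End-to-end checks",
    "API and contract tests",
    "Component and integration tests",
    "Data validation and rules",
    "Unit tests",
]


def inverted_layers(counts: dict[str, int]) -> list[str]:
    """Return a description of each layer that outnumbers the layer directly below it."""
    problems = []
    for upper, lower in zip(LAYERS, LAYERS[1:]):
        up, low = counts.get(upper, 0), counts.get(lower, 0)
        if up > low:
            problems.append(f"{upper} ({up}) > {lower} ({low})")
    return problems


# Illustrative counts only.
counts = {
    "UI flows": 420,
    "End-to-end checks": 60,
    "API and contract tests": 150,
    "Component and integration tests": 300,
    "Data validation and rules": 400,
    "Unit tests": 2500,
}
for problem in inverted_layers(counts):
    print("Inverted:", problem)
```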

The delivery loop

A clear loop keeps risk in check and releases moving.

Business goals → Risk profile → Test strategy → Environments → Data packs → Automation → Reporting → Action

Every arrow should map to a named artifact. Without those, work drifts.
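One way to keep every arrow tied to an artifact is to hold the loop as data and list the transitions that have none. Here is a minimal sketch. The artifact attached to each arrow is an illustrative assumption loosely based on typical deliverables. The last arrow is deliberately left unmapped to show what a gap looks like.

```python
# The delivery loop, in order; each adjacent pair is one arrow.
LOOP = [
    "Business goals", "Risk profile", "Test strategy", "Environments",
    "Data packs", "Automation", "Reporting", "Action",
]

# Hypothetical mapping of each arrow to the named artifact that carries it.
ARTIFACTS = {
    ("Business goals", "Risk profile"): "Risk register",
    ("Risk profile", "Test strategy"): "Quality strategy",
    ("Test strategy", "Environments"): "Environment topology",
    ("Environments", "Data packs"): "Test data blueprint",
    ("Data packs", "Automation"): "Automation architecture",
    ("Automation", "Reporting"): "Pipeline gate definitions",
    # ("Reporting", "Action") is left unmapped on purpose to show a gap.
}


def unmapped_arrows(loop: list[str], artifacts: dict) -> list[tuple[str, str]]:
    """Return every arrow in the loop that has no named artifact."""
    return [(src, dst) for src, dst in zip(loop, loop[1:]) if not artifacts.get((src, dst))]


for src, dst in unmapped_arrows(LOOP, ARTIFACTS):
    print(f"No artifact for {src} -> {dst}: work here will drift")
```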

Artifacts you should receive

You are paying for more than execution. Ask for these deliverables:

- Quality strategy and risk register

- Test plan per product with entry and exit rules

- Traceability matrix from requirement to test and control

- Test data blueprint with masking rules and seed packs

- Environment topology and booking calendar

- Automation architecture with a repo map and coding standards

- Pipeline gate definitions and a flaky test dashboard

- Performance test scripts and a capacity forecast

- Security checklist and a remediation playbook

- Release confidence dashboard with definitions and thresholds

If a partner in enterprise applications testing services cannot provide these, keep looking.

Tooling that fits the job

Tool names change; principles do not. Here is a practical checklist to guide choices:

- Source control and CI. Branch strategy, build isolation, artifact retention

- Test runners. Language fit, parallelism, retry policy, and assertion clarity

- API testing. Contract schemas, mock servers, and environment variables

- UI testing. Stable locators, visual checks where they add value, and device coverage

- Performance. Workload models tied to traffic and business events

- Security. SAST and DAST in CI with clear severity rules and SLAs

- Data. Masking, generation, and referential integrity for end-to-end flows

- Reporting. Drill-downs by product, release, test suite, and failure reason

Tools exist to serve the flow, not the other way around.
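For the data item, deterministic pseudonymization is one common way to mask identifiers while keeping referential integrity. The same real value always maps to the same token, so foreign keys still join across tables. Here is a minimal sketch using Python's standard `hmac` and `hashlib` modules. The key, the record shapes, and the `mask` helper are hypothetical, and a real program would load the key from a secrets store.

```python
import hashlib
import hmac

# Hypothetical masking key; load it from a secrets store, never from source control.
MASKING_KEY = b"replace-with-a-managed-secret"


def mask(value: str, prefix: str = "cust") -> str:
    """Map a real identifier to a stable pseudonym using HMAC-SHA256."""
    digest = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{prefix}_{digest[:12]}"


customers = [{"customer_id": "C-1001", "name": "Ada Example"}]
orders = [{"order_id": "O-1", "customer_id": "C-1001", "total": 42.0}]

masked_customers = [
    {"customer_id": mask(c["customer_id"]), "name": "Customer " + mask(c["customer_id"])[-4:]}
    for c in customers
]
masked_orders = [{**o, "customer_id": mask(o["customer_id"])} for o in orders]

# The foreign key still joins after masking because the mapping is deterministic.
assert masked_orders[0]["customer_id"] == masked_customers[0]["customer_id"]
print(masked_orders[0]["customer_id"])
```

Truncating the digest to 12 hex characters keeps tokens short but raises the collision risk on very large tables. Keep more characters if that matters.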

Security, reliability, and compliance

Quality is broader than pass or fail. A good partner weaves privacy, reliability, and controls into daily work. Expect to see:

- Lightweight threat modeling during grooming

- Abuse case tests that mirror fraud and misuse patterns

- Performance budgets defined per critical journey

- Resilience checks for timeouts, retries, and fallbacks

- Privacy impact assessments aligned with masking rules

Teams that connect security and automation at the pipeline level cut cycle time and risk. Your program should state how security and automation work together, both in build and in production monitoring.
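Resilience checks for timeouts, retries, and fallbacks are easier to exercise when the policy lives in one small function that tests can call with a fake dependency. Here is a minimal sketch, assuming a synchronous call. `call_with_fallback` and its defaults (three attempts, a one-second timeout, exponential backoff) are hypothetical choices, not a recommended policy.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as CallTimeout
from typing import Callable, TypeVar

T = TypeVar("T")

_pool = ThreadPoolExecutor(max_workers=4)


def call_with_fallback(
    fn: Callable[[], T],
    fallback: Callable[[], T],
    attempts: int = 3,
    timeout_s: float = 1.0,
    backoff_s: float = 0.1,
) -> T:
    """Try fn up to `attempts` times, each bounded by `timeout_s`, then use fallback."""
    for attempt in range(1, attempts + 1):
        future = _pool.submit(fn)
        try:
            return future.result(timeout=timeout_s)
        except CallTimeout:
            pass  # stop waiting; the worker thread may still finish in the background
        except Exception:
            pass  # any error from the dependency counts as a failed attempt
        if attempt < attempts:
            time.sleep(backoff_s * 2 ** (attempt - 1))  # exponential backoff
    return fallback()


# Fake dependency that fails twice, then recovers.
calls = {"n": 0}


def flaky_dependency() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("simulated outage")
    return "live"


print(call_with_fallback(flaky_dependency, lambda: "cached", backoff_s=0.01))
```

A timed-out call keeps running in its worker thread, and the wrapper only stops waiting for it. For real services, prefer the timeout settings of your HTTP or database client so the underlying work is actually cancelled.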

Reporting that drives decisions

Dashboards should answer three questions fast:

- Can we ship today?

- What will break if we do nothing?

- Where will investment cut risk the most?

A solid reporting stack includes:

- Release confidence index with red and green gates

- DRE trend and leakage by module

- Test coverage heatmap for flows and APIs

- Flaky test tracker with owner and ETA

- Capacity and performance trend by key journeys

Senior leaders want patterns, not noise. Product teams want root causes and owners. Your enterprise applications testing services vendor should serve both views without double work.
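The release confidence index only works if it has a written definition. As one hypothetical illustration, it can be a weighted score over a few normalized signals, with a hard red gate for open blockers. The signal names, weights, and 0.8 green threshold below are assumptions to adapt, not a standard formula.

```python
from dataclasses import dataclass


@dataclass
class ReleaseSignals:
    # Each signal is normalized to 0.0 (bad) through 1.0 (good). Names are hypothetical.
    smoke_pass_rate: float    # share of smoke checks passing on the release candidate
    critical_coverage: float  # share of priority journeys and APIs with tests
    blocker_free: float       # 1.0 if no open severity-1 defects, else 0.0
    perf_in_budget: float     # share of key journeys within their performance budget


# Hypothetical weights; they sum to 1.0 so the index stays between 0 and 1.
WEIGHTS = {
    "smoke_pass_rate": 0.35,
    "critical_coverage": 0.25,
    "blocker_free": 0.25,
    "perf_in_budget": 0.15,
}
GREEN_THRESHOLD = 0.8  # hypothetical cut-off between red and green


def confidence_index(signals: ReleaseSignals) -> float:
    """Weighted sum of the normalized signals."""
    return sum(getattr(signals, name) * weight for name, weight in WEIGHTS.items())


def gate(signals: ReleaseSignals) -> str:
    """Return 'green' or 'red'; an open blocker forces red regardless of score."""
    if signals.blocker_free < 1.0:
        return "red"
    return "green" if confidence_index(signals) >= GREEN_THRESHOLD else "red"


candidate = ReleaseSignals(
    smoke_pass_rate=0.98, critical_coverage=0.85, blocker_free=1.0, perf_in_budget=0.9
)
print(f"index={confidence_index(candidate):.2f} gate={gate(candidate)}")
```

Publishing the weights and threshold next to the dashboard serves both audiences. Leaders see one number, and product teams can see which signal moved it.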

A practical way to measure value

Use a simple scorecard each month:

- Change failure rate

- DRE by product and by severity

- Time from merge to deploy

- Mean time to detect and to resolve

- Coverage for top journeys and APIs

- Ratio of automated to manual checks for each layer

Tie the scorecard to goals that matter to the business: fewer incidents, faster projects, happier users.
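Three of the scorecard items reduce to arithmetic over deployment and defect records. Here is a minimal sketch using common definitions:

- Change failure rate is failed deployments divided by all deployments.
- DRE is defects found before release divided by all defects, so leakage is 1 minus DRE.
- MTTR is read here as mean time to restore across failed deployments.

The `Deployment` record is a hypothetical shape.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record: whether the deploy caused a production failure,
    # and how long service took to restore if it did.
    failed: bool
    time_to_restore: Optional[timedelta] = None


def change_failure_rate(deploys: list[Deployment]) -> float:
    """Failed deployments divided by all deployments."""
    return sum(d.failed for d in deploys) / len(deploys) if deploys else 0.0


def defect_removal_efficiency(found_before_release: int, found_after_release: int) -> float:
    """Defects caught before release divided by all defects; leakage is 1 minus this."""
    total = found_before_release + found_after_release
    return found_before_release / total if total else 1.0  # assumption: no defects counts as 100%


def mean_time_to_restore(deploys: list[Deployment]) -> timedelta:
    """Average restore time across failed deployments that recorded one."""
    durations = [d.time_to_restore for d in deploys if d.failed and d.time_to_restore is not None]
    return sum(durations, timedelta(0)) / len(durations) if durations else timedelta(0)


month = [
    Deployment(failed=False),
    Deployment(failed=True, time_to_restore=timedelta(minutes=40)),
    Deployment(failed=False),
    Deployment(failed=True, time_to_restore=timedelta(minutes=80)),
]
print(f"Change failure rate: {change_failure_rate(month):.0%}")
print(f"DRE: {defect_removal_efficiency(90, 10):.0%}")
print(f"MTTR: {mean_time_to_restore(month)}")
```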

How do you choose a partner?

Do a short bake off. Use this checklist of questions:

- Show us a sample repo with test structure and coding standards

- Walk through your approach to data masking and synthetic data

- How do you stabilize flaky tests, and what is your target flake rate?

- What is your policy for test ownership across squads?

- Share a real release confidence dashboard with definitions

- Explain your approach to performance modeling and budgets

- Describe how you integrate privacy checks into grooming

- Provide a sample RACI and service catalog

You want practical proofs, not sales slides. The right enterprise software testing partner will speak in artifacts and numbers.

Pitfalls to avoid

- Starting with UI automation only. It feels visible, then it breaks often and slows teams.

- No ownership model. Tests without owners decay fast.

- Unstable environments. Results become random and trust erodes.

- Untamed flakiness. Retries hide real issues and burn time.

- Overlooking test data. Realistic data is the backbone of end to end checks.

A steady partner in enterprise applications testing services tackles these first.
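A common working definition of a flaky test is one that both passes and fails against the same code revision. Here is a minimal sketch that applies that rule to run history and computes a flake rate you can compare with a partner's target. The `(test, commit, passed)` record shape is an assumption.

```python
from collections import defaultdict


def find_flaky(runs: list[tuple[str, str, bool]]) -> set[str]:
    """runs holds (test_name, commit_sha, passed). Flaky means mixed outcomes on one commit."""
    outcomes = defaultdict(set)
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    return {test for (test, _), seen in outcomes.items() if len(seen) == 2}


def flake_rate(runs: list[tuple[str, str, bool]]) -> float:
    """Share of distinct tests that showed flaky behavior."""
    tests = {test for test, _, _ in runs}
    return len(find_flaky(runs)) / len(tests) if tests else 0.0


history = [
    ("test_checkout_total", "a1b2c3", True),
    ("test_checkout_total", "a1b2c3", False),  # same commit, different result: flaky
    ("test_login", "a1b2c3", True),
    ("test_login", "d4e5f6", False),           # different commit: a regression candidate
    ("test_invoice_pdf", "a1b2c3", True),
]
print(sorted(find_flaky(history)), f"{flake_rate(history):.0%}")
```

Separating "mixed results on one commit" from "fails after a change" keeps retries from hiding real regressions.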

The 30-60-90 day outcome map

Days 0 to 30

- Health baseline and risk map

- Pipeline smoke for two critical user journeys and one core API

- Environment booking rule and seed data pack

- First release confidence report shared with product leads

Days 31 to 60

- API and contract coverage for high risk services

- Component tests for two core modules

- Performance smoke in CI with budgets for key journeys

- Privacy checklist wired into grooming

Days 61 to 90

- UI coverage for top business flows on desktop and mobile

- Flaky test dashboard in place with owners and targets

- Capacity model for the next peak season

- Quarterly service review with goals for the next cycle
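The performance smoke item in days 31 to 60 can start as a gate that compares a high percentile of measured latency per journey against its budget. Here is a minimal sketch using `statistics.quantiles` from the Python standard library. The journey names, budgets, and the choice of p95 are illustrative assumptions.

```python
from statistics import quantiles

# Hypothetical p95 latency budgets per key journey, in milliseconds.
BUDGETS_MS = {"checkout": 800.0, "search": 300.0}


def p95(samples_ms: list[float]) -> float:
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    return quantiles(samples_ms, n=20, method="inclusive")[-1]


def over_budget(measurements: dict[str, list[float]]) -> dict[str, float]:
    """Return journeys whose p95 latency exceeds their budget, with the measured p95."""
    breaches = {}
    for journey, samples in measurements.items():
        budget = BUDGETS_MS.get(journey)
        if budget is None or len(samples) < 2:
            continue  # no budget defined, or too few samples to judge
        observed = p95(samples)
        if observed > budget:
            breaches[journey] = observed
    return breaches


# Illustrative samples from a short CI smoke run.
measurements = {
    "checkout": [420, 510, 480, 950, 470, 1020, 495, 505, 460, 530],
    "search": [120, 140, 135, 150, 160, 128, 131, 145, 152, 138],
}
print(over_budget(measurements))
```

In CI, exit non-zero when `over_budget` returns anything, so a breach fails the build instead of sitting unnoticed in a report.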

Where does automation fit best?

Automation works when it mirrors how the system is used and how it fails. Think in layers.

- Unit and component for fast feedback

- API and contract for integrations and versioning

- UI for critical user journeys only

- Performance for capacity and resilience

- Production checks for availability and smoke
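Production checks for availability and smoke can begin as a list of endpoints, an expected status, and a timeout. Here is a minimal sketch that uses only `urllib.request`. `smoke_check` and `run_smoke` are hypothetical helpers, and you would supply your own health and smoke URLs.

```python
import urllib.error
import urllib.request


def smoke_check(url: str, expected_status: int = 200, timeout_s: float = 5.0) -> tuple[bool, str]:
    """Send one GET and report whether the expected status came back in time."""
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as response:
            status = response.status
    except urllib.error.HTTPError as err:
        status = err.code  # urllib raises HTTPError for 4xx and 5xx responses
    except (OSError, ValueError) as err:
        # OSError covers DNS failures, refused connections, and socket timeouts;
        # ValueError covers malformed URLs.
        return False, f"{url}: unreachable ({err})"
    return status == expected_status, f"{url}: HTTP {status}"


def run_smoke(urls: list[str]) -> bool:
    """Check every URL, print one line each, and return True only if all pass."""
    results = [smoke_check(url) for url in urls]
    for ok, detail in results:
        print("PASS" if ok else "FAIL", detail)
    return all(ok for ok, _ in results)
```

In a pipeline, fail the stage when `run_smoke` returns False. In production, schedule the same call and alert on failure.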

Ground rules:

- Tests must be deterministic and independent

- Suites must run in parallel and finish fast

- Failures must point to owners and logs

- Health of tests matters as much as count of tests

This is the heart of enterprise applications testing services done well.
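The rule that failures must point to owners and logs can be enforced by resolving each failing test path to an owning team, similar in spirit to a CODEOWNERS file. Here is a minimal sketch with longest-prefix matching. The ownership table, team names, and CI log URL pattern are hypothetical.

```python
# Hypothetical ownership table: path prefix -> owning team.
OWNERS = {
    "tests/": "qa-platform",
    "tests/payments/": "payments-squad",
    "tests/payments/refunds/": "refunds-squad",
    "tests/crm/": "crm-squad",
}
# Hypothetical log location pattern; substitute your CI's real artifact URL.
LOG_URL = "https://ci.example.com/builds/{build}/logs/{test}"


def owner_for(test_path: str) -> str:
    """Pick the owner with the longest matching path prefix."""
    matches = [prefix for prefix in OWNERS if test_path.startswith(prefix)]
    return OWNERS[max(matches, key=len)] if matches else "unowned"


def route_failures(build: str, failed_tests: list[str]) -> dict[str, list[str]]:
    """Group failing tests by owner, each with a link to its log."""
    routed: dict[str, list[str]] = {}
    for test in failed_tests:
        link = LOG_URL.format(build=build, test=test)
        routed.setdefault(owner_for(test), []).append(link)
    return routed


print(route_failures("1842", ["tests/payments/refunds/test_partial.py", "tests/crm/test_merge.py"]))
```

An "unowned" bucket that keeps growing is itself a signal that the ownership model needs attention.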

The role of people and process

Tools help, but people and process set the pace. Make it clear who owns what.

- Product leaders own risk priorities

- Engineering owns code quality and fast feedback

- QA owns the system for tests, data, environments, and reporting

- Security partners with QA on threat modeling and controls

- Ops keeps environments stable and observable

Keep meetings short. Use a daily quality standup with a tight agenda. Use a weekly review for trends and blockers.

Budget and planning tips

- Start with the top three user journeys. Expand only when green for two sprints.

- Invest in data and environments early. It saves months later.

- For most apps, keep roughly a 70 to 30 split between API plus component tests and UI tests.

- Tie performance work to real traffic and seasonality.

- Make a small reserve for tool changes and spikes.

Treat these as planning defaults, not rules carved in stone.
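The 70 to 30 default is easy to track from test counts. Here is a minimal sketch that computes the API-plus-component share and flags a suite that drifts UI-heavy. The 5-point tolerance is an illustrative assumption. Only the UI-heavy direction is flagged, because that is the drift the pitfalls above warn about.

```python
def api_component_share(api: int, component: int, ui: int) -> float:
    """Share of API plus component tests among API, component, and UI tests combined."""
    total = api + component + ui
    return (api + component) / total if total else 0.0


def split_status(
    api: int, component: int, ui: int, target: float = 0.70, tolerance: float = 0.05
) -> str:
    """Flag the suite only when it falls below the target share by more than the tolerance."""
    share = api_component_share(api, component, ui)
    if share < target - tolerance:
        return f"UI-heavy: {share:.0%} API+component vs {target:.0%} target"
    return f"OK: {share:.0%} API+component"


print(split_status(api=300, component=250, ui=450))
```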

Final word

Testing at enterprise scale is about flow, not theater. Set clear outcomes, ask for the right artifacts, and keep the loop tight from risk to action. If you need a starting point, run a two-week baseline and a 90-day plan, then expand by business priority. The right partner in enterprise software testing services will meet you there with proofs, not promises. Teams that connect security and automation across build and runtime see faster releases and fewer surprises. That is what you can expect when enterprise applications testing services are done with craft and care.