Agentic AI architecture

“Can I look up your calendar, check traffic, and move the meeting for you?”

A system that asks this is not a simple pipeline. It plans, decides, acts, and then checks whether the world actually changed. That loop is the core reason agentic systems need a different architecture from the classic request-in, response-out stack.

This post explains what changes, why it matters, and how to put it into practice on AWS. I will use Agentic AI architecture with AWS Transform as the thread that ties design choices to real services and reliable delivery.

The short version

Traditional workflows are linear. They assume inputs are stable and that one-shot inference is enough. But as we’ve seen in cloud engineering vs. cloud computing, linear designs often fail to capture the complexity and adaptability that modern systems require. Agentic systems operate in loops. They plan which tools to use, act on external systems, observe side effects, and adjust the plan. That calls for new primitives: memory, tools, multi-step planning, policies, and tight feedback. It also brings new risks: runaway loops, cost spikes, stale memory, and silent failures. The architecture must reflect both.

What changes with agentic systems?

Classic ML stacks optimize for single inference and batch scoring. Agentic systems introduce a closed loop:

- Sense the goal and context.

- Plan a sequence of actions using available tools.

- Act with tool calls that change real systems.

- Observe outcomes and update state.

- Revise the plan or stop.
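The loop above fits in a short, framework-free Python sketch. `plan`, `act`, and `goal_met` are hypothetical callables that you would back with a model call, a tool adapter, and a state check. `MAX_TURNS` is an assumed hard cap, not a recommended value.

```python
from dataclasses import dataclass, field
from typing import Callable, List

MAX_TURNS = 5  # assumed hard cap on loop iterations (runaway-loop guard)


@dataclass
class LoopState:
    goal: str
    observations: List[dict] = field(default_factory=list)
    done: bool = False  # True once the goal is met or the planner has nothing left to do


def run_agent_loop(
    goal: str,
    plan: Callable[[LoopState], List[str]],
    act: Callable[[str], dict],
    goal_met: Callable[[LoopState, dict], bool],
) -> LoopState:
    state = LoopState(goal=goal)               # sense: capture the goal and context
    for _ in range(MAX_TURNS):
        steps = plan(state)                    # plan (or re-plan) from the current state
        if not steps:
            state.done = True                  # the planner chose to stop
            return state
        for step in steps:
            result = act(step)                 # act: a tool call that may change a system
            state.observations.append(result)  # observe: record the outcome
            if goal_met(state, result):
                state.done = True
                return state
        # goal not met after this plan: loop back and revise
    return state                               # cap reached; done stays False
```

The cap keeps a confused planner from looping forever. The caller can tell "finished" from "gave up" by checking `done`.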

This loop depends on components that do not exist in older designs:

- A tooling layer with strict permissions and usage contracts

- Short- and long-term memory that the agent can read and write

- Policy guardrails that gate plans and actions

- Orchestration that can branch, retry, and cancel

- Observability that spans tokens, tools, cost, and outcomes

AI workflow design vs the agent loop

Traditional AI workflow design draws boxes for data intake, preprocessing, model inference, and postprocessing. The agent loop uses the same building blocks but shifts emphasis:

- State is first class. The loop needs a shared state store for goals, plans, intermediate results, and outcomes.

- Tools are products. Each tool must have a contract, quota, and permission scope.

- Policies are online. Safety is not an offline review. It is an always-on gate at plan time and act time.

- Observability is deep. You monitor prompts, tool calls, cost, latency, success criteria, and rollback steps.

These are the architectural differences that influence every choice you make on AWS.

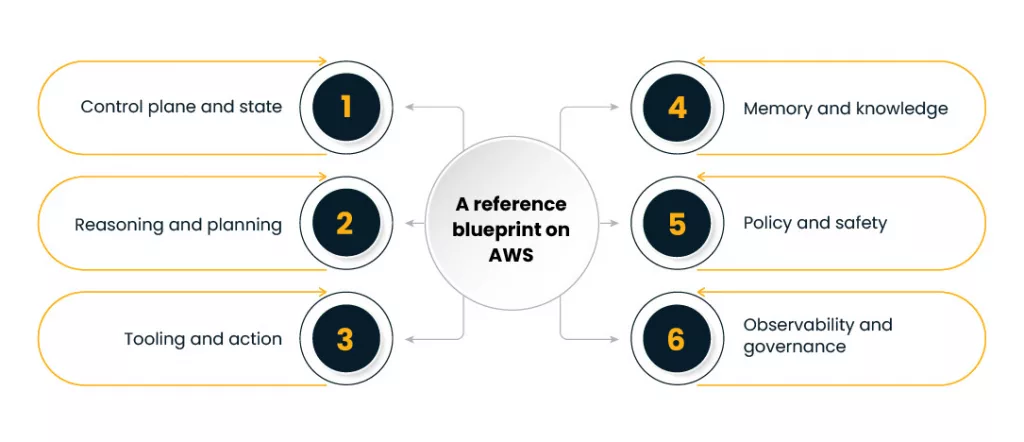

A reference blueprint on AWS

Below is a pragmatic, AWS-native blueprint you can adapt. It grounds the ideas above and keeps day-two operations in focus.

1) Control plane and state

- State store: Amazon DynamoDB for fine-grained, low-latency state. Separate tables for session state, plans, and tool results. Use TTL for cleanup.

- Event fabric: Amazon EventBridge routes agent events like PlanCreated, ToolCalled, ObservationRecorded, LoopTerminated.

- Orchestration: AWS Step Functions Express for the outer loop. It handles retries, branches, and timeouts without custom glue.
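Here is a small helper that makes the event fabric concrete. It builds entries in the shape the EventBridge `PutEvents` API accepts: `Source`, `DetailType`, `Detail` as a JSON string, and `EventBusName`. The source name, bus name, and detail fields are hypothetical. The helper makes no AWS call, so you would pass its output to something like `boto3.client("events").put_events(Entries=[...])`.

```python
import json

# Event types named in the blueprint; source and bus names below are hypothetical.
AGENT_EVENT_TYPES = {"PlanCreated", "ToolCalled", "ObservationRecorded", "LoopTerminated"}
EVENT_SOURCE = "example.agent"   # hypothetical event source
EVENT_BUS_NAME = "agent-events"  # hypothetical custom event bus


def build_agent_event(detail_type: str, session_id: str, turn: int, payload: dict) -> dict:
    """Build one PutEvents entry for an agent loop event."""
    if detail_type not in AGENT_EVENT_TYPES:
        raise ValueError(f"unknown agent event type: {detail_type}")
    detail = {"sessionId": session_id, "turn": turn, **payload}
    return {
        "Source": EVENT_SOURCE,
        "DetailType": detail_type,
        "Detail": json.dumps(detail),  # PutEvents expects Detail as a JSON string
        "EventBusName": EVENT_BUS_NAME,
    }
```

An EventBridge rule can then match on `detail-type` (for example, only `LoopTerminated`) to trigger reporting or cleanup.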

2) Reasoning and planning

- Model access: Amazon Bedrock for foundation models with Bedrock Guardrails to enforce policies on inputs and outputs.

- Planner: A Lambda function invokes Bedrock with a structured prompt and an output schema, so the model returns a tool plan in a predictable shape. You validate the plan against policies before any action runs.

- Prompt assets: Store prompt templates in AWS AppConfig. Version, stage, and roll back like any config.
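Validating the planner's output before anything runs is ordinary parsing. The sketch below is minimal and assumes a hypothetical JSON plan shape with `version`, `steps` (each a `tool` plus `args`), and `estimated_cost_usd`. A real system might use JSON Schema or a typed model library instead of these hand-written checks.

```python
import json
from dataclasses import dataclass
from typing import Set, Tuple


@dataclass(frozen=True)
class PlanStep:
    tool: str
    args: dict


@dataclass(frozen=True)
class Plan:
    version: int
    steps: Tuple[PlanStep, ...]
    estimated_cost_usd: float


def parse_plan(raw: str, known_tools: Set[str], max_steps: int = 5) -> Plan:
    """Turn raw model output into a typed Plan, or raise ValueError."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict) or set(data) != {"version", "steps", "estimated_cost_usd"}:
        raise ValueError("plan must contain exactly: version, steps, estimated_cost_usd")
    if not isinstance(data["version"], int) or not isinstance(data["estimated_cost_usd"], (int, float)):
        raise ValueError("version must be an int and estimated_cost_usd a number")
    steps = data["steps"]
    if not isinstance(steps, list) or not 1 <= len(steps) <= max_steps:
        raise ValueError(f"plan must have between 1 and {max_steps} steps")
    parsed = []
    for step in steps:
        if not (isinstance(step, dict)
                and isinstance(step.get("tool"), str)
                and isinstance(step.get("args"), dict)):
            raise ValueError(f"malformed step: {step!r}")
        if step["tool"] not in known_tools:
            raise ValueError(f"unknown tool in plan: {step['tool']}")  # hallucinated tool
        parsed.append(PlanStep(step["tool"], step["args"]))
    return Plan(data["version"], tuple(parsed), float(data["estimated_cost_usd"]))
```

Rejecting off-schema output here means later stages only ever see a well-formed `Plan`. Policy checks then operate on typed steps rather than free text.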

3) Tooling and action

- Tool registry: DynamoDB table that lists tools, IAM roles, quotas, and input schemas.

- Tool adapters: Each tool is a Lambda function, a container on ECS Fargate, or a service behind API Gateway. A tool has one job, a strict schema, and a least-privilege IAM role.

- Secrets: AWS Secrets Manager for API keys and OAuth tokens with rotation.

- Queues: Amazon SQS for backpressure on high-latency tools.
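A gateway that enforces the registry (the Tool Hub referred to throughout this post) can be small. For clarity, the sketch below keeps the registry in memory, whereas the blueprint keeps it in DynamoDB. `ToolSpec`, its fields, and the per-session quota semantics are hypothetical, and `required_args` stands in for a full input schema.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet, Tuple


@dataclass(frozen=True)
class ToolSpec:
    name: str
    required_args: FrozenSet[str]    # stand-in for a full input schema
    max_calls_per_session: int       # quota
    handler: Callable[[dict], dict]  # the adapter (Lambda, container, or API call in practice)


class ToolHub:
    """Single gateway for tool calls: unknown tools, bad inputs, and over-quota calls fail."""

    def __init__(self, registry: Dict[str, ToolSpec]):
        self.registry = registry                    # in production, loaded fresh each loop
        self.calls: Dict[Tuple[str, str], int] = {}  # (session_id, tool) -> call count

    def call(self, session_id: str, tool: str, args: dict) -> dict:
        spec = self.registry.get(tool)
        if spec is None:
            raise PermissionError(f"unknown tool rejected: {tool}")
        missing = spec.required_args - set(args)
        if missing:
            raise ValueError(f"{tool}: missing args {sorted(missing)}")
        key = (session_id, tool)
        if self.calls.get(key, 0) >= spec.max_calls_per_session:
            raise RuntimeError(f"{tool}: quota exhausted for session {session_id}")
        self.calls[key] = self.calls.get(key, 0) + 1
        return spec.handler(args)
```

Because the planner never calls adapters directly, every check above applies to every call, regardless of what the model proposed.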

4) Memory and knowledge

- Short-term context: DynamoDB items keyed by session ID and turn number.

- Long-term memory:

  - Vector store on Amazon OpenSearch Serverless for retrieval.

  - Graph memory on Amazon Neptune for entities, relations, and tool outcomes.

  - Cold transcripts in Amazon S3 with Lake Formation for fine-grained access.

- Feature access: Amazon Athena to query S3 memory snapshots for analytics.
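Here is a sketch of one short-term memory item. It assumes a hypothetical single-table key design (`pk` for the session, `sk` for the turn) and a TTL attribute named `expires_at`. DynamoDB TTL expects that attribute to hold a Unix epoch timestamp in seconds, and you enable TTL on the table by naming the attribute.

```python
import time
from typing import Optional

SESSION_TTL_SECONDS = 24 * 3600  # assumed retention for short-term context


def turn_item(session_id: str, turn: int, role: str, content: str,
              now: Optional[float] = None) -> dict:
    """Build one short-term memory item for a single loop turn."""
    now = time.time() if now is None else now
    return {
        "pk": f"SESSION#{session_id}",                 # hypothetical partition key: one session
        "sk": f"TURN#{turn:06d}",                      # zero padding keeps lexical order == turn order
        "role": role,                                  # e.g. "planner", "tool", "observation"
        "content": content,
        "expires_at": int(now) + SESSION_TTL_SECONDS,  # TTL attribute: epoch seconds
    }
```

With boto3 you would write the item with `table.put_item(Item=item)`. A `Query` on `pk` returns the session's turns in order because the zero-padded sort key sorts lexically.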

5) Policy and safety

- Guardrails: Bedrock Guardrails for input and output filters.

- Permissions: IAM permission boundaries for each tool. Use service control policies on production accounts.

- Network: Private VPC endpoints for model calls and data stores to avoid public internet.

- Audit: AWS CloudTrail for API activity, with log files kept in S3 under S3 Object Lock so they cannot be altered.

- Rate governors: quota checks in the Tool Hub (the single gateway all tool calls pass through), plus capacity limits set with Application Auto Scaling, so a looping agent cannot exhaust budgets.
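Bedrock Guardrails filter model inputs and outputs, but rules about what a plan is allowed to change live in application code. The sketch below is a minimal plan-time gate. It assumes two hypothetical rules: read-only sessions cannot call write tools, and every calendar must belong to the user's own email domain.

```python
from dataclasses import dataclass
from typing import List, Tuple

WRITE_TOOLS = {"event_update"}  # hypothetical set of tools that change external systems


@dataclass(frozen=True)
class Session:
    user_email: str
    read_only: bool


def check_step(session: Session, tool: str, args: dict) -> List[str]:
    """Return policy violations for one plan step; an empty list means allowed."""
    violations = []
    if session.read_only and tool in WRITE_TOOLS:
        violations.append(f"{tool}: session is read-only")
    domain = session.user_email.rsplit("@", 1)[-1]
    for calendar in args.get("calendars", []):
        if not calendar.endswith("@" + domain):
            violations.append(f"{tool}: calendar {calendar} is outside {domain}")
    return violations


def check_plan(session: Session, steps: List[Tuple[str, dict]]) -> List[str]:
    """Gate the whole plan before any step runs, collecting every violation."""
    return [v for tool, args in steps for v in check_step(session, tool, args)]
```

Collecting all violations instead of failing on the first one makes the rejection easier to explain to the user and to log.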

6) Observability and governance

- Tracing: AWS X-Ray across the full loop including tool adapters.

- Metrics: CloudWatch metrics for tokens, cost, tool latency, and plan success rate.

- Logs: Structured logs with correlation IDs for each loop turn.

- Dashboards: CloudWatch dashboards plus a simple “runbook” panel that shows last plan, tools used, policies triggered, and cost per session.
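Correlated, structured logs are mostly a matter of discipline. The sketch below is minimal and uses hypothetical field names. Every line for a loop turn carries the same `correlationId`, and the JSON is written to stdout, which Lambda forwards to CloudWatch Logs.

```python
import json
import time
import uuid


def new_correlation_id() -> str:
    return str(uuid.uuid4())


def log_turn(correlation_id: str, session_id: str, turn: int, event: str, **fields) -> str:
    """Emit one structured log line; all lines for a loop turn share the correlation ID."""
    record = {
        "ts": round(time.time(), 3),
        "correlationId": correlation_id,
        "sessionId": session_id,
        "turn": turn,
        "event": event,
        **fields,  # e.g. tool name, latency, token counts, cost
    }
    line = json.dumps(record, sort_keys=True)
    print(line)  # in Lambda, stdout lines are delivered to CloudWatch Logs
    return line
```

CloudWatch Logs Insights discovers fields in JSON log events. Filtering on `correlationId` therefore reconstructs a turn across the planner and every tool adapter.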

This is Agentic AI architecture with AWS Transform in practice. You are not wiring raw prompts to endpoints. You are building a system with state, policies, and contracts.

Designing the agent loop step by step

Step 1: Intent and scoping

- Parse goal and constraints.

- Attach identity and entitlements.

- Decide if the loop is allowed to act. Some sessions are read-only.

Why it matters: the loop can only be safe if it knows who is asking, what it may touch, and when to stop.

Step 2: Plan with a contract

- Generate a plan of tools and checks using Bedrock.

- Validate each step against policy rules.

- Store the plan in DynamoDB with a version and a cost estimate.

Pro tip: make “tool selection” a supervised task with a strict schema, and keep the tool catalog small and high-quality.

Step 3: Execute tools

- Call tools through the Tool Hub, never directly from the planner.

- Enforce a timeout on every tool call.

- Capture structured results plus side-channel logs.
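Step Functions Task states accept a `TimeoutSeconds` setting and Lambda functions have their own timeout, so much of this step comes from configuration. When a tool runs in-process, a thread-pool sketch like the one below gives similar behavior. `call_with_timeout` and its result shape are hypothetical.

```python
import concurrent.futures as cf
import time
from typing import Callable

_POOL = cf.ThreadPoolExecutor(max_workers=4)


def call_with_timeout(fn: Callable[[dict], dict], args: dict, timeout_s: float) -> dict:
    """Run a tool adapter with a timeout and always return a structured result."""
    start = time.monotonic()
    future = _POOL.submit(fn, args)
    try:
        output = future.result(timeout=timeout_s)
        return {"status": "ok", "output": output, "elapsed_s": time.monotonic() - start}
    except cf.TimeoutError:
        # The worker thread keeps running; the loop simply stops waiting for it.
        return {"status": "timeout", "output": None, "elapsed_s": time.monotonic() - start}
    except Exception as exc:  # tool errors become data for the observe step
        return {"status": "error", "output": repr(exc), "elapsed_s": time.monotonic() - start}
```

Python cannot kill a running thread, so a timed-out call may still finish in the background. Prefer idempotent tools, or isolate slow tools in their own Lambda or container.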

Step 4: Observe and decide

- Compare expected to actual results.

- If the plan deviates, revise or stop.

- Write observations to memory and emit events.
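Step 4 reduces to comparing expected postconditions with what the tools reported. The sketch below is minimal. It assumes each plan step carries a hypothetical `expected` dict and that the loop has a fixed revision budget.

```python
from enum import Enum


class Decision(Enum):
    CONTINUE = "continue"  # results match: move to the next step
    REVISE = "revise"      # deviation: re-plan
    STOP = "stop"          # deviation persists: stop and report


def decide(expected: dict, actual: dict, revisions_used: int, max_revisions: int = 2) -> Decision:
    """Compare expected postconditions with observed results and choose the next move."""
    mismatched = [k for k, v in expected.items() if actual.get(k) != v]
    if not mismatched:
        return Decision.CONTINUE
    if revisions_used < max_revisions:
        return Decision.REVISE
    return Decision.STOP
```

Making "stop" an explicit outcome keeps a failing plan from silently retrying forever.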

Step 5: Finish and report

- Produce a human-readable summary of steps and outcomes.

- Include links to logs, tickets, or records changed.

This pattern is repeatable. It scales across use cases without rewriting glue.

A concrete example: meeting assistant that can reschedule

Goal: “Move my 2 pm sync to tomorrow morning if all attendees are free for 30 minutes.”

Walkthrough

- Ingress Lambda authenticates the user. It checks that the session is allowed to modify calendars.

- Planner builds a three-step plan: read calendars, find a common slot, update the event. Policies verify that only the user’s domain calendars are in scope.

- Tool A queries calendar availability. Tool B proposes slots. Tool C updates the event using a service account.

- Observation step confirms the event exists in the new slot and that invites were sent.

- Memory stores the outcome and a short summary for future context.
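Once Tool A has returned busy times, Tool B is plain interval arithmetic. The sketch below is minimal. It assumes each attendee's busy intervals arrive as `(start, end)` pairs of naive datetimes in a single time zone. `find_common_slot` is a hypothetical name, not a calendar API.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Optional, Tuple

Interval = Tuple[datetime, datetime]


def find_common_slot(
    busy_by_attendee: Dict[str, List[Interval]],
    window_start: datetime,
    window_end: datetime,
    duration: timedelta,
) -> Optional[datetime]:
    """Earliest start in the window when every attendee is free for `duration`, else None."""
    # Clip busy intervals to the window and merge all attendees into one sorted list.
    busy = sorted(
        (max(s, window_start), min(e, window_end))
        for intervals in busy_by_attendee.values()
        for s, e in intervals
        if e > window_start and s < window_end
    )
    cursor = window_start  # earliest moment not yet known to be busy
    for start, end in busy:
        if start - cursor >= duration:
            return cursor  # the gap before this busy block is long enough
        cursor = max(cursor, end)
    return cursor if window_end - cursor >= duration else None
```

For the goal above, the window would be tomorrow morning and `duration` would be 30 minutes. A `None` result is a clean signal for the observe step to stop and tell the user no slot exists.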

This is where Agentic AI architecture with AWS Transform shines. The plan is explicit. Tools are contained. Logs are complete. Rollback is possible.

Risks and how to handle them

- Runaway loops. Put a hard cap on turns per session and total tools per plan.

- Hallucinated tools. The Tool Hub rejects calls to unknown tools. Keep the catalog in DynamoDB and fetch it fresh each loop.

- Data leakage. Guardrails, IAM scopes, and private VPC endpoints contain data flows.

- Latency spikes. Use SQS between high-latency tools and the orchestrator. Surface partial results back to the user when helpful.

- Cost drift. Add a preflight estimate and a cost ceiling per session. Cancel when the loop crosses the ceiling.
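The preflight estimate and per-session ceiling take only a few lines of arithmetic. The per-1K-token prices below are placeholders, not real Bedrock prices. Look up the actual rates for your model and Region.

```python
from dataclasses import dataclass

# Placeholder prices (USD per 1K tokens); NOT real Bedrock rates.
PRICE_PER_1K_INPUT = 0.003
PRICE_PER_1K_OUTPUT = 0.015


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Preflight estimate for one model call."""
    return input_tokens / 1000 * PRICE_PER_1K_INPUT + output_tokens / 1000 * PRICE_PER_1K_OUTPUT


@dataclass
class Budget:
    ceiling_usd: float
    spent_usd: float = 0.0

    def preflight(self, est_usd: float) -> bool:
        """Allow the next step only if its estimate fits under the ceiling."""
        return self.spent_usd + est_usd <= self.ceiling_usd

    def record(self, actual_usd: float) -> None:
        """Add actual spend; crossing the ceiling cancels the loop."""
        self.spent_usd += actual_usd
        if self.spent_usd > self.ceiling_usd:
            raise RuntimeError("cost ceiling crossed: cancel the loop")
```

Checking before each step catches most overruns cheaply. The check in `record` is the backstop for calls that cost more than estimated.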

These controls keep autonomous agents reliable in production without dulling their value.

How this differs from classic pipelines

Classic systems:

- Fixed order of steps

- Single inference per request

- Minimal state beyond a request ID

- Offline reviews for safety

- Shallow logs

Agentic systems:

- Dynamic plans with branches

- Multiple tool calls per request

- Rich shared state across steps

- Online guardrails gating plans and actions

- Deep, correlated traces of every turn

These architectural differences explain why tried-and-tested batch stacks fall short once actions enter the picture.

Adoption path that works

Start small but keep the blueprint intact. Your target is reliability, not demos.

- One use case. Pick a contained job that writes to a system you own.

- One planner. Keep the planning prompt short and constrained.

- Three tools. Calendar read, slot finder, event writer is a good pattern to copy.

- One memory. Use DynamoDB at first for all state. Bring in vectors and graph later.

- One dashboard. Show plan, tools, policies, and cost per session.

- One rulebook. Document policies in plain language and mirror them in code.

As you scale out, bring in vectors, graph memory, and richer policies. You will notice you are building the same bones again and again. That is good.

This is still Agentic AI architecture with AWS Transform, just stretched across more use cases and teams.

Practices that raise quality fast

- Define a tool contract for each adapter: schema, IAM role, timeout, idempotency notes, and example inputs.

- Separate control plane from data plane. The planner and policies never hold secrets for downstream systems.

- Treat prompts as code. Version, review, and roll back via AppConfig.

- Write postmortems for failed loops the same way you do for outages.

- Add shadow mode. Run the planner but block actions while you study plans and costs.
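Shadow mode can be a switch on the executor rather than a separate code path. The sketch below is minimal and uses hypothetical names. Every call is written to an audit list, and in shadow mode the real tool is never invoked.

```python
from typing import Callable, Dict, List


def make_executor(
    tools: Dict[str, Callable[[dict], dict]],
    shadow: bool,
    audit: List[dict],
) -> Callable[[str, dict], dict]:
    """Build an executor that runs tools for real, or only records them in shadow mode."""

    def execute(tool: str, args: dict) -> dict:
        audit.append({"tool": tool, "args": args, "shadow": shadow})  # always record intent
        if shadow:
            return {"status": "blocked_in_shadow_mode"}  # no side effects
        return tools[tool](args)

    return execute
```

Because the planner and policy gates run unchanged, the audit list shows exactly what the agent would have done. That makes it a good source for studying plans and costs before going live.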

These simple habits keep autonomous agents predictable in busy environments.

Where AWS services fit cleanly

- Reasoning: Bedrock with Guardrails

- Orchestration: Step Functions Express

- Events: EventBridge

- State: DynamoDB

- Tools: Lambda or ECS behind API Gateway, SQS for backpressure

- Memory: OpenSearch Serverless, Neptune, S3

- Security: IAM permission boundaries, Secrets Manager, private endpoints

- Observability: CloudWatch, X-Ray, CloudTrail, Athena for log queries

- Governance: Lake Formation, service control policies

Tie these together with clear boundaries and you get a platform that is flexible and safe. You can evolve models and tools without breaking the loop.

This platform view is a practical lens on Agentic AI architecture with AWS Transform that teams can adopt one slice at a time.

Bringing it together

Think of classic AI workflow design as a conveyor belt: useful, predictable, and easy to audit. Think of an agent as a team member who can plan, act, and learn. The system must manage that freedom with contracts, policies, and feedback, which is why the design tilts toward state, tools, policy gates, and observability.

To recap the main points:

- Agents work in loops. Loops demand state, plans, tools, and policies.

- Tools are products. Give them contracts, quotas, and narrow permissions.

- Memory is layered. Short-term in DynamoDB, long-term in vectors and graphs.

- Safety is online. Guardrails, IAM, and audits operate on every turn.

- Observability is rich. Trace tokens, tools, cost, and outcomes.

- Start small but keep the platform shape intact from day one.

If you adopt this mindset, Agentic AI architecture with AWS Transform feels natural. You are not wiring a one-off demo. You are building a dependable system that plans, acts, and learns without surprises.

One-page checklist for your first agent

- Clear goal and limits

- Planner with a typed plan schema

- Tool Hub with a catalog and IAM scopes

- DynamoDB tables for session, plan, and results

- Guardrails and policy checks at plan and act steps

- EventBridge events for each turn

- CloudWatch dashboard for cost, latency, and success rate

- Runbook with rollback steps

Follow this, and the architectural differences will work for you rather than fight you.

Final note

Agentic systems are not just bigger LLMs. They are different systems with new moving parts that touch the real world. Design for that reality and the results will hold up under real traffic and real users. Done right, Agentic AI architecture with AWS Transform becomes a repeatable pattern your team can apply across domains without starting from scratch each time.