The fastest way to make AI useful is to treat it like a team. Give each agent a clear job, pass small signals between them, and keep the system honest with events.

This guide shows how to design multi-agent workflows with AWS Bedrock for real work. We will define collaboration patterns, map them to Bedrock features, and use events to drive timing and reliability. Most of this is battle-tested on production-style problems where traceability and cost control matter.

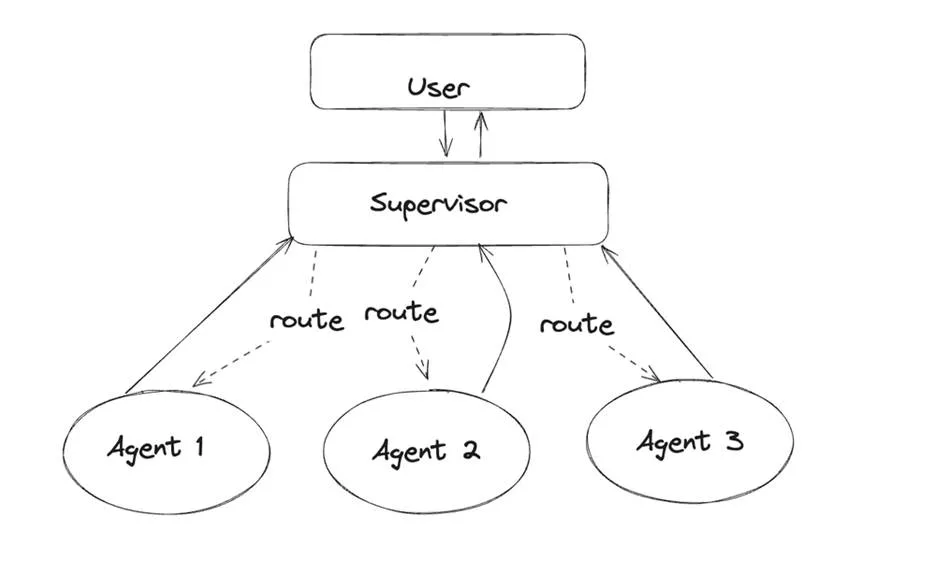

What multi-agent collaboration really means

Think about a service desk. One person triages. Another investigates. A third fixes the issue. The last one confirms the fix and writes a note to the customer. Multi-agent collaboration follows the same idea.

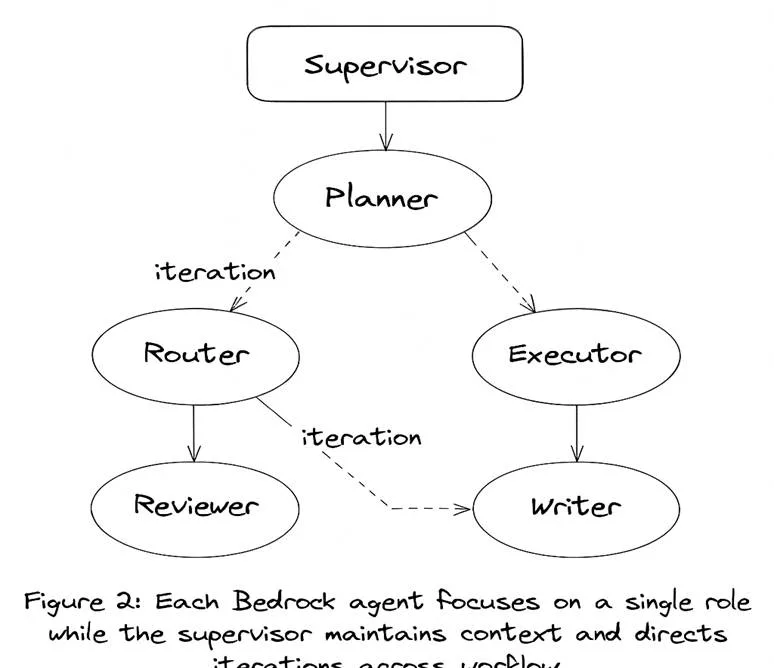

In practice, you split the work into focused roles:

- A Planner turns a request into tasks.

- A Router assigns tasks by type or skill.

- A Researcher gathers facts from approved sources.

- An Executor calls tools and APIs.

- A Reviewer checks policy and quality.

- A Writer prepares the final package.

Short messages keep everyone in sync. Instead of passing long paragraphs between agents, pass compact, typed events that carry only what the next agent needs.

Why this works

Specialization creates predictable outputs. Failures are contained. Steps can run in parallel. Audits are simple because each step has a clear owner and a traceable result. These properties are the reason multi-agent workflows with AWS Bedrock are practical in enterprises, not just demos.

Collaboration at a glance

The role of Bedrock in coordination

Amazon Bedrock gives you a consistent way to run agents, select models, apply guardrails, query knowledge, and call tools. Treat Bedrock as the “brains” for each role and keep everything else thin.

How to map roles to Bedrock

- Agents for Bedrock host each role with function calling and tool use. Give every agent a very small tool set. Fewer tools, better accuracy.

- Knowledge Bases for Bedrock serve curated content with filters for freshness and ownership. Capture citations so the Reviewer can verify claims.

- Guardrails apply policy, PII controls, and safety checks. Scope them per role instead of setting one global rule.

- Prompt versions live in versioned storage such as S3 and carry change history. This allows safe rollbacks when outputs drift.

Model choices

Use a fast, cost-efficient model for the Router. Use a higher-capacity model for planning and writing. Keep token budgets per role, not per request. That makes cost visible and easy to tune.
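One way to make per-role budgets concrete is a small lookup table that each adapter consults after a call. The sketch below is only an illustration: the tier labels, the token numbers, and the `RoleBudget` and `within_budget` names are assumptions, not Bedrock settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoleBudget:
    model_tier: str       # hypothetical label, not a Bedrock model ID
    max_tokens_in: int
    max_tokens_out: int


# Illustrative numbers only; tune them from your own usage data.
ROLE_BUDGETS = {
    "router": RoleBudget("fast", 1_000, 200),
    "planner": RoleBudget("high-capacity", 4_000, 1_500),
    "writer": RoleBudget("high-capacity", 6_000, 3_000),
}


def within_budget(role: str, tokens_in: int, tokens_out: int) -> bool:
    """Return True if a single call stayed inside the budget for its role."""
    budget = ROLE_BUDGETS[role]
    return tokens_in <= budget.max_tokens_in and tokens_out <= budget.max_tokens_out
```

Keeping the table in one place means a cost review is a diff on a few numbers rather than a hunt through prompts.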

How do the Bedrock agents fit together?

The flow is an iterative loop, not a straight line. A supervisor may request a revision when the Reviewer flags an issue. The agents keep talking through compact messages, not prose dumps. That single choice keeps systems stable and easier to test.

Use event triggers to run the show

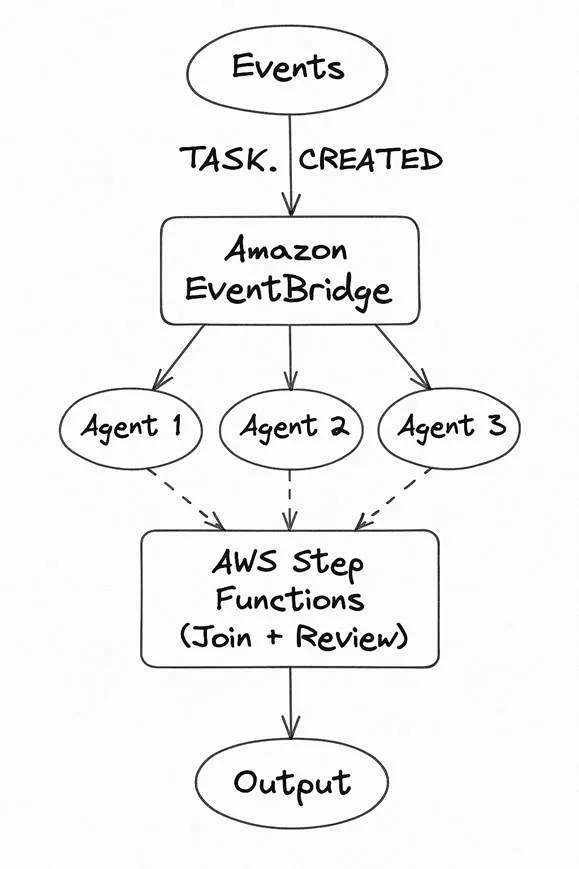

Multiple agents raise a timing question. Who acts next, and when? The clean answer is events. Let small, typed events drive the flow and let agents subscribe to what they care about.

Core idea

- A request arrives and becomes REQUEST.CREATED.

- The Planner consumes that event and emits PLAN.CREATED.

- The Router subscribes to PLAN.CREATED and assigns tasks. The Researcher and Executor run in parallel and each emits TASK.DONE.

- The Reviewer waits for the required signals. After checks pass, it emits REVIEW.DONE.

- The Writer creates the final output and emits PACKAGE.READY.

Event triggers keep everything loosely coupled while preserving order where needed. This pattern also fits the cloud well because each step can scale on its own.
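The event chain above can be tried locally before any AWS resources exist. The following is a minimal in-memory sketch, not EventBridge: `LocalBus` and `run_demo` are hypothetical names, the two workers are assumed to be a researcher and an executor, and handlers run one after another rather than truly in parallel.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class LocalBus:
    """Tiny in-memory stand-in for an event bus, for illustration only."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)
        self.log: list[str] = []  # event types in the order they were published

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        self.log.append(event_type)
        for handler in list(self._handlers[event_type]):
            handler(payload)


def run_demo() -> list[str]:
    bus = LocalBus()
    finished: set[str] = set()

    def planner(evt: dict) -> None:
        bus.publish("PLAN.CREATED", {"tasks": ["research", "execute"]})

    def worker(name: str) -> Handler:
        def handle(evt: dict) -> None:
            bus.publish("TASK.DONE", {"agent": name})
        return handle

    def reviewer(evt: dict) -> None:
        # Wait for both required signals before approving.
        finished.add(evt["agent"])
        if finished == {"researcher", "executor"}:
            bus.publish("REVIEW.DONE", {"approved": True})

    def writer(evt: dict) -> None:
        bus.publish("PACKAGE.READY", {})

    bus.subscribe("REQUEST.CREATED", planner)
    bus.subscribe("PLAN.CREATED", worker("researcher"))
    bus.subscribe("PLAN.CREATED", worker("executor"))
    bus.subscribe("TASK.DONE", reviewer)
    bus.subscribe("REVIEW.DONE", writer)
    bus.publish("REQUEST.CREATED", {"request": "demo"})
    return bus.log
```

Calling `run_demo()` returns the event types in publication order, which is a cheap way to check that the Reviewer only fires once both workers have reported.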

Event-driven orchestration

This is the sweet spot between simple choreography and strict control. EventBridge rules handle routing and filtering, while Step Functions handles joins, compensation, and timeouts. The result is reliable AI orchestration that remains easy to extend.
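To show what rule-based routing looks like, here is a sketch of an EventBridge-style event pattern with a deliberately simplified local matcher. The source name `agents.planner` is an assumption, and `matches` covers only exact-value lists and nested objects, not the full EventBridge matching rules.

```python
import json

# Pattern for a rule that forwards version 1.0 PLAN.CREATED events.
PLAN_CREATED_PATTERN = {
    "source": ["agents.planner"],       # hypothetical source name
    "detail-type": ["PLAN.CREATED"],
    "detail": {"version": ["1.0"]},
}


def matches(pattern: dict, event: dict) -> bool:
    """Simplified matcher: every pattern key must exist and match exactly."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


# The serialized pattern is what a rule definition would carry.
PATTERN_JSON = json.dumps(PLAN_CREATED_PATTERN)
```

A string like `PATTERN_JSON` is the kind of value that would be supplied as the event pattern when creating a rule; a local matcher like this is mainly useful for unit-testing patterns before deploying them.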

Reference architecture you can ship

Control plane

Use Amazon EventBridge as the event bus. Keep event types clear and versioned. Use AWS Step Functions where ordering or joins matter. Lambda adapters connect events to Bedrock calls. CloudWatch and X-Ray provide metrics and traces.

Data and AI plane

Bedrock hosts the agents and the knowledge layer. DynamoDB stores correlation IDs, state, and idempotency keys. S3 stores artifacts and prompt versions. API Gateway exposes a clean edge for clients and partners. This layout gives you clean AWS integration with upstream and downstream systems.
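Idempotency keys in DynamoDB usually rely on a conditional write: the first writer wins and duplicates are rejected. The sketch below only builds the request arguments, so it runs without AWS access. It assumes `idempotency_key` is the table's partition key, and the table name `agent_runs` and the TTL attribute `expires_at` are hypothetical.

```python
import time
from typing import Optional


def build_claim_request(
    table: str,
    idempotency_key: str,
    correlation_id: str,
    ttl_seconds: int = 86_400,
    now: Optional[int] = None,
) -> dict:
    """Build keyword arguments for a DynamoDB put_item conditional write."""
    now = int(time.time()) if now is None else now
    return {
        "TableName": table,
        "Item": {
            "idempotency_key": {"S": idempotency_key},
            "correlation_id": {"S": correlation_id},
            # Epoch seconds; assumed to be configured as the table's TTL attribute.
            "expires_at": {"N": str(now + ttl_seconds)},
        },
        # Fails if an item with this key already exists.
        "ConditionExpression": "attribute_not_exists(idempotency_key)",
    }
```

With boto3 installed, a dict like this could be passed as `client.put_item(**request)`; a duplicate delivery should then fail the condition check, which the adapter can treat as "already processed, skip".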

Event shapes

Keep payloads compact:

    {
      "type": "TASK.DONE",
      "version": "1.0",
      "correlation_id": "a1b2c3",
      "idempotency_key": "task-42-attempt-1",
      "agent": "researcher",
      "payload": {
        "task_id": "42",
        "facts": [
          {"source": "kb://pricing/2025-Q4", "key": "SKU-123", "value": "Support included"}
        ]
      }
    }

Small, typed messages travel further with fewer surprises.
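To publish an envelope like this, an adapter has to wrap it in the entry shape that EventBridge's PutEvents call expects. The sketch below shows one possible mapping; the bus name `agents-bus` and the `agents.<role>` source convention are assumptions.

```python
import json


def to_put_events_entry(event: dict, bus_name: str = "agents-bus") -> dict:
    """Map an agent event envelope to a single PutEvents entry."""
    return {
        "EventBusName": bus_name,                  # hypothetical bus name
        "Source": f"agents.{event['agent']}",      # assumed naming convention
        "DetailType": event["type"],
        # Detail must be a JSON string; compact separators keep it small.
        "Detail": json.dumps(event, separators=(",", ":")),
    }


EXAMPLE_EVENT = {
    "type": "TASK.DONE",
    "version": "1.0",
    "correlation_id": "a1b2c3",
    "idempotency_key": "task-42-attempt-1",
    "agent": "researcher",
    "payload": {"task_id": "42", "facts": []},
}
```

With boto3, the entry would typically be sent as `boto3.client("events").put_events(Entries=[entry])`. Keeping the mapping in a pure function makes it easy to test without network calls.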

A concrete use case: automated RFP triage

Let’s walk through an end-to-end flow an enterprise team might run.

- A PDF arrives in an S3 inbox and posts RFP.CREATED.

- The Planner reads the summary and creates a work plan.

- The Router assigns tasks to Researcher and Executor.

- The Researcher pulls pricing and reference wins from a Bedrock knowledge base.

- The Executor calls internal APIs to create a solution outline.

- The Reviewer checks legal clauses, risk, and pricing rules.

- The Writer composes a first draft with citations and open items.

Every step emits events the next step understands. You can replay the entire run by re-posting the same event sequence. That makes audits simple and short.

This approach illustrates why multi-agent workflows with AWS Bedrock fit high-stakes content. Each role is narrow. Each output is traceable. Edits remain localized.

Signature practice: signals over prose

A common failure mode is passing paragraphs between agents. The next agent has to guess what the last one meant, and errors pile up.

Adopt a strict “signals over prose” contract:

- Each agent returns a small JSON object that follows a named schema and version.

- The adapter validates against JSON Schema.

- Only valid outputs produce new events.

- If validation fails, route to a small “fixup” prompt or a human review queue.

This single habit raises accuracy and cuts cost. It is the most valuable practice you can add to multi-agent workflows with AWS Bedrock.
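The contract can be sketched as a validation gate in the adapter. To stay dependency-free, the example below uses a plain required-field and type check as a stand-in for real JSON Schema validation; in practice a JSON Schema library would take its place. The schema contents and the route names `emit_event` and `fixup_queue` are assumptions.

```python
import json

# Required field -> expected Python type, for one named schema and version.
TASK_DONE_V1 = {
    "type": str,
    "version": str,
    "correlation_id": str,
    "idempotency_key": str,
    "agent": str,
    "payload": dict,
}


def validate(raw: str, schema: dict) -> tuple[bool, list[str]]:
    """Parse agent output and check it against a simple field/type schema."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return False, [f"not JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return False, ["top level must be an object"]
    errors = [
        f"missing or wrong type: {name}"
        for name, expected in schema.items()
        if not isinstance(data.get(name), expected)
    ]
    return not errors, errors


def route(raw: str) -> str:
    """Only valid outputs become events; everything else goes to fixup."""
    ok, _ = validate(raw, TASK_DONE_V1)
    return "emit_event" if ok else "fixup_queue"
```

The error list returned by `validate` is worth keeping: it can be fed into the fixup prompt so the retry knows exactly which field was wrong.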

Build plan you can finish in a week

Day 1

Pick one workflow with clear value, like RFP triage or sales quote assembly. Define three events only: a CREATED event (for example RFP.CREATED), TASK.DONE, and a READY event (for example PACKAGE.READY).

Day 2

Stand up EventBridge, two rules, and a dead-letter queue. Create a DynamoDB table with correlation_id and TTL.

Day 3

Create two Bedrock agents: Router and Writer. Keep prompts short. Add one tool each.

Day 4

Add a knowledge base with 20 to 50 clean documents. Tag them with freshness and owner. Wire the Researcher.

Day 5

Introduce Step Functions for the join between Researcher and Executor. Add timeouts and retries.
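A join of this kind maps naturally to a Parallel state in Amazon States Language, which waits for all branches before moving on. The definition below is a sketch built as a Python dict: the Lambda ARNs are placeholders, and the timeout and retry values are illustrative assumptions.

```python
import json


def branch(name: str, lambda_arn: str) -> dict:
    """One Parallel branch: a single Task with a timeout and retries."""
    return {
        "StartAt": name,
        "States": {
            name: {
                "Type": "Task",
                "Resource": lambda_arn,
                "TimeoutSeconds": 120,
                "Retry": [{
                    "ErrorEquals": ["States.TaskFailed", "States.Timeout"],
                    "IntervalSeconds": 2,
                    "MaxAttempts": 3,
                    "BackoffRate": 2.0,
                }],
                "End": True,
            }
        },
    }


# Placeholder ARNs; replace REGION and ACCOUNT_ID with real values.
ARN = "arn:aws:lambda:REGION:ACCOUNT_ID:function:{}"

STATE_MACHINE = {
    "Comment": "Join Researcher and Executor, then hand off to the Reviewer",
    "StartAt": "ResearchAndExecute",
    "States": {
        "ResearchAndExecute": {
            "Type": "Parallel",
            "Branches": [
                branch("Researcher", ARN.format("researcher-adapter")),
                branch("Executor", ARN.format("executor-adapter")),
            ],
            "Next": "Review",
        },
        "Review": {
            "Type": "Task",
            "Resource": ARN.format("reviewer-adapter"),
            "End": True,
        },
    },
}

DEFINITION_JSON = json.dumps(STATE_MACHINE, indent=2)
```

The Parallel state passes an array with one result per branch to the next state, so the Reviewer adapter receives both outputs together.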

Day 6

Add the Reviewer with policy checks and a simple approval step.

Day 7

Run a game day. Break a tool, bump an event version, and hit rate limits. Fix what fails. Now you have a strong baseline.

Observability and safety from day one

- Metrics: log latency_ms, tokens_in, tokens_out, and cost_usd per role.

- Tracing: attach correlation_id to every span.

- Dashboards: group by event type and role to find hotspots.

- Security: scope IAM to the minimum set for each agent. Store prompts and schemas in S3 with versioning and encryption.

- Data controls: set Guardrails per agent. Use redaction for sensitive fields.

These steps pay off the first time something goes wrong.
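As a starting point for the metrics above, each adapter can emit one structured record per agent call. This is a sketch under assumptions: the per-token prices are made up for illustration, and `metric_record` is a hypothetical helper, not part of any AWS SDK.

```python
import json

# Hypothetical prices in USD per 1,000 tokens; use your model's real rates.
PRICE_PER_1K = {"in": 0.003, "out": 0.015}


def metric_record(
    role: str,
    correlation_id: str,
    started: float,
    finished: float,
    tokens_in: int,
    tokens_out: int,
) -> dict:
    """Build one metrics record for a single agent call."""
    cost = (
        tokens_in / 1000 * PRICE_PER_1K["in"]
        + tokens_out / 1000 * PRICE_PER_1K["out"]
    )
    return {
        "role": role,
        "correlation_id": correlation_id,
        "latency_ms": round((finished - started) * 1000),
        "tokens_in": tokens_in,
        "tokens_out": tokens_out,
        "cost_usd": round(cost, 6),
    }


def emit(record: dict) -> str:
    """Serialize as a single JSON line, suitable for a log stream."""
    return json.dumps(record, sort_keys=True)
```

Printing the output of `emit` from a Lambda function is one common way to get structured lines into CloudWatch Logs, where they can be grouped by role and event type.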

Cost and latency playbook

- Use smaller models for routing and classification.

- Cache retrieval results per correlation so repeated steps are cheap.

- Cap tokens per agent. Alert on cost per correlation when it crosses a threshold.

- Batch reviews when possible to reduce token churn.

- Prefer short prompts with concrete examples. They are cheaper and more stable.

This is where events help again. If a step is expensive, you can introduce a queue, throttle it, or move it to a different region without touching the rest of the system.
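Two items from the playbook, caching retrieval per correlation and alerting on cost per correlation, fit in one small helper. The sketch below keeps state in memory for clarity; a real deployment would more likely keep it in a shared store. `RunLedger` and its threshold are hypothetical.

```python
from typing import Callable


class RunLedger:
    """Per-correlation retrieval cache and running cost total (in memory)."""

    def __init__(self, cost_alert_usd: float) -> None:
        self.cost_alert_usd = cost_alert_usd
        self._cache: dict[tuple[str, str], list] = {}
        self._cost: dict[str, float] = {}

    def retrieve(
        self, correlation_id: str, query: str, fetch: Callable[[str], list]
    ) -> list:
        """Call fetch once per (correlation, query); reuse the result after."""
        key = (correlation_id, query)
        if key not in self._cache:
            self._cache[key] = fetch(query)
        return self._cache[key]

    def add_cost(self, correlation_id: str, cost_usd: float) -> bool:
        """Record cost and return True when the run crosses the alert threshold."""
        total = self._cost.get(correlation_id, 0.0) + cost_usd
        self._cost[correlation_id] = total
        return total > self.cost_alert_usd
```

Scoping the cache key to the correlation ID means a retried step is cheap, while a new request still gets fresh retrieval results.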

Pitfalls and how to avoid them

- Prompt drift: outputs change over time. Keep golden tests for each role and fail fast.

- Tool drift: an API changed without notice. Version your tool schemas and validate on every call.

- Event storms: a rule fans out too far. Use quotas, SQS buffers, and backpressure.

- Stale knowledge: retrieval returns old facts. Tag content with freshness and owner, then filter at query time.

- Invisible failures: missing metrics or traces. Add them before you scale.

Fix these early and the rest of the build stays calm.
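For prompt drift in particular, golden tests can be as simple as a list of fixed inputs with expected outputs that runs on every prompt change. The sketch below uses a stub in place of a real Router agent call so it runs offline; the cases, the labels, and `stub_router` are all illustrative assumptions.

```python
from typing import Callable

# Fixed inputs with the route each one is expected to produce.
GOLDEN_CASES = [
    {"input": "Invoice total looks wrong", "expected": "billing"},
    {"input": "Cannot log in after password reset", "expected": "access"},
]


def run_golden(route: Callable[[str], str], cases: list[dict]) -> list[str]:
    """Return a description of every case whose output drifted."""
    failures = []
    for case in cases:
        actual = route(case["input"])
        if actual != case["expected"]:
            failures.append(
                f"{case['input']!r}: expected {case['expected']}, got {actual}"
            )
    return failures


def stub_router(text: str) -> str:
    """Stand-in for a real Router agent call, so the sketch runs offline."""
    return "billing" if "invoice" in text.lower() else "access"
```

An empty list from `run_golden` means the role still behaves as recorded. Any entry is a reason to stop the rollout and compare against the previous prompt version.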

Where does this fit in your stack?

The pattern plugs into CRMs, ticketing tools, document stores, and data platforms with little friction. Publishing event schemas creates clean AWS integration across teams and partners. As adoption grows, split event namespaces by domain and keep version policies strict.

When you need to branch into new use cases, keep the same backbone. Add roles one by one. Reuse schemas. Extend your dashboards. This is how multi-agent workflows with AWS Bedrock mature from one pilot to a repeatable platform.

Closing thoughts

Real impact comes from small, reliable parts working together. Bedrock gives you the agent layer. Events give you timing and control. Put them together and you get a system that plans, acts, and checks its own work. Start with one use case. Define tiny events. Keep prompts short. Validate every output. With these habits, multi-agent workflows with AWS Bedrock become a dependable pattern you can run at scale.