Generative AI keeps showing new ways to reshape work, but turning those ideas into real tools can feel overwhelming.

Companies need clear, practical methods that bring AI workflows with AWS Bedrock into production. That means avoiding complex infrastructure, picking the right models fast, and scaling without overpaying.

This blog walks through how exactly teams set up AI workflows with AWS Bedrock, pick from the right foundation models, and build scalable AI systems that work in the real world—powered by cloud for AI infrastructure that ensures performance and scalability.

What is AWS Bedrock?

AWS Bedrock opens the door to AI workflows with AWS Bedrock by giving direct access to major foundation models like Titan, Claude, and more—all via one API. There’s no need to manage servers. It’s meant to help teams build scalable AI tools quickly and efficiently.

What Makes AWS Bedrock Worth Considering?

AWS Bedrock is designed for teams that want to move fast without managing backend complexity. Its biggest strengths come from how much it simplifies day-to-day AI development.

Here’s what sets it apart:

Direct access to top-tier foundation models

You can run models like Claude 3 (Anthropic), Llama 2 (Meta), Titan (Amazon), Mistral 7B (Mistral AI), Stable Diffusion (Stability AI), and Command R (Cohere) — all from a single API, without setting up infrastructure.

Supports scalable AI by default

Workloads automatically scale based on demand. Whether you’re handling a few prompts or thousands, Bedrock allocates compute in the background, so there’s no manual capacity planning.

Multiple usage modes for flexibility

Choose from:

- On-Demand Inference – Pay per request (tokens or images), suited for real-time applications.

- Batch Inference – Upload large datasets for cost-efficient processing.

- Provisioned Throughput – Reserve model capacity for predictable high-usage needs.

- Built-in operational controls

Each request runs within a secure AWS environment with logging, KMS integration for encryption, VPC support, and fine-grained IAM permissions. You get observability and compliance without extra setup.

Which Foundation Models Can You Use—And What Are They Good For?

Let’s make this simple. Foundation models are large, pre-trained models built to handle different tasks like answering questions, writing text, generating images, summarizing content, or even combining multiple types of input (like text + images). With AWS Bedrock, you get access to a solid lineup — and you don’t need to worry about infrastructure or switching between tools.

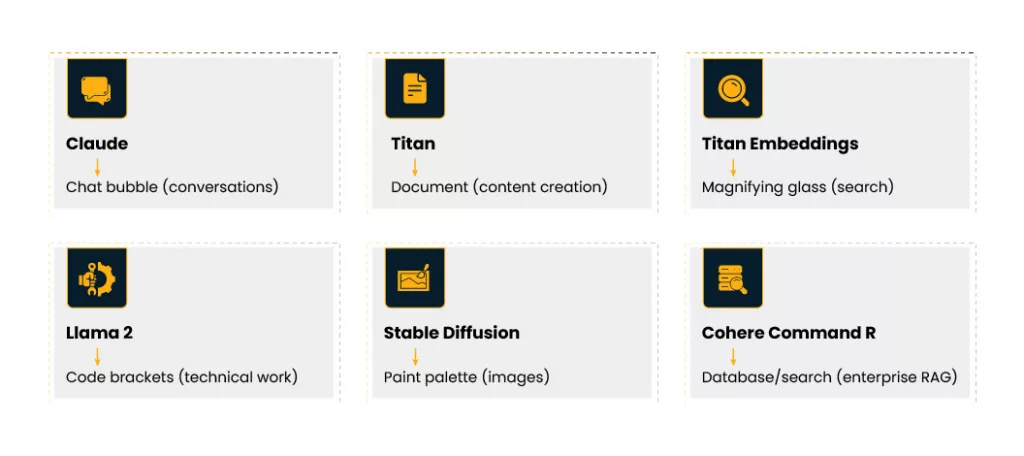

Here are a few well-known models available on Bedrock and what they’re typically used for:

Claude 3 (Anthropic)

Great for natural conversations, customer support, reasoning, and summarization. Known for being accurate and helpful in business scenarios.

Amazon Titan Text

Performs well in content generation, rewriting, and prompt-based document creation. It’s optimized for fast processing and privacy-friendly usage within AWS.

Amazon Titan Embeddings

Often used for search, ranking, and classification workflows. Pairs well with Retrieval-Augmented Generation (RAG) applications.

Meta Llama 2 (70B)

An open-weight model, which is good for coding, logic-based tasks, and long-form content. Supports more technical use cases.

Mistral 7B and Mixtral 8x7B

Known for low-latency performance, often used in cost-sensitive environments that still need smart outputs.

Stable Diffusion XL (Stability AI)

Designed for image generation based on detailed prompts. Useful for creative tasks, product visuals, and design automation.

Cohere Command R+

A strong model for retrieval-augmented generation and enterprise search use cases.

Claude Haiku and Sonnet

Lighter and faster versions of Claude 3. Ideal for chatbots and real-time use where low latency matters.

You can switch between these models without rebuilding your app from scratch. That’s one of the key strengths of AI workflows with AWS Bedrock — you get options, and you can try what works best for your task without being locked into one model.

How Does Bedrock Work with Other AWS Tools?

Bedrock connects smoothly with AWS services to complete end-to-end processes:

| Tool | Purpose |

| Amazon S3 | Store and fetch prompts, outputs, and data |

| AWS Lambda | Trigger logic, pre-process inputs |

| API Gateway | Expose Bedrock-powered APIs |

| SageMaker | Fine-tune or compare models |

You build a whole workflow that grabs input, runs AI workflows with AWS Bedrock, and returns results—with no separate infra to manage.

What Are the Deployment Options for Running at Scale?

Running models efficiently is just as important as choosing the right one. With AWS Bedrock, you’re not locked into a single way of operating. It supports multiple deployment paths, so you can pick what fits your workload and cost model.

Here are the three core options:

On-Demand Inference

This is the most flexible choice. You pay based on how much you use — usually by token or image generation. It’s ideal for applications with unpredictable traffic or for early-stage experimentation. You don’t have to commit to any long-term resource allocation, which makes it cost-effective for teams testing out ideas or running occasional AI features.

Batch Inference

If you need to process large volumes of inputs — like generating product descriptions in bulk or summarizing documents — batch mode is the better fit. You submit a structured file (usually JSON or CSV) with prompts and get the output file once processing is done. According to AWS, batch inference can cost up to 50% less than on-demand for supported models. It’s perfect for scheduled jobs that don’t need real-time results.

Provisioned Throughput

For constant, high-volume applications — like live chatbots or AI-driven search — provisioned throughput lets you reserve capacity ahead of time. This guarantees consistent speed and removes the need to scale reactively. You pay a fixed hourly rate, but the predictability is worth it for many production systems.

These options give teams the flexibility to build scalable AI workflows that match their product needs and budget.

Real-World Example: Smarter Search with AWS Bedrock

A global digital asset platform handling over 175 million files recently improved its content search system using AI workflows with AWS Bedrock. The team integrated Amazon Titan Multimodal Embeddings to enable visual and text-based search across massive libraries.

Here’s how it works:

- When users enter a query (text or image), the system converts it into a vector.

- It then compares that vector with pre-processed image vectors to find relevant matches.

- Results are based on both visual and contextual similarity.

The new setup led to measurable gains:

- Search time dropped by 75%, helping teams find assets faster

- 50% more relevant options appeared per search, improving discoverability

The API-based setup in Bedrock made it quick to test and easy to scale, with no extra infrastructure overhead. Since the model runs inside the customer’s secure AWS environment, data stays private and isn’t used to train the model.

This is a clear example of how scalable AI with Bedrock can solve specific, high-volume problems without complexity.

What If Your Team Doesn’t Have AI Expertise?

Lack of deep AI skills slows many teams. Bedrock helps here too:

- Tools from AWS GenAI like CodeWhisperer and Bedrock Studio simplify building workflows

- Visual workflows using Flows let you drag-and-drop prompts, models, data sources, and logic

- Safe defaults, guardrails, and pre-set flows make complex tasks readable and manageable

- If the use case is complex or high stakes, speaking with a solution architect or AWS partner can speed things up. Many providers offer short discovery sessions or implementation help.

These tools lower the barrier so that AI workflows with AWS Bedrock feel accessible, not intimidating.

What to Do Next?

If you want to see a live demo before jumping in, our webinar offers that chance. Speakers break down AI workflows with AWS Bedrock, show models in action, and run an end-to-end example. It’s a low-commitment way to learn what’s possible. The talk gives you the confidence to try building scalable AI systems on your own.