Your team can ship a proof-of-concept agent in a week. Operating it for a year is the hard part.

Agent teams soon learn that the tricky work sits after the demo: grounding, tool orchestration, safety checks, monitoring, and ongoing updates. Costs creep in. So does risk. That is why many teams consider Agentic AI as a Service.

Instead of assembling every piece in-house, you subscribe to a managed stack that handles the messy plumbing while your team focuses on business logic and outcomes.

This post gives you a clear decision path. First, assess your internal capabilities. Next, weigh custom builds against managed services, with their real trade-offs. Then review specific situations where a service model wins.

What “agentic” really means in practice

Agent systems do more than answer questions. They perceive state, decide next steps, call tools, check results, and try again when needed. They need:

- Planning and decomposition

- Tool and API calling with retries

- Memory and context management

- Policy, safety, and audit trails

- Observation of outcomes and feedback loops

- Runbooks for failure and escalation

Agentic AI as a Service packages these building blocks. You get opinionated defaults, service guarantees, and governance. You still design goals, tools, and guardrails. You stop reinventing scaffolding.
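The loop described above (plan, call a tool, check the result, retry, escalate) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any provider's runtime: `run_agent`, `StepResult`, `AgentRun`, and the `plan` and `tools` arguments are hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class StepResult:
    ok: bool
    output: str


@dataclass
class AgentRun:
    goal: str
    trace: List[str] = field(default_factory=list)  # audit trail of every attempt


def run_agent(
    goal: str,
    plan: Callable[[str], List[str]],            # planning and decomposition
    tools: Dict[str, Callable[[], StepResult]],  # tool and API calling
    max_retries: int = 2,
) -> AgentRun:
    """Run each planned step, retrying failed tool calls and escalating when stuck."""
    run = AgentRun(goal=goal)
    for step in plan(goal):
        tool = tools.get(step)
        if tool is None:
            run.trace.append(f"{step}: escalate (no tool registered)")
            break
        # One initial attempt plus up to max_retries retries.
        for attempt in range(1, max_retries + 2):
            result = tool()
            run.trace.append(f"{step}: attempt {attempt} ok={result.ok}")
            if result.ok:
                break
        else:
            # The inner loop never succeeded, so stop and hand off to a human.
            run.trace.append(f"{step}: escalate (retries exhausted)")
            break
    return run
```

Even this toy version needs a trace and an escalation path. Memory, policy checks, and cost tracking are the parts a managed stack would typically add on top.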

Step 1: Evaluate internal capabilities

Start with a candid score. If your team cannot show the evidence in more than two rows, plan for a service approach first.

Agent Readiness Scorecard

| Area | Evidence you have it | If missing, prefer service |
| --- | --- | --- |
| Tooling discipline | Stable internal APIs, versioned SDKs, contract tests | Vendor handles connectors, throttling, backoff |
| Safety and policy | Red team playbooks, PII controls, trace logs | Built-in policy engine, masking, and audit trails |
| Observability | Live traces, cost per task, failure heatmaps | Prebuilt runs, spans, and cost monitors |
| Reliability | On-call rotation, SLOs, runbooks | Managed runtime with error budgets and autoscaling |
| Data privacy | Clear data zones, KMS, DLP | Provider with regional isolation and keys you control |
| MLOps | Prompt/version registry, evals, rollbacks | Hosted registries, eval suites, canary tooling |
| Procurement speed | Fast vendor onboarding | Start managed, revisit build later |

If you are already excellent across these areas, building can be a good fit. If not, a service model cuts risk and time.
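If you want the scorecard to be repeatable across teams, it can be reduced to a small function. The sketch below assumes a row counts as a gap when you cannot point to the evidence listed for it, and applies the "more than two rows" threshold from the text. The names `AREAS` and `recommend` are illustrative.

```python
from typing import Dict, List, Tuple

# Rows of the Agent Readiness Scorecard.
AREAS: List[str] = [
    "Tooling discipline",
    "Safety and policy",
    "Observability",
    "Reliability",
    "Data privacy",
    "MLOps",
    "Procurement speed",
]


def recommend(evidence: Dict[str, bool], max_gaps: int = 2) -> Tuple[str, List[str]]:
    """Return a recommendation plus the rows where evidence is missing.

    Any row absent from `evidence` is treated as a gap (an assumption:
    no evidence recorded means no evidence).
    """
    gaps = [area for area in AREAS if not evidence.get(area, False)]
    decision = "service first" if len(gaps) > max_gaps else "build is viable"
    return decision, gaps
```

Returning the list of gaps alongside the decision keeps the conversation on what is missing, not only on the verdict.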

Step 2: Pros and cons of custom versus managed solutions

Teams often frame this as build vs buy AI. That is a useful lens, but keep it grounded in operations, not just license cost.

Where do custom frameworks shine?

- You need non-standard control loops or domain-specific planners.

- You must run on strict internal networks with bespoke compliance.

- You want to tune every stage of the stack.

- You own a platform team that treats agents as long-term core software.

Where do managed services shine?

- Faster path from design to production.

- Built-in governance and audit.

- Operational toil handled by the provider.

- Better integrations with AI platforms you already use.

Detailed comparison

| Dimension | Custom framework | Managed service |
| --- | --- | --- |
| Time to first production | Months for infra, safety, and ops | Weeks with prebuilt runtimes |
| Operating effort | High. You maintain agents, evaluators, routing, cost controls | Lower. Vendor manages runtime, scaling, and updates |
| Compliance | You tailor controls but carry the burden | Vendor supplies controls and attestations |
| Flexibility | Maximum. Anything is possible if you build it | High within the product’s model and APIs |
| Vendor risk | None, you own it | Must vet SLAs and exit paths |
| Total cost over 12 months | Looks lower early, rises with toil | Predictable spend tied to usage and seats |

A key point: modern services are built as cloud-native AI stacks. That means autoscaling, isolation, observability, and cost controls are part of the base. Recreating that correctly is non-trivial.

Also consider your broader tooling. If your company has standardized on specific AI platforms, a service that integrates cleanly with those tools reduces friction across teams.

Cost thinking that does not fool you

Spreadsheet math can hide the real price of “free” open source or internal code.

- People time: Architect, MLOps, SRE, security, and product support.

- Change tax: Models, APIs, and policies shift often. You will refit code.

- Ops surface: Incidents, quota issues, and vendor changes.

- Governance: Reviews, audits, and approvals slow launches.

A simple proxy helps:

Year-1 TCO = (build hours × blended rate) + (incident hours × on-call rate) + cloud/runtime spend + audit/compliance effort

If Year-1 TCO is within 20 percent of a service subscription with the same scope, prefer the service. Your team’s focus is the tie-breaker.
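The proxy is easy to put into code so that the inputs get debated instead of the arithmetic. The sketch below makes two assumptions: audit/compliance effort is expressed as a cost in the same currency as the other terms, and "within 20 percent" is read as "the build is not at least 20 percent cheaper than the subscription" (a build that costs more than the subscription also points to the service). All names and figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BuildEstimate:
    build_hours: float
    blended_rate: float      # cost per engineering hour
    incident_hours: float
    on_call_rate: float      # cost per on-call hour
    runtime_spend: float     # cloud/runtime spend for the year
    compliance_cost: float   # audit/compliance effort, expressed as cost

    def year_one_tco(self) -> float:
        """Year-1 TCO as defined by the proxy formula in the text."""
        return (
            self.build_hours * self.blended_rate
            + self.incident_hours * self.on_call_rate
            + self.runtime_spend
            + self.compliance_cost
        )


def prefer_service(tco: float, subscription: float, tolerance: float = 0.20) -> bool:
    """True unless the build is more than `tolerance` cheaper than the subscription."""
    return tco >= subscription * (1 - tolerance)


# Hypothetical figures, for illustration only.
estimate = BuildEstimate(
    build_hours=2000, blended_rate=90,
    incident_hours=150, on_call_rate=120,
    runtime_spend=40_000, compliance_cost=25_000,
)
decision = prefer_service(estimate.year_one_tco(), subscription=300_000)
```

With these made-up inputs the build comes to 263,000 against a 300,000 subscription. That is only about 12 percent cheaper, so the rule still points to the service.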

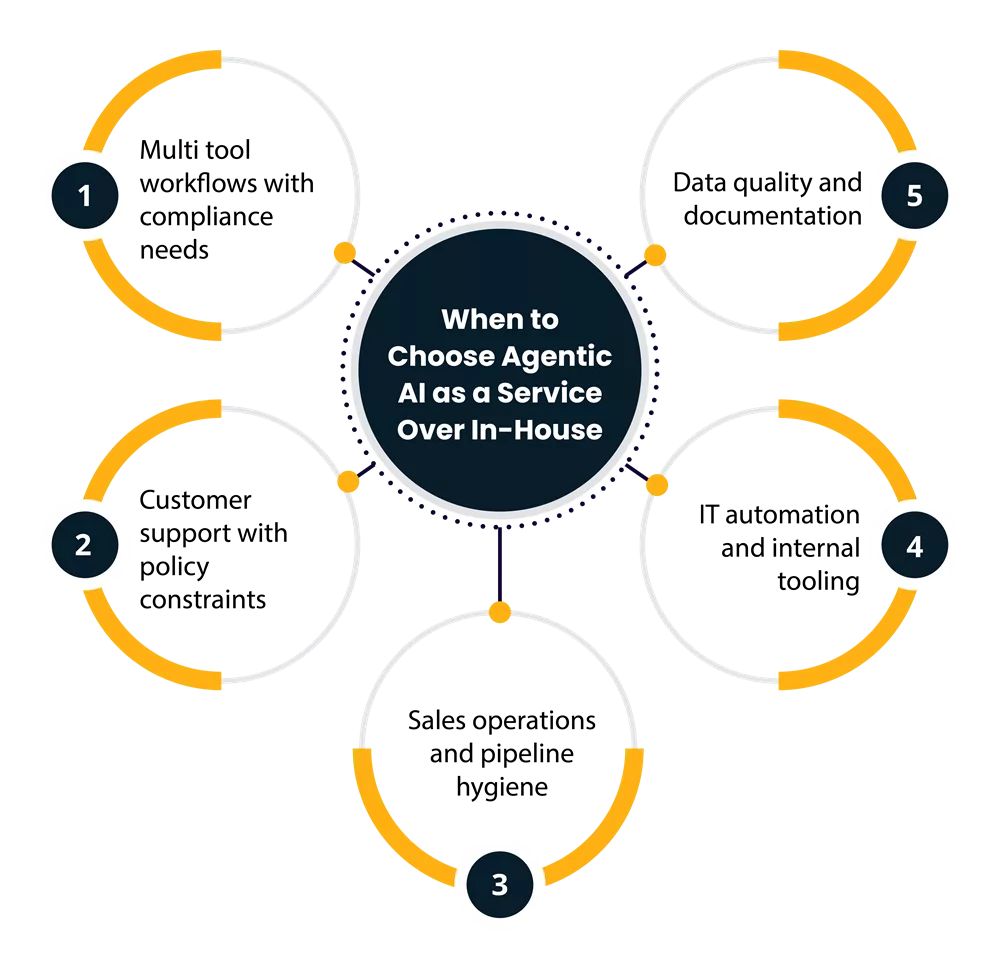

Step 3: Use cases best suited for a service model

Below are patterns where Agentic AI as a Service tends to win early. Each case includes why the service model helps and what to watch.

1) Multi-tool workflows with compliance needs

Think onboarding, claims handling, KYC flows, or order corrections.

- Why service helps: Orchestration, retries, and audit logs are built in.

- Watch for: Field-level masking and regional processing.

2) Customer support with policy constraints

Agents that draft replies, issue refunds, or schedule follow-ups.

- Why service helps: Guardrails, approval steps, and human-in-the-loop review.

- Watch for: Integration with CRM, ticketing, and identity.

3) Sales operations and pipeline hygiene

Enrichment, meeting prep, note cleanup, and next steps.

- Why service helps: Connectors to calendars, email, and CRMs.

- Watch for: Quiet cost drift from poorly scoped tasks.

4) IT automation and internal tooling

Runbooks, diagnostics, access requests, and environment checks.

- Why service helps: Secure tool calling and role-aware actions.

- Watch for: Least privilege and audit trails.

5) Data quality and documentation

Glossaries, lineage checks, doc generation, and schema diffs.

- Why service helps: Repeatable agents with scheduled runs and alerts.

- Watch for: Version control for prompts and templates.

These patterns benefit from shared building blocks. You need fast iteration and reliable controls more than niche algorithms. That lines up with Agentic AI as a Service.

A practical decision guide

Use this short rubric during solution design. Score each item 0 to 2, where higher points favor building, then add them up.

| Question | 0 points | 1 point | 2 points |
| --- | --- | --- | --- |
| How unique is the agent logic | Common pattern | Some domain nuance | Novel control loops |
| Compliance strictness | Standard industry controls | Extra reporting | Heavy regulatory |
| Integration breadth | 1 to 2 internal tools | 3 to 5 systems | 6 plus systems |
| Time pressure | Month | Quarter | No deadline |
| Ops capacity | Thin | Partial | Strong SRE and MLOps |

Guidance:

- Score 0 to 4: Go with the service.

- Score 5 to 7: Start with the service, revisit build later.

- Score 8 to 10: Build if this is core IP and you have a runway.

This is build vs buy AI without hand-waving. It ties to operations and risk, not just license cost.
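For teams that want to record rubric results consistently, the scoring and guidance bands above map directly to a small helper. The question keys and the function name `guidance` are illustrative. The bands (0 to 4, 5 to 7, 8 to 10) come from the guidance list.

```python
from typing import Dict, Tuple

# One key per rubric question (hypothetical identifiers).
QUESTIONS = (
    "agent_logic_uniqueness",
    "compliance_strictness",
    "integration_breadth",
    "time_pressure",
    "ops_capacity",
)


def guidance(scores: Dict[str, int]) -> Tuple[int, str]:
    """Validate the five 0-2 scores, total them, and return the matching guidance."""
    missing = [q for q in QUESTIONS if q not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    for question in QUESTIONS:
        if scores[question] not in (0, 1, 2):
            raise ValueError(f"{question} must be 0, 1, or 2")

    total = sum(scores[q] for q in QUESTIONS)
    if total <= 4:
        return total, "Go with the service."
    if total <= 7:
        return total, "Start with the service, revisit build later."
    return total, "Build if this is core IP and you have a runway."
```

Rejecting incomplete or out-of-range scores up front keeps a half-filled rubric from quietly producing a "service" verdict.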

Architecture notes that save rework

Even if you start with a provider, design clean seams. It keeps options open later.

- Boundary around tools: Keep business APIs behind a thin service. Agents call that boundary. You can swap the agent runtime without rewriting business logic.

- Prompt and policy versioning: Store versions in your own repo or registry. Include references in every run.

- Data zones: Define which data can leave your VPC and which cannot. Many services offer private links and KMS integration for this.

- Metrics that matter: Task success rate, intervention rate, cost per successful task, and mean time to resolve. Wire these first.

- Model agility: Choose providers that support multiple models or your own endpoints. This avoids lock-in later.
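The first two seams in the list above can be sketched together: a thin boundary in front of business APIs, and version references attached to every run. This is one possible shape, not a prescribed design. `OrderTools`, `RunContext`, `InMemoryOrderTools`, and `handle_correction` are hypothetical names, and the order-correction domain is only an example.

```python
from dataclasses import dataclass
from typing import Dict, Protocol


class OrderTools(Protocol):
    """The boundary agents call. Business logic lives behind it."""

    def correct_order(self, order_id: str, new_quantity: int) -> str: ...


@dataclass(frozen=True)
class RunContext:
    """Version references stored in your own repo or registry."""

    prompt_version: str
    policy_version: str


class InMemoryOrderTools:
    """Stand-in implementation. A real one would call your internal API."""

    def __init__(self) -> None:
        self.orders: Dict[str, int] = {"A-1": 3}

    def correct_order(self, order_id: str, new_quantity: int) -> str:
        if order_id not in self.orders:
            return "not_found"
        self.orders[order_id] = new_quantity
        return "updated"


def handle_correction(
    tools: OrderTools, ctx: RunContext, order_id: str, new_quantity: int
) -> Dict[str, str]:
    """What any agent runtime would invoke. The record carries version references."""
    status = tools.correct_order(order_id, new_quantity)
    return {
        "status": status,
        "prompt_version": ctx.prompt_version,
        "policy_version": ctx.policy_version,
    }
```

Because the runtime only sees `OrderTools`, swapping a managed service for an internal framework means rewiring the caller, not the business logic.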

This is where cloud-native AI thinking helps. Treat agents like microservices. Isolate, observe, and roll forward safely.
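The four metrics named above can be computed from a plain list of run records before any dashboard exists. The sketch below assumes each run records whether it succeeded, whether a human intervened, its cost, and its time to resolve. It also assumes cost per successful task means total spend (including failed runs) divided by successes. `TaskRun` and `summarize` are illustrative names.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TaskRun:
    succeeded: bool
    human_intervened: bool
    cost: float                # spend for this run, in your billing currency
    minutes_to_resolve: float


def summarize(runs: List[TaskRun]) -> Dict[str, float]:
    """Compute the four headline metrics for a batch of runs."""
    if not runs:
        raise ValueError("need at least one run")
    total = len(runs)
    successes = sum(1 for r in runs if r.succeeded)
    total_cost = sum(r.cost for r in runs)
    return {
        "task_success_rate": successes / total,
        "intervention_rate": sum(1 for r in runs if r.human_intervened) / total,
        # Failed runs still cost money, so they stay in the numerator.
        "cost_per_successful_task": total_cost / successes if successes else float("inf"),
        "mean_minutes_to_resolve": sum(r.minutes_to_resolve for r in runs) / total,
    }
```

Dividing total spend by successes, not by all runs, is what makes quiet cost drift visible: retries and failures raise the number even when the per-run cost looks flat.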

What to demand from a provider

If you shortlist vendors of Agentic AI as a Service, validate the following:

- Clear run traces with tool calls, inputs, and outputs

- Policy engine for allow and deny with templated rules

- Role and environment separation for dev, staging, and prod

- Canary deployments, rollbacks, and traffic shaping

- Strong data isolation and customer-managed keys

- Native connectors to your AI platforms

- Evals tied to tasks, not just prompts

- Pricing that maps to your unit of value

Ask for a short pilot with success metrics. Keep it concrete.
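During a pilot it helps to know what behavior you expect from an allow and deny policy engine before you read the vendor's documentation. The sketch below is not any vendor's API. It is a toy model with glob-style rule templates, where deny overrides allow and anything unmatched is denied. `Rule`, `is_allowed`, and the role and tool names are hypothetical.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import List


@dataclass(frozen=True)
class Rule:
    effect: str  # "allow" or "deny"
    role: str    # glob pattern, e.g. "support-*"
    tool: str    # glob pattern, e.g. "crm.*"


def is_allowed(rules: List[Rule], role: str, tool: str) -> bool:
    """Deny wins over allow. No matching rule means deny."""
    matched = [
        rule.effect
        for rule in rules
        if fnmatchcase(role, rule.role) and fnmatchcase(tool, rule.tool)
    ]
    if "deny" in matched:
        return False
    return "allow" in matched


# Example rule set for a support agent (illustrative only).
rules = [
    Rule("allow", "support-*", "crm.*"),
    Rule("deny", "*", "crm.delete_*"),
]
```

A handful of cases like these (an allowed call, an explicitly denied call, and an unmatched role) make a quick acceptance test for whichever engine the provider ships.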

Common failure modes and how to avoid them

- Vague goals: Define the task, the success signal, and the escalation path.

- One giant agent: Start small. Compose several agents with clear handoffs.

- No human in the loop: Add checkpoints where harm or cost is possible.

- Ignoring cost: Track cost per successful task from day one.

- Static prompts: Schedule evaluations and update prompts on a cadence.

Most of these failures are process issues. A good service helps by making the right way the easy way.

A brief table you can share with leadership

| Statement | What it means | Decision hint |
| --- | --- | --- |
| “We need results this quarter.” | Delivery speed is critical | Prefer service |
| “Audit will review this monthly.” | Strong governance required | Prefer service |
| “This is our core product.” | Deep control is strategic | Consider custom |
| “We have a small platform team.” | Limited ops capacity | Prefer service |
| “We must run in region.” | Data residency is strict | Service that supports regional isolation, or custom if not available |

Bringing it together

Use a service when the job is common, regulated, or time-bound. Build when the agent logic is your edge and your platform team is ready for the load. The middle path is common. Start with Agentic AI as a Service for quick, safe wins. Stabilize operations and learn. If the agent becomes strategic IP, migrate critical pieces to your own framework on your timeline.

Two final reminders:

- Keep your interfaces clean so you can move between providers and internal code with less friction.

- Treat agents as living systems. Measure, review, and improve on a fixed cadence.

Teams that follow this path avoid long detours and cut risk without stalling progress. That is the real benefit of making build vs buy AI decisions deliberately. When your context points to a managed stack, pick a provider that fits your governance and integration needs. When your context points to custom, invest in observability and safety first.

Agentic AI as a Service is not a shortcut. It is a practical way to shift your attention to the work that moves the needle. If you keep score with the rubrics above, your choice will be clear more often than not.