I watched a “fully automated” process fall apart because one vendor changed a single field name. The bots did exactly what they were told. The business still missed its deadline.

That is the real reason automation ROI conversations are changing as enterprises combine automation with AI analytics to build decision-aware workflows. Rule-based automation did not fail because teams lacked effort. It failed because enterprises live in exceptions, shifting data, and cross-team handoffs. Agentic systems approach that mess differently, and that changes how leaders should measure return.

The limits of rule-based automation ROI

Traditional automation ROI math is usually neat:

- Hours saved per week

- Headcount avoided

- Faster turnaround time

- Fewer errors

And yet, many “successful” RPA and workflow programs hit the same ceiling. Not because scripts are bad, but because enterprises are not deterministic systems.

Where does rule-based automation break in real programs?

1) Exceptions are the norm, not the edge case.

Invoices come in with missing PO numbers. Customer emails include attachments with unclear intent. A field is blank because the upstream system was down. Rule engines either stop or route to humans.

2) Work is distributed across systems and teams.

A single “simple” outcome can require CRM, ERP, ticketing, identity, approvals, legal language, and audit trails. The bot becomes glue code. Glue code becomes brittle.

3) ROI gets measured on the wrong unit.

Most programs measure “task minutes saved.” Enterprises feel value in “case resolution” and “fewer escalations,” which are multi-step outcomes.

4) Maintenance becomes the hidden tax.

When automation relies on fragile UI selectors, hardcoded mappings, and fixed paths, maintenance eats the gains. Leaders see flat ROI after year one, then question the whole strategy.
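To see how the maintenance tax flattens ROI, here is a small sketch of the arithmetic. The inputs are hypothetical parameters, and the assumption that maintenance effort compounds at a fixed rate each year is illustrative, not a measured pattern.

```python
def yearly_net_value(
    hours_saved_per_week: float,
    hourly_rate: float,
    maintenance_hours_year_one: float,
    maintenance_growth: float,
    years: int,
) -> list[float]:
    """Net value per year: gross savings minus maintenance cost.

    Assumes (illustratively) that maintenance hours grow by
    `maintenance_growth` each year as bots, selectors, and upstream
    dependencies accumulate.
    """
    gross = hours_saved_per_week * 52 * hourly_rate  # savings are flat every year
    results = []
    maintenance_hours = maintenance_hours_year_one
    for _ in range(years):
        results.append(gross - maintenance_hours * hourly_rate)
        maintenance_hours *= 1 + maintenance_growth  # the hidden tax compounds
    return results
```

For example, 40 hours saved per week at a hypothetical $50 loaded rate, with 500 maintenance hours in year one growing 50% a year, returns `[79000.0, 66500.0, 47750.0]` over three years. The program still pays, but the curve bends down, which is exactly the flat-ROI conversation leaders end up having.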

So the question becomes: what changes when systems can plan, ask, verify, and act across tools, powered by predictive analytics services instead of static rules?

That is where agentic AI enterprise thinking starts.

What agentic AI is (and what it is not)

Agentic AI is not “a chatbot with buttons.” It is a system that can pursue an objective through steps, using tools, memory, and checks.

In an agentic AI enterprise model, the system can:

- Interpret intent from messy inputs

- Break a goal into sub-tasks

- Choose which tool to call next

- Ask for missing info when needed

- Validate results before proceeding

- Hand off to humans with context when risk is high

You can think of it as moving from “if X then Y” to “given goal G, find a safe path to outcome.”
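The shift from "if X then Y" to "given goal G" can be sketched as a bounded loop. Everything here (the `Step` and `Outcome` types, the tool names, the `check` callback, the step budget) is hypothetical, and in a real system a model would produce the plan. The point is the shape: call a tool, check the result, and ask or escalate instead of guessing.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    tool: str   # which tool to call
    args: dict  # arguments for that tool


@dataclass
class Outcome:
    status: str  # "done", "needs_info", or "escalated"
    log: list = field(default_factory=list)


def run_goal(
    plan: list[Step],
    tools: dict[str, Callable[[dict], dict]],
    check: Callable[[Step, dict], bool],
    max_steps: int = 10,
) -> Outcome:
    outcome = Outcome(status="done")
    if len(plan) > max_steps:
        # Boundary: refuse plans that exceed the step budget.
        outcome.status = "escalated"
        outcome.log.append("plan exceeds step budget")
        return outcome
    for step in plan:
        if step.tool not in tools:
            # Boundary: tools outside the allowlist are never called.
            outcome.status = "escalated"
            outcome.log.append(f"tool not allowed: {step.tool}")
            return outcome
        result = tools[step.tool](step.args)
        outcome.log.append(f"{step.tool} -> {result}")
        if "missing" in result:
            # Ask a clarifying question rather than guessing.
            outcome.status = "needs_info"
            outcome.log.append(f"ask user for: {result['missing']}")
            return outcome
        if not check(step, result):
            # Verification failed: hand off with the log as context.
            outcome.status = "escalated"
            outcome.log.append(f"check failed after {step.tool}")
            return outcome
    return outcome
```

Every exit path leaves a log, so an escalation arrives with context instead of as a bare exception in a queue.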

A plain diagram of the shift

Rule-based automation

    Input -> Rules -> Fixed steps -> Output
               |
               -> Exception queue -> Human

Agentic approach

    Input -> Plan -> Tool calls -> Checks -> Outcome
              |          |           |
              |          |           -> Escalate with context
              |          -> Query data / trigger workflow / create ticket
              -> Ask clarifying question when needed

This is why agentic AI enterprise ROI is different. It is less about replacing keystrokes and more about reducing friction across end-to-end outcomes.

Agentic systems often use autonomous agents that operate within boundaries you define. The key is boundaries. Without them, you do not have automation; you have risk.

The new ROI levers enterprises should measure

If you keep measuring only “hours saved,” you will miss the biggest wins and the biggest costs.

Here are ROI levers that matter specifically for agentic AI enterprise programs.

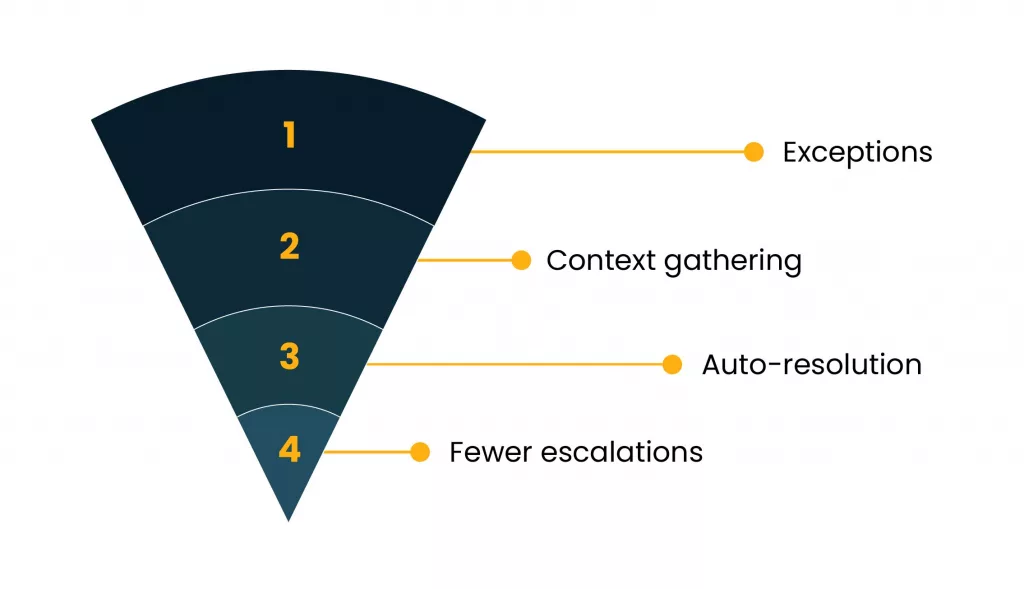

1) Exception compression

Rule-based systems push exceptions to humans. Agentic systems can often resolve exceptions by gathering missing context or taking alternate routes, while still logging what happened.

What to measure:

- Exception rate before vs after

- Time-to-resolution for exception cases

- Percent of exceptions resolved without escalation

This is where AI-driven automation shows its strongest advantage, because the value sits in messy middle paths, not the happy path.
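These three numbers are simple to compute from case records. A minimal sketch, assuming each case is a hypothetical record with `had_exception`, `escalated`, and `resolution_hours` fields; your ticketing or workflow system will name these differently.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Case:
    had_exception: bool
    escalated: bool
    resolution_hours: float


def exception_metrics(cases: list[Case]) -> dict[str, float]:
    exceptions = [c for c in cases if c.had_exception]
    if not exceptions:
        # No cases, or no exceptions: nothing to compress.
        return {"exception_rate": 0.0, "median_exception_hours": 0.0,
                "resolved_without_escalation": 0.0}
    return {
        # Share of all cases that hit an exception.
        "exception_rate": len(exceptions) / len(cases),
        # Median is less distorted by a few very slow cases than the mean.
        "median_exception_hours": median(c.resolution_hours for c in exceptions),
        # Share of exception cases closed without a human escalation.
        "resolved_without_escalation": sum(not c.escalated for c in exceptions) / len(exceptions),
    }
```

Run it on a baseline period and on the pilot period with the same case definitions; the before-and-after comparison is the exception-compression story.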

2) Cycle time across “whole cases”

Enterprises care about outcomes: close the month, ship the order, approve the claim, resolve the incident.

What to measure:

- End-to-end cycle time for a case type

- Hand-off count reduction

- Rework rate reduction

Agentic systems can reduce ping-pong between tools and teams by orchestrating enterprise workflows instead of scripting isolated tasks.
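If your workflow emits one event per step, case-level metrics fall out of the event log. A sketch, assuming hypothetical events of the form `(case_id, timestamp, team, step)`. Here a hand-off is counted whenever consecutive events for a case belong to different teams, and rework whenever a step name repeats within a case; both definitions are assumptions you may want to refine.

```python
from collections import defaultdict
from datetime import datetime

Event = tuple[str, datetime, str, str]  # (case_id, timestamp, team, step)


def case_metrics(events: list[Event]) -> dict[str, dict[str, float]]:
    by_case: dict[str, list[Event]] = defaultdict(list)
    for e in events:
        by_case[e[0]].append(e)
    out = {}
    for case_id, evs in by_case.items():
        evs.sort(key=lambda e: e[1])  # order each case's events by timestamp
        cycle_hours = (evs[-1][1] - evs[0][1]).total_seconds() / 3600
        # A hand-off is a change of owning team between consecutive events.
        handoffs = sum(1 for a, b in zip(evs, evs[1:]) if a[2] != b[2])
        # Rework is any step that appears more than once in the case.
        steps = [e[3] for e in evs]
        rework = len(steps) - len(set(steps))
        out[case_id] = {"cycle_hours": cycle_hours, "handoffs": handoffs, "rework": rework}
    return out
```

Aggregating these per case type, rather than per task, keeps the measurement on the unit enterprises actually feel.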

3) Decision quality under time pressure

Some workflows are “do it fast but do it right.” Think incident response, fraud review, vendor risk checks.

What to measure:

- Quality signals (false positives, missed alerts)

- Consistency across teams

- Audit readiness and traceability

4) Change resilience

Leaders rarely budget for the cost of keeping automation alive.

What to measure:

- Mean time to update after a process change

- Breakage rate due to upstream system changes

- Maintenance effort as a percent of delivered value

Done well, AI-driven automation reduces maintenance by relying less on brittle UI steps and more on stable APIs, events, and orchestration.
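These resilience numbers are worth recomputing every quarter. A hedged sketch; the change records, maintenance cost, and delivered value are hypothetical inputs that would come from your change log and finance model.

```python
from dataclasses import dataclass


@dataclass
class ChangeRecord:
    hours_to_update: float  # time from a process or upstream change to automation fixed
    caused_breakage: bool   # did the change break a running automation?


def resilience_metrics(changes: list[ChangeRecord], maintenance_cost: float,
                       delivered_value: float) -> dict[str, float]:
    if not changes or delivered_value <= 0:
        raise ValueError("need at least one change record and positive delivered value")
    return {
        "mean_hours_to_update": sum(c.hours_to_update for c in changes) / len(changes),
        "breakage_rate": sum(c.caused_breakage for c in changes) / len(changes),
        # Maintenance effort expressed as a percent of the value the automation delivered.
        "maintenance_pct_of_value": 100 * maintenance_cost / delivered_value,
    }
```

A rising maintenance percentage with flat delivered value is the early warning for the year-two ROI plateau.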

5) Human time returned, not humans removed

In most enterprises, the most realistic goal is not headcount reduction. It is better use of scarce expertise.

What to measure:

- Time returned to SMEs

- Reduction in “status checking” work

- Employee satisfaction in high-friction roles

This is a practical lens for agentic AI enterprise adoption, and it tends to survive procurement scrutiny better.

AWS-native agent architectures that support real ROI

If you want ROI that holds up, architecture matters, especially when the platform is built on AWS migration and modernization best practices. The pattern that works in enterprises is simple: agents handle reasoning and coordination, while workflows and guardrails handle control.

Amazon Bedrock’s agent capabilities are designed around tool use and knowledge retrieval. For example, Bedrock agents can decide whether to invoke an action group (often backed by AWS Lambda) or query a knowledge base, based on what the user asked.
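For a sense of what sits behind an action group, here is a sketch of a Lambda handler for an action group defined with an OpenAPI schema. The `/invoice-status` path, the `invoiceId` parameter, and the stubbed lookup are hypothetical. The event and response shapes are modeled on the format described in the Bedrock Agents documentation; verify the field names against the current version before relying on them.

```python
import json


def lambda_handler(event: dict, context: object) -> dict:
    """Hypothetical action-group handler for a GET /invoice-status operation."""
    # Bedrock passes OpenAPI parameters as a list of {name, type, value} entries.
    params = {p["name"]: p["value"] for p in event.get("parameters", [])}
    if event.get("apiPath") == "/invoice-status" and "invoiceId" in params:
        # Stubbed lookup; a real handler would call the ERP API here.
        status, body = 200, {"invoiceId": params["invoiceId"], "status": "awaiting_po"}
    else:
        status, body = 400, {"error": "unsupported path or missing invoiceId"}
    return {
        "messageVersion": "1.0",
        "response": {
            # Echo the routing fields so the agent can match the response.
            "actionGroup": event.get("actionGroup"),
            "apiPath": event.get("apiPath"),
            "httpMethod": event.get("httpMethod"),
            "httpStatusCode": status,
            "responseBody": {"application/json": {"body": json.dumps(body)}},
        },
    }
```

Keeping the handler thin (parse, call one API, return a structured body) makes each action easy to test and audit on its own.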

A reference architecture for enterprise use

    Channels (portal, chat, email, ticket)
        |
        v
    Bedrock Agent (reason + plan)
        |   |   |
        |   |   -> Knowledge Base (policy, SOPs, runbooks)
        |   |
        |   -> Action Groups -> Lambda -> APIs (ERP/CRM/ITSM)
        |
        -> Orchestration workflow (Step Functions) -> Events -> downstream systems
               |
               -> Logs + metrics + traces (CloudWatch)
               -> Security + access (IAM)
               -> Audit store (S3 / DynamoDB)

Why this matters: the agent should not directly “do everything.” Use it to coordinate, then push execution into workflow services that are built for reliability, retries, and visibility.

AWS Step Functions offers two workflow types, Standard and Express. Standard suits long-running, auditable processes; Express suits high-volume, short-lived event processing. The choice matters when you design cost and latency profiles for enterprise workflows.

And if you have data-heavy patterns, Step Functions has a Distributed Map option for high-concurrency processing, which is useful for bulk document checks, batch claims triage, or queued remediation tasks.
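To make the execution side concrete, here is a minimal Amazon States Language definition expressed as a Python dict: one Lambda task with retries and exponential backoff, plus a catch that routes failures to a human-review state. The function ARN, state names, and retry values are placeholders, not recommendations. The Standard or Express choice is not part of the definition; it is set when the state machine is created.

```python
import json

# Placeholder ARN; replace with your own Lambda function.
LAMBDA_ARN = "arn:aws:lambda:us-east-1:123456789012:function:post-to-erp"


def build_definition(lambda_arn: str, max_attempts: int = 3) -> dict:
    return {
        "Comment": "Agent-selected workflow: call ERP with retries, escalate on failure",
        "StartAt": "PostToErp",
        "States": {
            "PostToErp": {
                "Type": "Task",
                "Resource": "arn:aws:states:::lambda:invoke",
                "Parameters": {"FunctionName": lambda_arn, "Payload.$": "$"},
                # Retry transient failures with exponential backoff (2s, 4s, 8s...).
                "Retry": [{
                    "ErrorEquals": ["States.TaskFailed", "States.Timeout"],
                    "IntervalSeconds": 2,
                    "MaxAttempts": max_attempts,
                    "BackoffRate": 2.0,
                }],
                # Anything still failing is kept in $.error and routed to a human.
                "Catch": [{
                    "ErrorEquals": ["States.ALL"],
                    "ResultPath": "$.error",
                    "Next": "EscalateToHuman",
                }],
                "Next": "Done",
            },
            # Pass state as a stand-in; in practice this might create an ITSM ticket.
            "EscalateToHuman": {"Type": "Pass", "End": True},
            "Done": {"Type": "Succeed"},
        },
    }


definition_json = json.dumps(build_definition(LAMBDA_ARN))
```

With boto3, `definition_json` would be passed as the `definition` argument of the Step Functions `create_state_machine` call, alongside `name`, `roleArn`, and `type="STANDARD"` or `type="EXPRESS"`.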

Pattern 1: Agent + workflow handoff for controlled execution

Use the agent for interpretation and planning. Use Step Functions for execution.

- Agent reads the request and extracts intent

- Agent chooses a workflow to run

- Workflow executes tool calls with retries and checkpoints

- Agent summarizes the result back to the user

This is where AI-driven automation becomes measurable, because the workflow provides clear telemetry for time, failures, and handoffs.
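The handoff itself can be small. A sketch, assuming the agent's output has already been parsed into a hypothetical `intent` string and `fields` dict, and that intents map to an approved list of state machines (the ARNs are placeholders). The only AWS call is Step Functions `start_execution`; the client is passed in so the function can be tested with a stub.

```python
import json
import uuid
from typing import Any, Protocol


class StepFunctionsClient(Protocol):
    def start_execution(self, **kwargs: Any) -> dict: ...


# Hypothetical mapping from agent-extracted intent to an approved workflow.
WORKFLOWS = {
    "refund_investigation": "arn:aws:states:us-east-1:123456789012:stateMachine:refund-investigation",
    "access_request": "arn:aws:states:us-east-1:123456789012:stateMachine:access-request",
}


def hand_off(client: StepFunctionsClient, intent: str, fields: dict, case_id: str) -> str:
    """Start the approved workflow for `intent` and return its execution ARN."""
    arn = WORKFLOWS.get(intent)
    if arn is None:
        # The agent may only choose from approved workflows.
        raise ValueError(f"no approved workflow for intent {intent!r}")
    response = client.start_execution(
        stateMachineArn=arn,
        # A random suffix gives repeated hand-offs for the same case distinct names.
        name=f"{case_id}-{uuid.uuid4().hex[:8]}",
        input=json.dumps({"caseId": case_id, **fields}),
    )
    return response["executionArn"]
```

In production, `client` would be `boto3.client("stepfunctions")`, and the returned execution ARN is what ties the agent's summary back to the workflow's history and telemetry.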

Pattern 2: Knowledge-grounded actions

Enterprises need answers grounded in internal policy and current facts. Bedrock knowledge bases are a common approach for RAG-style grounding, and AWS also supports guardrails that can apply safety and policy checks.

Use this to reduce:

- Wrong answers that trigger wrong actions

- Inconsistent guidance across regions or teams

- Time spent searching internal documentation

That is direct ROI for enterprise workflows like IT support, HR ops, procurement, and compliance.
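One way to turn grounding into an action gate: retrieve from the knowledge base first, and only let an action proceed if relevant policy text comes back. This sketch uses the `retrieve` operation of the `bedrock-agent-runtime` client; the knowledge base ID, the score threshold, and the gating rule itself are assumptions for illustration. Relevance scores depend on your embedding and vector store setup, so any threshold needs tuning.

```python
from typing import Any, Protocol


class AgentRuntimeClient(Protocol):
    def retrieve(self, **kwargs: Any) -> dict: ...


def grounded_policy(client: AgentRuntimeClient, kb_id: str, question: str,
                    min_score: float = 0.5, top_k: int = 5) -> list[str]:
    """Return policy passages scoring at least `min_score`."""
    response = client.retrieve(
        knowledgeBaseId=kb_id,
        retrievalQuery={"text": question},
        retrievalConfiguration={"vectorSearchConfiguration": {"numberOfResults": top_k}},
    )
    return [
        r["content"]["text"]
        for r in response.get("retrievalResults", [])
        if r.get("score", 0.0) >= min_score
    ]


def may_act(passages: list[str]) -> bool:
    # Hypothetical rule: no supporting policy text, no automated action.
    return len(passages) > 0
```

With boto3, `client` would be `boto3.client("bedrock-agent-runtime")`. The design choice is deliberate: an empty retrieval downgrades the action to a human question instead of letting the agent act on its own recollection.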

Pattern 3: Runtime built for agents, not just functions

AWS has been publishing guidance around running agents in managed runtimes, including Amazon Bedrock AgentCore Runtime for hosting agents and tools with security and observability features.

Even if you do not use that specific runtime, the lesson is important: agents have different operational needs than short-lived functions. Sessions, long-running tasks, and observability become first-class requirements.

This matters when you deploy autonomous agents into production systems that handle money, customer data, or regulated workflows.

Governance that protects ROI (and prevents expensive reversals)

Every enterprise wants speed. Every enterprise also wants to avoid the incident that forces a program pause. Governance is not paperwork. It is ROI protection.

AWS publishes Well-Architected guidance related to automated compliance and guardrails. AWS also provides a Responsible AI Lens within the Well-Architected set, which is a useful anchor for policy and controls.

Here is a governance checklist that fits real delivery teams.

1) Define boundaries as code

At minimum:

- Allowed tools and APIs per agent

- Allowed data sources per workflow

- Rate limits and cost ceilings

- Required approval points for high-risk actions
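A minimal sketch of boundaries as code, assuming a hypothetical `AgentBoundary` record that is checked before every tool call. Keeping it in version control means a change to what an agent may touch goes through the same review as any other code change.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentBoundary:
    allowed_tools: frozenset[str]
    allowed_sources: frozenset[str]
    max_calls_per_minute: int
    max_cost_usd_per_case: float
    approval_required: frozenset[str] = frozenset()


def check_call(b: AgentBoundary, tool: str, source: str,
               calls_last_minute: int, case_cost_usd: float) -> str:
    """Return 'allow', 'needs_approval', or a 'deny: <reason>' string."""
    if tool not in b.allowed_tools:
        return f"deny: tool {tool} not allowed"
    if source not in b.allowed_sources:
        return f"deny: source {source} not allowed"
    if calls_last_minute >= b.max_calls_per_minute:
        return "deny: rate limit"
    if case_cost_usd >= b.max_cost_usd_per_case:
        return "deny: cost ceiling"
    if tool in b.approval_required:
        # High-risk actions pause for a human even when everything else passes.
        return "needs_approval"
    return "allow"
```

Deny checks run before the approval check, so a high-risk action that is also over budget is refused outright rather than sent to a reviewer.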

2) Use tiered autonomy

Not every workflow needs full autonomy. In most agentic AI enterprise rollouts, you start with “recommend,” then move to “execute with approval,” then “execute within limits.”

A simple model:

- Tier A: Suggest only

- Tier B: Execute low-risk actions, escalate others

- Tier C: Execute within strict policy, with audit trails
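The tiers translate into a small decision function. The `risk` label and the `within_policy` flag are hypothetical inputs, produced by whatever risk model and policy engine you already trust; the sketch only shows how the tier caps what the agent may do.

```python
from enum import Enum


class Tier(Enum):
    A = "suggest_only"
    B = "execute_low_risk"
    C = "execute_within_policy"


def decide(tier: Tier, risk: str, within_policy: bool) -> str:
    """Map a proposed action to 'suggest', 'execute', or 'escalate'.

    `risk` is 'low' or 'high' (hypothetical labels from your risk model).
    """
    if tier is Tier.A:
        # Tier A never acts; a human decides.
        return "suggest"
    if tier is Tier.B:
        return "execute" if risk == "low" else "escalate"
    # Tier C: act only inside explicit policy; everything else escalates.
    return "execute" if within_policy else "escalate"
```

Promoting a workflow from one tier to the next then becomes a one-line, reviewable change backed by the metrics gathered at the previous tier.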

3) Instrument everything you need for ROI

If you cannot measure it, ROI debates become subjective.

Track:

- Tool calls per case

- Escalation reasons

- Latency by step

- Failure types

- Human review time
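The reference architecture sends logs and metrics to CloudWatch, so one low-friction way to capture these signals is the CloudWatch Embedded Metric Format (EMF): a JSON log line with an `_aws` metadata block that CloudWatch extracts into metrics. A sketch under assumptions: the namespace, dimension, and metric names are hypothetical, and escalation reasons and failure types are kept as plain log fields (queryable in CloudWatch Logs Insights) rather than metrics.

```python
import json
import time
from typing import Optional


def emf_case_record(case_type: str, tool_calls: int, human_review_ms: float,
                    step_latency_ms: dict[str, float],
                    escalation_reason: Optional[str] = None,
                    failure_type: Optional[str] = None) -> str:
    """Build one EMF log line per completed case."""
    metrics = [{"Name": "ToolCalls", "Unit": "Count"},
               {"Name": "HumanReviewTime", "Unit": "Milliseconds"}]
    record = {
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # epoch milliseconds
            "CloudWatchMetrics": [{
                "Namespace": "AgenticWorkflows",  # hypothetical namespace
                "Dimensions": [["CaseType"]],
                "Metrics": metrics,  # same list object, extended below
            }],
        },
        "CaseType": case_type,
        "ToolCalls": tool_calls,
        "HumanReviewTime": human_review_ms,
        # Non-metric fields stay in the log line for later queries.
        "EscalationReason": escalation_reason,
        "FailureType": failure_type,
    }
    for step, ms in step_latency_ms.items():
        name = f"Latency_{step}"  # one latency metric per workflow step
        metrics.append({"Name": name, "Unit": "Milliseconds"})
        record[name] = ms
    return json.dumps(record)
```

From a Lambda function, printing the returned string to standard output is enough for CloudWatch to receive it; the metrics then feed the same dashboards used for the ROI conversation.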

4) Guardrails for content and actions

Bedrock supports associating guardrails with agents to help prevent unwanted behavior in responses. Extend that idea to actions too:

- Validate inputs before calling downstream systems

- Confirm identity and permissions

- Require policy checks for sensitive steps

This is essential when autonomous agents interact with identity, finance, or customer records.
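Those three action checks can live in one function that runs before any downstream call. This is ordinary application code, separate from Bedrock Guardrails; the request shape, permission table, sensitive-action list, and account ID format are hypothetical stand-ins for your identity provider, IAM policies, and policy engine.

```python
import re
from dataclasses import dataclass


@dataclass
class ActionRequest:
    actor: str
    action: str
    account_id: str
    amount: float


PERMISSIONS = {"agent-refunds": {"issue_refund", "lookup_invoice"}}  # hypothetical
SENSITIVE = {"issue_refund"}                                          # hypothetical
ACCOUNT_ID = re.compile(r"ACC-\d{6}")                                 # hypothetical format


def precheck(req: ActionRequest, policy_approved: bool) -> list[str]:
    """Return a list of violations; an empty list means the call may proceed."""
    problems = []
    # 1) Validate inputs before calling downstream systems.
    if not ACCOUNT_ID.fullmatch(req.account_id):
        problems.append("invalid account id")
    if req.amount < 0:
        problems.append("negative amount")
    # 2) Confirm identity and permissions.
    if req.action not in PERMISSIONS.get(req.actor, set()):
        problems.append(f"{req.actor} may not {req.action}")
    # 3) Require a policy check for sensitive steps.
    if req.action in SENSITIVE and not policy_approved:
        problems.append("policy approval required")
    return problems
```

Returning every violation instead of stopping at the first one gives the human reviewer the full picture in a single escalation.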

A practical ROI story to use with leadership

If you need a clean way to explain this shift internally, use this framing:

Rule-based automation ROI = “How many steps can we script?”

Agentic AI enterprise ROI = “How many outcomes can we complete with fewer handoffs and fewer exceptions?”

Put differently, AI-driven automation is not just faster task execution. It is better flow through the messy parts of enterprise workflows, where time and trust get burned.

How to start without betting the farm

Pick one workflow with these traits:

- High exception volume

- Clear success definition

- Safe actions available through APIs

- Pain felt by both ops and leadership

Good examples:

- Incident triage and routing

- Vendor onboarding document checks

- Customer refund investigations

- Access request processing

Then design it as “agent plans, workflow executes.” Add governance early. Measure exception compression and cycle time.

That path tends to produce credible ROI, because it aligns with how enterprises actually work.

And it sets you up for agentic AI enterprise programs that survive the first audit, the first re-org, and the first big upstream system change.