Database migration plays a central role in how organizations manage growth, system reliability, and long-term data access. It involves moving structured information from one database environment to another in a way that preserves accuracy and operational continuity.

This work is rarely limited to technical execution. It affects application behavior, reporting systems, security controls, and compliance obligations. Many organizations rely on database migration services to manage this process because data movement introduces risk that cannot be corrected easily after systems go live.

Migration decisions often carry long-term consequences, which is why enterprises increasingly combine database initiatives with broader data modernization services. A poorly structured migration can affect system performance for years. It can also introduce hidden data quality issues that appear only during operational use. These outcomes make it necessary to treat migration as a strategic initiative. Teams must understand what migration includes before selecting how it should be executed.

This need for clarity brings attention to the full scope of database migration services.

What Is the Scope of Database Migration Services?

The scope of migration extends across the entire system lifecycle. It begins before any data is moved. It continues after the new system becomes active. The data migration lifecycle includes:

- Assessment

- Preparation

- Execution

- Validation

- Post-migration support

Each phase contributes to system stability and operational reliability.

The process starts with source system analysis, a step typically led by teams providing specialized data engineering services. Teams examine table structures and field definitions. They review indexing logic and data relationships. This step identifies how data is organized and how it behaves during real workloads.
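Part of source system analysis can be automated by reading the database catalog. The sketch below uses Python's built-in `sqlite3` module as a stand-in for a real source database. It lists each table's columns, indexes, and foreign-key relationships. The `patients` and `appointments` tables are hypothetical. Other engines expose the same information through their own catalogs, for example `information_schema`.

```python
import sqlite3


def describe_schema(conn: sqlite3.Connection) -> dict:
    """Collect columns, indexes, and foreign keys for every user table."""
    schema = {}
    tables = [
        row[0]
        for row in conn.execute(
            "SELECT name FROM sqlite_master "
            "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
        )
    ]
    for table in tables:
        # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
        columns = [
            {"name": r[1], "type": r[2], "not_null": bool(r[3]), "primary_key": r[5] > 0}
            for r in conn.execute(f'PRAGMA table_info("{table}")')
        ]
        # PRAGMA index_list rows: (seq, name, unique, origin, partial)
        indexes = [r[1] for r in conn.execute(f'PRAGMA index_list("{table}")')]
        # PRAGMA foreign_key_list rows: (id, seq, table, from, to, ...)
        foreign_keys = [
            {"column": r[3], "references": f"{r[2]}.{r[4]}"}
            for r in conn.execute(f'PRAGMA foreign_key_list("{table}")')
        ]
        schema[table] = {"columns": columns, "indexes": indexes, "foreign_keys": foreign_keys}
    return schema


# Hypothetical source schema, used only for illustration.
source = sqlite3.connect(":memory:")
source.executescript("""
    CREATE TABLE patients (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE appointments (
        id INTEGER PRIMARY KEY,
        patient_id INTEGER REFERENCES patients(id),
        starts_at TEXT
    );
    CREATE INDEX idx_appointments_patient ON appointments(patient_id);
""")
schema = describe_schema(source)
print(schema["appointments"]["foreign_keys"])  # [{'column': 'patient_id', 'references': 'patients.id'}]
```

The relationships this reveals, such as appointments depending on patients, determine the order in which tables can be loaded and validated later.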

Next comes data profiling. This involves measuring record volumes and value patterns. Teams check for missing values and inconsistent formats. This information reveals how much transformation work is required.
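A minimal profiling pass might look like the following. It assumes the rows have already been extracted as Python dictionaries. For one column, it measures record volume, missing values, distinct values, and value "shapes": every digit is replaced with `9` and every letter with `A`, which exposes inconsistent formats. The `dob` column and its values are hypothetical.

```python
import re
from collections import Counter


def value_shape(value: str) -> str:
    """Reduce a value to its format: digits become 9, letters become A."""
    return re.sub(r"[A-Za-z]", "A", re.sub(r"\d", "9", value))


def profile_column(rows: list, column: str) -> dict:
    """Measure volume, missing values, distinct values, and format patterns."""
    values = [row.get(column) for row in rows]
    present = [str(v) for v in values if v not in (None, "")]
    return {
        "records": len(values),
        "missing": len(values) - len(present),
        "distinct": len(set(present)),
        "shapes": Counter(value_shape(v) for v in present),
    }


# Hypothetical extract with mixed date formats and one missing value.
rows = [
    {"id": 1, "dob": "1980-04-12"},
    {"id": 2, "dob": "04/12/1981"},
    {"id": 3, "dob": None},
    {"id": 4, "dob": "1975-11-30"},
]
print(profile_column(rows, "dob"))
```

Two different shapes in one date column (`9999-99-99` and `99/99/9999`) indicate that a transformation rule will be needed during schema mapping.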

Schema mapping follows profiling. Engineers define how each source field maps to the target system. They document transformation rules and value constraints. This step ensures compatibility between systems.
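A schema mapping can be kept as data rather than prose, so the same document drives both the transformation and its review. The sketch below is one possible structure, not a standard format. Each hypothetical `FieldMapping` records a source field, a target field, a transformation rule, and simple value constraints. The legacy column names (`PAT_NM`, `DOB`, `PHONE`) are invented for illustration.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Any, Callable, Optional


def identity(value: Any) -> Any:
    return value


def us_date_to_iso(value: str) -> str:
    """Hypothetical rule: convert MM/DD/YYYY into ISO 8601 (YYYY-MM-DD)."""
    return datetime.strptime(value, "%m/%d/%Y").date().isoformat()


@dataclass(frozen=True)
class FieldMapping:
    source: str                                 # column name in the source system
    target: str                                 # column name in the target system
    transform: Callable[[Any], Any] = identity  # documented transformation rule
    required: bool = False                      # value constraint: NOT NULL
    max_length: Optional[int] = None            # value constraint: length limit


# Hypothetical mapping for a legacy patient table.
MAPPINGS = [
    FieldMapping("PAT_NM", "full_name", str.strip, required=True, max_length=100),
    FieldMapping("DOB", "date_of_birth", us_date_to_iso, required=True),
    FieldMapping("PHONE", "phone", lambda v: v.replace("-", ""), max_length=15),
]


def apply_mappings(source_row: dict, mappings: list) -> tuple:
    """Build a target row and collect every constraint violation."""
    target, errors = {}, []
    for m in mappings:
        raw = source_row.get(m.source)
        if raw in (None, ""):
            if m.required:
                errors.append(f"{m.source}: required value missing")
            target[m.target] = None
            continue
        try:
            value = m.transform(raw)
        except ValueError as exc:
            errors.append(f"{m.source}: cannot transform {raw!r} ({exc})")
            target[m.target] = None
            continue
        if m.max_length is not None and len(value) > m.max_length:
            errors.append(f"{m.source}: longer than {m.max_length} characters")
        target[m.target] = value
    return target, errors


print(apply_mappings({"PAT_NM": "  Jane Doe ", "DOB": "04/12/1980", "PHONE": "555-0100"}, MAPPINGS))
```

The function collects errors instead of stopping at the first one. This lets a single dry run over a source extract report every row that breaks a documented rule.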

Execution covers data extraction, transformation, and loading. In cloud environments, it is often supported by managed tooling such as AWS Database Migration Service (AWS DMS). Teams first perform test runs with controlled batches and then load the full dataset into the target environment.
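The extract-transform-load loop with controlled batches can be sketched with two `sqlite3` connections standing in for the source and target systems. The table names, the cents-to-decimal conversion, and the batch sizes are illustrative assumptions. The hypothetical `max_batches` parameter is what turns a full load into a short test run.

```python
import sqlite3
from typing import Callable, Optional


def migrate_in_batches(
    source: sqlite3.Connection,
    target: sqlite3.Connection,
    transform: Callable[[tuple], tuple],
    batch_size: int = 500,
    max_batches: Optional[int] = None,
) -> int:
    """Extract, transform, and load rows batch by batch; return rows loaded."""
    cursor = source.execute("SELECT id, amount_cents, status FROM legacy_orders ORDER BY id")
    loaded, batches = 0, 0
    while max_batches is None or batches < max_batches:
        batch = cursor.fetchmany(batch_size)  # extract
        if not batch:
            break
        rows = [transform(r) for r in batch]  # transform
        with target:  # load: one transaction per batch, rolled back if it fails
            target.executemany("INSERT INTO orders (id, amount, status) VALUES (?, ?, ?)", rows)
        loaded += len(rows)
        batches += 1
    return loaded


def to_target(row: tuple) -> tuple:
    order_id, amount_cents, status = row
    return order_id, amount_cents / 100, status.lower()


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE legacy_orders (id INTEGER PRIMARY KEY, amount_cents INTEGER, status TEXT)")
source.executemany("INSERT INTO legacy_orders VALUES (?, ?, ?)",
                   [(i, i * 250, "SHIPPED") for i in range(1, 1201)])
target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, status TEXT)")

trial = migrate_in_batches(source, target, to_target, batch_size=100, max_batches=2)  # test run
target.execute("DELETE FROM orders")  # discard the trial before the full load
target.commit()
full = migrate_in_batches(source, target, to_target, batch_size=100)
print(trial, full)  # 200 1200
```

Committing per batch limits how much work a failure can undo. The trade-off is that a failed full run leaves a partially loaded target, which the validation step has to detect.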

Validation occurs after loading. Engineers reconcile record counts and check key relationships. They review error logs and verify data integrity.
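Two of the checks mentioned here, record-count reconciliation and key-relationship checks, can be expressed as plain SQL. In the sketch below, `sqlite3` again stands in for both environments. The hypothetical data deliberately loses one patient in the target, so an appointment is left pointing at a parent row that no longer exists. This is the kind of mismatch that breaks the link between appointment data and patient identifiers.

```python
import sqlite3


def reconcile_counts(source, target, table: str) -> dict:
    """Compare record counts for one table across environments."""
    query = f'SELECT COUNT(*) FROM "{table}"'
    src = source.execute(query).fetchone()[0]
    tgt = target.execute(query).fetchone()[0]
    return {"table": table, "source": src, "target": tgt, "match": src == tgt}


def find_orphans(conn, child: str, fk: str, parent: str, pk: str = "id") -> list:
    """Return ids of child rows whose foreign key points at a missing parent.

    Assumes the child table has an `id` column.
    """
    query = (
        f'SELECT c.id FROM "{child}" AS c '
        f'LEFT JOIN "{parent}" AS p ON c."{fk}" = p."{pk}" '
        f'WHERE c."{fk}" IS NOT NULL AND p."{pk}" IS NULL ORDER BY c.id'
    )
    return [row[0] for row in conn.execute(query)]


DDL = """
    CREATE TABLE patients (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE appointments (id INTEGER PRIMARY KEY, patient_id INTEGER);
"""
source, target = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
for conn in (source, target):
    conn.executescript(DDL)
    conn.executemany("INSERT INTO appointments VALUES (?, ?)", [(10, 1), (11, 2), (12, 3)])
source.executemany("INSERT INTO patients VALUES (?, ?)", [(1, "A"), (2, "B"), (3, "C")])
target.executemany("INSERT INTO patients VALUES (?, ?)", [(1, "A"), (2, "B")])  # one patient lost

print(reconcile_counts(source, target, "patients"))
print(find_orphans(target, "appointments", "patient_id", "patients"))  # [12]
```

Counts alone would flag the `patients` table, but only the orphan check shows which dependent records are affected.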

The table below shows how responsibility often aligns with each phase.

| Migration Phase | Key Activities | Primary Owner |
| --- | --- | --- |
| Assessment | Source analysis, profiling | Data architect |
| Preparation | Schema mapping, cleansing | Engineering team |
| Execution | Data extraction, loading | Operations team |
| Validation | Reconciliation checks | QA team |
| Cutover | Final sync, access switch | Project lead |

Once this scope becomes clear, the next step is deciding how migration should be executed within operational constraints.

What Are the Main Data Migration Methodologies?

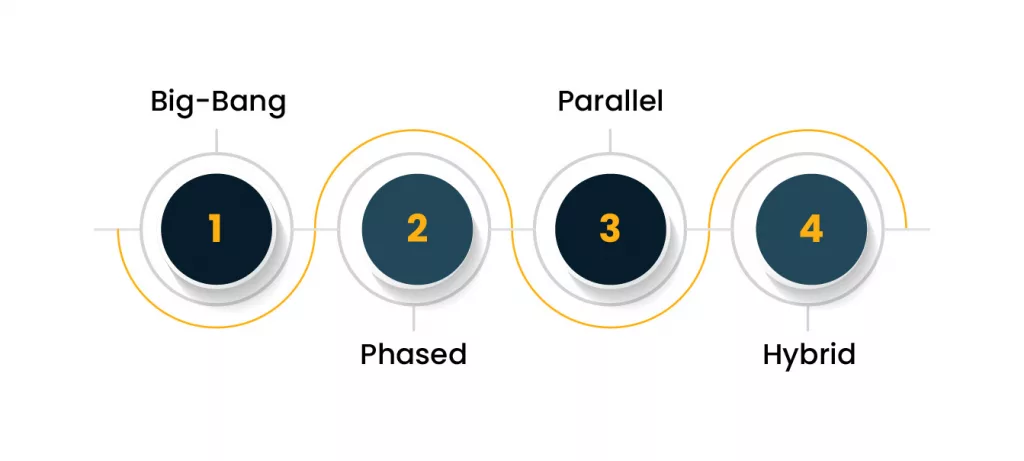

Execution depends on system size and operational tolerance. Data migration methodologies define how data moves and how systems remain active during the process.

One approach uses a big-bang method. All data moves in a single operation. This method suits small systems with low downtime impact. It requires precise coordination because rollback options are limited.

Another approach uses phased migration. Data moves in logical segments. Teams migrate one module at a time. This fits enterprise systems where departments operate independently.

Parallel migration keeps both systems active. Data synchronizes across environments. Users shift gradually to the new system. This method reduces disruption but requires constant monitoring.
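The monitoring that parallel migration requires usually includes a drift check: comparing the two environments row by row to find records that are missing, unexpected, or changed. The sketch below hashes each row in both `sqlite3` databases and compares the hashes by primary key. The `accounts` table and its values are hypothetical. The check also assumes both tables list their columns in the same order.

```python
import hashlib
import sqlite3


def row_fingerprints(conn, table: str, key: str = "id") -> dict:
    """Map each primary key to a SHA-256 hash of the full row."""
    cursor = conn.execute(f'SELECT * FROM "{table}"')
    columns = [d[0] for d in cursor.description]
    key_index = columns.index(key)
    # repr() is a simplification: different engines may return different Python
    # types for the same value, so real comparisons normalize values first.
    return {row[key_index]: hashlib.sha256(repr(row).encode()).hexdigest() for row in cursor}


def detect_drift(legacy, new, table: str, key: str = "id") -> dict:
    """Report rows that differ between the legacy and the new environment."""
    old_rows = row_fingerprints(legacy, table, key)
    new_rows = row_fingerprints(new, table, key)
    return {
        "missing_in_new": sorted(old_rows.keys() - new_rows.keys()),
        "unexpected_in_new": sorted(new_rows.keys() - old_rows.keys()),
        "changed": sorted(k for k in old_rows.keys() & new_rows.keys() if old_rows[k] != new_rows[k]),
    }


legacy, new = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
for conn in (legacy, new):
    conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER)")
legacy.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 250), (3, 75)])
new.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 300), (4, 20)])

print(detect_drift(legacy, new, "accounts"))
```

On large tables, teams typically compare hashes per key range first and only fetch individual rows for ranges that differ. This sketch compares every row directly.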

Hybrid migration combines these patterns. Historical data moves in phases. Transactional data synchronizes in parallel.

Each method introduces operational considerations.

The table below shows when each method fits.

| Methodology | When It Fits | Key Risk |
| --- | --- | --- |
| Big-bang | Small systems | Limited rollback |
| Phased | Modular systems | Dependency issues |
| Parallel | Critical systems | Data drift |
| Hybrid | Mixed workloads | Coordination effort |

Execution models shape system behavior and testing strategies. These choices lead to technical decisions around lift-and-shift and refactoring.

Should You Use Lift-and-Shift or Refactor?

This decision influences both delivery speed and long-term system quality. The options range from moving data as it is to transforming its structure along the way.

Lift-and-shift moves data without altering schemas. It preserves application logic and data structure. This approach suits systems that must move quickly. It also works when infrastructure constraints force relocation.

Refactoring modifies schemas during migration. Teams redesign tables and indexes. They adjust query logic and data relationships. This approach supports performance improvements.
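To make the difference concrete, here is a hedged sketch of one common refactoring: splitting a denormalized table into normalized ones during the move. A lift-and-shift would copy the hypothetical `flat_orders` table unchanged. The refactor stores each customer once, links orders by key, and adds an index for the new relationship. `sqlite3` stands in for both databases, and all table names are illustrative.

```python
import sqlite3


def refactor_orders(legacy: sqlite3.Connection, target: sqlite3.Connection) -> None:
    """Split a denormalized order table into customers and orders."""
    target.executescript("""
        CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT UNIQUE NOT NULL, name TEXT);
        CREATE TABLE orders (
            id INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL REFERENCES customers(id),
            total REAL
        );
        CREATE INDEX idx_orders_customer ON orders(customer_id);
    """)
    rows = legacy.execute(
        "SELECT order_id, customer_email, customer_name, total FROM flat_orders ORDER BY order_id"
    )
    with target:  # commit the refactored data as one unit, or roll it back
        for order_id, email, name, total in rows:
            # Each customer is stored once instead of being repeated on every order.
            target.execute("INSERT OR IGNORE INTO customers (email, name) VALUES (?, ?)", (email, name))
            customer_id = target.execute(
                "SELECT id FROM customers WHERE email = ?", (email,)
            ).fetchone()[0]
            target.execute(
                "INSERT INTO orders (id, customer_id, total) VALUES (?, ?, ?)",
                (order_id, customer_id, total),
            )


legacy = sqlite3.connect(":memory:")
legacy.execute(
    "CREATE TABLE flat_orders (order_id INTEGER, customer_email TEXT, customer_name TEXT, total REAL)"
)
legacy.executemany("INSERT INTO flat_orders VALUES (?, ?, ?, ?)", [
    (1, "ana@example.com", "Ana", 20.0),
    (2, "ben@example.com", "Ben", 35.5),
    (3, "ana@example.com", "Ana", 12.0),
])
target = sqlite3.connect(":memory:")
refactor_orders(legacy, target)
print(target.execute("SELECT COUNT(*) FROM customers").fetchone()[0])  # 2
```

Every query that read `flat_orders` now has to join two tables. That is why refactoring raises testing effort even when it improves the design.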

Operational factors shape this decision because teams need a clear view of how the system behaves in real conditions. This includes checking schema compatibility and reviewing how tightly applications depend on existing structures. It also involves evaluating testing capacity, understanding data complexity, and estimating the effort required to maintain the system after migration.

Once the approach is selected, the next decision concerns the target database platform.

How Do You Choose the Right Target Database?

Database selection depends on workload behavior, which is why many enterprises engage AWS cloud consulting services to evaluate performance and risk trade-offs. Relational systems handle structured transactions. Distributed systems manage large-scale ingestion.

Cloud-managed databases reduce operational effort. They handle backups and scaling. Self-managed databases offer configuration control. They require dedicated maintenance.

Decision factors include:

- Transaction frequency

- Data growth patterns

- Reporting requirements

- Integration dependencies

- Regulatory constraints

For example, an e-commerce platform that handles payments and order processing relies on strict transactional consistency. This makes relational databases a suitable choice for preserving accuracy during high-volume operations. A media analytics system that collects clickstream or user behavior data operates at a much larger scale. It works better with distributed databases that support high-volume ingestion and flexible schemas.

Database choice directly affects migration effort because some platforms offer built-in replication and synchronization tools. Other platforms require custom transformation pipelines and manual validation logic. These technical differences influence both implementation cost and the level of operational risk involved in the migration process.

What Are the Cost and Risk Trade-Offs?

Migration cost includes engineering time and testing resources. It also includes downtime impact and post-deployment support. The preparation phase, the second stage of the data migration lifecycle, often reveals expenses that were not part of initial planning, such as cleansing and transformation work uncovered during schema mapping.

Risks involve data loss and service disruption. They also involve performance degradation and compliance exposure. Each risk introduces operational cost.

The table below shows how risk translates into financial impact.

| Risk Area | Cost Impact | Mitigation Action |
| --- | --- | --- |
| Data loss | Recovery effort | Backup validation |
| Downtime | Revenue loss | Parallel testing |
| Performance issues | User complaints | Load testing |
| Compliance gaps | Audit penalties | Logging controls |

A regional healthcare provider migrated electronic patient records from an on-premises system to a cloud-based clinical platform used by outpatient clinics. During the final cutover, validation scripts failed to reconcile appointment data with patient identifiers. This caused several clinics to lose access to active records for most of the morning. As a result, treatment schedules had to be rebuilt manually, and staff reverted to paper notes for ongoing consultations.

Although the technical issue was fixed later that day, the operational impact continued. Clinical teams reported delays in care delivery, and internal incident reports were raised. The compliance team was then required to submit an audit explanation to the regional health authority. The system recovered, but confidence in the migration process declined across both technical and medical staff.

Understanding these risks helps teams avoid reactive decisions, as highlighted in practical guidance on choosing the best AWS database migration strategy. This leads to structured decision frameworks.

How Should Organizations Make Migration Decisions?

Decisions should rely on operational reality. Data migration methodologies provide execution patterns. They do not replace contextual judgment.

A practical checklist includes:

- Data volume stability

- System criticality

- Compliance exposure

- Internal expertise

- Downtime tolerance

For example, a payroll system that processes salaries for thousands of employees cannot tolerate extended downtime because even short interruptions can delay payments and trigger compliance issues. In this situation, teams usually choose phased migration so critical functions remain available while data moves in controlled stages.

In contrast, a marketing analytics platform that supports internal reporting dashboards carries lower operational risk because temporary outages mainly affect insight availability rather than core business operations. In this case, teams often accept short service interruptions in exchange for faster execution.
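One way to make a checklist like this repeatable is to encode it as explicit rules that a team can review and adjust. The sketch below is hypothetical. Its thresholds (for example, "four hours or more of tolerable downtime") are illustrative assumptions, not industry standards. It reproduces the two examples above: payroll leads to a phased migration and the analytics platform leads to big-bang. It also flags when external expertise may be needed.

```python
from dataclasses import dataclass


@dataclass
class MigrationProfile:
    max_downtime_hours: float   # downtime tolerance
    business_critical: bool     # system criticality
    regulated: bool             # compliance exposure
    volume_stable: bool         # data volume stability during the migration window
    in_house_expertise: bool    # internal expertise


def suggest_methodology(p: MigrationProfile) -> str:
    """Hypothetical rules of thumb; the thresholds are illustrative only."""
    if not p.business_critical and p.max_downtime_hours >= 4:
        return "big-bang"   # short outages acceptable: favour speed
    if p.max_downtime_hours == 0:
        return "parallel"   # no outage window: run both systems side by side
    if not p.volume_stable:
        return "hybrid"     # move history in phases, sync live transactions in parallel
    return "phased"         # critical, but brief scheduled windows are possible


def needs_external_support(p: MigrationProfile) -> bool:
    """Flag regulated migrations that the internal team lacks experience with."""
    return p.regulated and not p.in_house_expertise


payroll = MigrationProfile(0.5, True, True, True, True)
analytics = MigrationProfile(8, False, False, True, True)
print(suggest_methodology(payroll), suggest_methodology(analytics))  # phased big-bang
```

The value of writing rules down is less in the output than in the review: disagreements about thresholds surface before execution starts, not during cutover.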

Decision frameworks help align these technical choices with real business risk and operational impact. They also help determine when external expertise is needed to manage complexity or reduce exposure during critical transitions.

Where Database Migration Services Add Real Value

This is where database migration services make a practical difference, not through tools, but through execution discipline and risk control.

They typically contribute in the following areas:

- Pre-migration audits: review source schemas, detect orphan records, and surface data quality gaps before transformation begins.

- Validation frameworks: reconcile record counts, verify key relationships, and flag silent data corruption across environments.

- Controlled cutover planning: define rollback procedures, manage synchronization windows, and coordinate access freeze periods.

- Post-migration monitoring: track system performance and identify anomalies that only appear under production workloads.

- Compliance evidence preservation: maintain audit logs, transformation records, and access control history for regulatory review.

- Independent governance validation: provide objective verification so internal teams can confirm outcomes without relying on implementation staff.

- Migration documentation: capture schema models, transformation rules, and dependency structures for future system changes.

- Operational risk visibility: offer clear progress tracking, structured status reporting, and early risk detection.
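The controlled-cutover item in this list can be illustrated as a gate. The final sync runs inside a transaction, validation checks run against the uncommitted result, and the change is kept only if every check passes. This is a minimal sketch using `sqlite3`. The `cutover` function, the `CutoverAborted` exception, and the `accounts` check are hypothetical. In a real cutover, the access switch would be a separate, coordinated step and not a returned flag.

```python
import sqlite3
from typing import Callable, Dict


class CutoverAborted(Exception):
    pass


def cutover(target: sqlite3.Connection,
            final_sync: Callable[[sqlite3.Connection], object],
            checks: Dict[str, Callable[[sqlite3.Connection], bool]]) -> dict:
    """Apply the final sync and keep it only if every validation check passes."""
    try:
        with target:  # commits on success, rolls back if anything raises
            final_sync(target)
            failures = [name for name, check in checks.items() if not check(target)]
            if failures:
                raise CutoverAborted(f"validation failed: {', '.join(failures)}")
    except CutoverAborted as exc:
        return {"switched": False, "reason": str(exc)}  # rollback path
    return {"switched": True, "reason": None}


target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER)")
target.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 250)])
target.commit()

checks = {
    "no_negative_balances": lambda c: c.execute(
        "SELECT COUNT(*) FROM accounts WHERE balance < 0").fetchone()[0] == 0,
}
bad = cutover(target, lambda c: c.execute("INSERT INTO accounts VALUES (3, -50)"), checks)
good = cutover(target, lambda c: c.execute("INSERT INTO accounts VALUES (3, 50)"), checks)
print(bad, good)
```

Because the failed attempt is rolled back, the second attempt starts from the same state as the first. This property is what makes a rollback procedure safe to rehearse.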

Final Thoughts: Choosing the Right Approach Is About Long-Term System Health

Database migration shapes how systems function long after the data has moved. The choices made during migration influence data structure, system performance, and how easily teams can maintain or extend the platform. These effects usually appear gradually, through reporting issues, slow queries, or growing operational complexity.

A reliable migration follows a disciplined path. It begins with accurate assessment, continues through structured execution, and ends with detailed validation. Each stage reduces uncertainty and helps prevent hidden issues from entering production systems.

The right approach treats migration as part of system design rather than a standalone activity. It focuses on data consistency, operational continuity, and future adaptability. When migration is handled with this mindset, systems remain stable, teams trust their data, and technical changes become easier to manage over time.