“Data doesn’t wait. Neither do customers, regulators, or systems. The ability to respond as things happen is what separates fragile automation from enterprise-ready intelligence.”

For years, businesses have invested in automation that follows scripts. It worked, but only until reality shifted. Then the system broke, humans jumped in, and the promise of efficiency collapsed.

What companies need today is different: reliable AI agents that can take in live inputs, reason with context, and still deliver consistent outcomes—something modern hyperautomation frameworks increasingly support.

In this post, I’ll walk through three pillars that make this possible: understanding real-time adaptability, building strong data ingestion and response frameworks, and ensuring reliability through rigorous testing and monitoring.

Real-Time Adaptability: What It Really Means

Adaptability is one of those words that gets tossed around, but in practice it comes down to three things: noticing, interpreting, and acting. For an enterprise, it’s not just about reacting quickly, but reacting responsibly.

Think about a few scenarios:

- A logistics agent reroutes shipments within minutes when a highway is shut down.

- A finance agent spots a new type of fraudulent transaction and responds before losses spread.

- A customer support agent adjusts tone and suggestions as it detects frustration rising in a client’s messages.

What ties these together is real-time data processing. The agent must pull signals from different systems (structured ERP data, social media feeds, IoT devices) and make sense of them without delay, supported by strong enterprise integration workflows.

But there’s a catch. Acting fast is useless if the action isn’t grounded in context. Adaptive agents can’t just take raw signals at face value. They need to cross-check inputs with business rules, compliance requirements, and historical trends—reinforced by disciplined cloud engineering practices. Otherwise, you risk chaos: rerouted shipments that ignore cost rules, fraud flags that shut down good customers, or customer responses that sound robotic and off-brand.

So, real-time adaptability isn’t about speed alone. It’s about speed with judgment.
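To make "speed with judgment" concrete, here is a minimal sketch of the logistics scenario: the agent picks the fastest reroute, but only among options that respect a cost rule, and escalates when nothing qualifies. The `Route` type, the `choose_route` function, the route names, and the cost ceiling are all hypothetical, made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    name: str
    eta_hours: float
    cost: float


def choose_route(candidates: list[Route], max_cost: float) -> Route | None:
    """Return the fastest route that respects the cost rule, or None to escalate."""
    allowed = [r for r in candidates if r.cost <= max_cost]
    if not allowed:
        return None  # no compliant option: hand the decision to a human
    return min(allowed, key=lambda r: r.eta_hours)


# Illustrative options after a highway closure (all values are assumptions).
candidates = [
    Route("air-freight", eta_hours=6, cost=9000),
    Route("detour-north", eta_hours=14, cost=2100),
    Route("detour-south", eta_hours=11, cost=2600),
]

best = choose_route(candidates, max_cost=3000)
print(best.name if best else "escalate to dispatcher")
```

The fastest option overall (air freight) is rejected because it breaks the cost rule, which is exactly the kind of check a purely speed-driven agent would skip.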

Data Ingestion and Response Frameworks

For adaptability to work, the plumbing has to be right. No matter how advanced the model, if the data pipelines are weak, the whole system fails—making a solid cloud data strategy essential. I’ve seen this firsthand in enterprises where agents were trained well but starved by slow or inconsistent feeds.

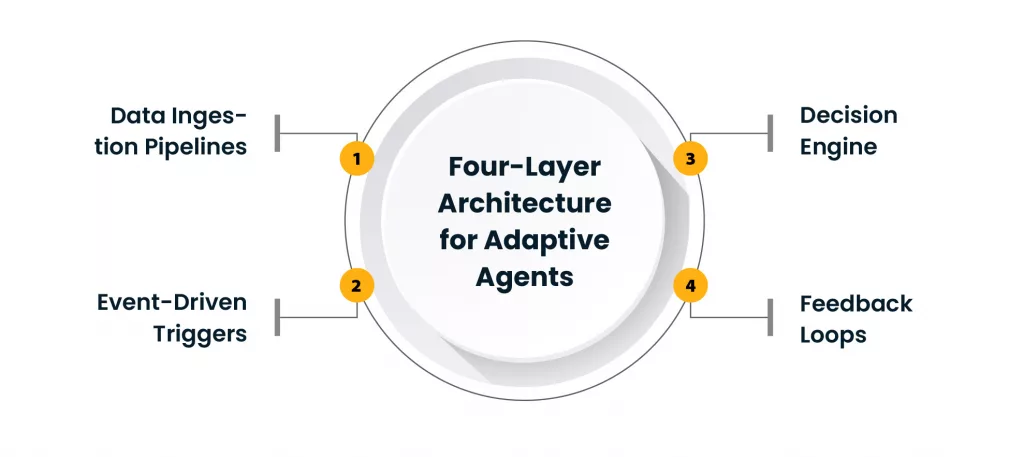

Four Layers That Matter

- Data Ingestion Pipelines

  - Collect from APIs, devices, logs, and external feeds.

  - Standardize formats to avoid errors downstream.

  - Clean the data on the fly so bad inputs don’t corrupt decisions.

- Event-Driven Triggers

  - Agents respond when something happens instead of polling every few minutes.

  - This lowers latency and reduces wasted compute cycles.

- Decision Engines

  - Where AI models meet business logic.

  - Predictions alone are risky; enterprises need guardrails to enforce policies.

  - Think of it as the difference between an intern suggesting actions and a manager approving them.

- Feedback Loops

  - After each action, the outcomes are fed back into the system.

  - Over time, this is how enterprise AI learns from its own history.
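As a rough illustration of the ingestion layer, the sketch below standardizes records from two feeds that use different field names and drops anything that fails basic checks. The field names, the `normalize` function, and the plausible temperature range are assumptions for the example, not a real schema.

```python
from __future__ import annotations

from datetime import datetime, timezone


def normalize(raw: dict) -> dict | None:
    """Map a raw record onto one schema; return None if it cannot be trusted."""
    # Two hypothetical feeds name the same fields differently.
    sensor = raw.get("sensor_id") or raw.get("deviceId")
    value = raw.get("temp_c", raw.get("temperature"))
    ts = raw.get("ts") or raw.get("timestamp")
    if sensor is None or value is None or ts is None:
        return None
    try:
        value = float(value)
        when = datetime.fromtimestamp(float(ts), tz=timezone.utc)
    except (TypeError, ValueError, OverflowError, OSError):
        return None  # unparseable reading or timestamp
    if not -90.0 <= value <= 60.0:  # assumed plausible range in Celsius
        return None
    return {
        "sensor_id": str(sensor),
        "temp_c": value,
        "observed_at": when.isoformat(),
    }


raw_feed = [
    {"sensor_id": "truck-7", "temp_c": "4.5", "ts": 1700000000},
    {"deviceId": "truck-9", "temperature": 5.1, "timestamp": "1700000060"},
    {"sensor_id": "truck-7", "temp_c": "n/a", "ts": 1700000120},   # unparseable
    {"deviceId": "truck-9", "temperature": 480, "timestamp": 1700000180},  # implausible
]

clean = [rec for rec in map(normalize, raw_feed) if rec is not None]
print(f"kept {len(clean)} of {len(raw_feed)} records")
```

Rejecting bad records at this stage, rather than inside the decision logic, keeps every downstream layer working against one predictable shape.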

Visualizing the Flow

Picture a circle. The outside ring is raw data coming in. Inside that ring, pipelines clean and normalize it. At the core, a decision engine balances predictions with enterprise rules. From there, actions flow out—and then loop back in as feedback.

This layered approach ensures reliable AI agents aren’t just adaptive but anchored in the operational reality of the business.
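Here is one way the flow could look in code, as a small in-process sketch: an event triggers the decision engine, a policy cap overrides the model's suggestion, and the outcome is recorded as feedback. The `EventBus` class, the topic names, the stand-in `model_suggest_discount` function, and the 15% cap are all hypothetical; a production system would typically use a message broker rather than an in-memory bus.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Tiny in-memory publish/subscribe bus (illustrative only)."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._handlers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        for handler in self._handlers[topic]:
            handler(event)


MAX_DISCOUNT = 0.15  # assumed business rule: never approve more than 15%
feedback_log: list[dict] = []
bus = EventBus()


def model_suggest_discount(event: dict) -> float:
    # Stand-in for a real model: more complaints, bigger suggested discount.
    return min(0.05 * event["complaints"], 0.5)


def decide(event: dict) -> None:
    """Decision engine: the model suggests, the policy approves."""
    suggested = model_suggest_discount(event)
    approved = min(suggested, MAX_DISCOUNT)
    bus.publish(
        "action.taken",
        {"customer": event["customer"], "suggested": suggested, "approved": approved},
    )


def record_feedback(action: dict) -> None:
    # Feedback loop: keep outcomes so they can inform later tuning.
    feedback_log.append(action)


bus.subscribe("customer.complaint", decide)
bus.subscribe("action.taken", record_feedback)

# The agent reacts when the event arrives; nothing polls on a timer.
bus.publish("customer.complaint", {"customer": "c-42", "complaints": 4})
print(feedback_log)
```

Logging both the suggested and the approved value is a deliberate choice: the gap between them shows how often the guardrail is doing the model's job for it.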

Building Reliability Through Testing and Monitoring

Adaptability is impressive on paper, but without reliability it’s dangerous. Enterprises can’t afford agents that surprise them in production. Testing and monitoring are how trust is built.

Testing Before Deployment

- Unit Testing for Models: Check how the model behaves on expected inputs. Make sure it doesn’t produce wild outputs from minor changes.

- Integration Testing: See how the agent interacts with CRMs, ERPs, or homegrown systems. Small mismatches here can bring down entire workflows.

- Scenario Testing: Push the system with data spikes, outages, or compliance edge cases. It’s better to see failure in a controlled environment than during peak business hours.

- A/B Comparisons: Run the agent side by side with human decisions. This highlights not just accuracy gaps but also differences in reasoning.
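The "no wild outputs from minor changes" check can be written as an ordinary unit test. The sketch below perturbs one input by up to 1% and asserts that the score barely moves. The `risk_score` function is a toy stand-in for a real model, and the noise level and tolerance are assumptions you would tune for your own case.

```python
import math
import random


def risk_score(amount: float, txns_last_hour: int) -> float:
    """Toy stand-in for a fraud model: a logistic score between 0 and 1."""
    z = 0.002 * amount + 0.4 * txns_last_hour - 3.0
    return 1.0 / (1.0 + math.exp(-z))


def max_shift_under_noise(
    amount: float,
    txns_last_hour: int,
    rel_noise: float = 0.01,
    trials: int = 200,
    seed: int = 0,
) -> float:
    """Largest score change seen when the amount is jittered by +/- rel_noise."""
    rng = random.Random(seed)  # fixed seed keeps the test repeatable
    base = risk_score(amount, txns_last_hour)
    worst = 0.0
    for _ in range(trials):
        jitter = 1.0 + rng.uniform(-rel_noise, rel_noise)
        shifted = risk_score(amount * jitter, txns_last_hour)
        worst = max(worst, abs(shifted - base))
    return worst


def test_score_is_stable_under_small_changes() -> None:
    # A 1% change in amount should not move the score by more than 0.05.
    assert max_shift_under_noise(amount=1200.0, txns_last_hour=3) < 0.05


test_score_is_stable_under_small_changes()
print("stability check passed")
```

The same pattern extends to scenario testing: swap the small jitter for a replayed data spike or an outage fixture and assert on the behavior you expect.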

Monitoring After Deployment

Testing is a start, not an endpoint. Once live, agents need constant oversight.

- Performance Metrics

  - Latency, throughput, and accuracy are watched continuously.

  - If predictions drift, alerts fire.

- Behavior Monitoring

  - Spot when an agent makes choices that don’t fit business rules.

  - Example: approving discounts higher than allowed or routing calls incorrectly.

- Human Dashboards

  - Give domain experts visibility.

  - Make it easy for them to step in when something feels off.
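A minimal sketch of two of these checks: a rolling accuracy monitor that fires when recent performance drops below a floor, and a rule check that flags discounts above the allowed limit. The class and function names, the window size, the 90% floor, and the 15% discount limit are assumptions for illustration.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks accuracy over the most recent `window` outcomes."""

    def __init__(self, window: int = 50, floor: float = 0.9) -> None:
        self.results = deque(maxlen=window)
        self.floor = floor

    def record(self, correct: bool) -> bool:
        """Record one outcome; return True if an alert should fire."""
        self.results.append(correct)
        window_full = len(self.results) == self.results.maxlen
        accuracy = sum(self.results) / len(self.results)
        # Only alert once the window is full, to avoid noisy early readings.
        return window_full and accuracy < self.floor


def violates_discount_policy(action: dict, max_discount: float = 0.15) -> bool:
    """Behavior check: flag discounts above the allowed limit."""
    return action.get("type") == "discount" and action.get("value", 0.0) > max_discount


monitor = AccuracyMonitor(window=10, floor=0.9)
outcomes = [True] * 10 + [False] * 3  # a good run, then predictions start to drift
alerts = [monitor.record(outcome) for outcome in outcomes]
print("first alert at outcome index:", alerts.index(True))

print(violates_discount_policy({"type": "discount", "value": 0.25}))
```

In practice the return values would feed an alerting channel or a dashboard, so a domain expert sees the problem and can step in.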

Industry Examples

- Healthcare: A triage agent must never miss critical symptoms. Continuous monitoring ensures reliability.

- Finance: Fraud agents need to adapt quickly to new schemes while avoiding false positives. Testing and monitoring keep the balance.

- Retail: Recommendation systems must handle sudden demand shifts but avoid suggesting products already sold out.

Each case shows why adaptability must be paired with dependability. Without both, enterprise adoption stalls.

Why Reliability and Adaptability Go Hand in Hand

I often hear enterprises debate whether to prioritize adaptability or reliability. The answer is simple: you can’t pick one.

- Adaptability without reliability is chaos: fast responses that can’t be trusted.

- Reliability without adaptability is stagnation: stable outputs that quickly become irrelevant.

The strength of adaptive agents lies in balancing both. This balance doesn’t happen by chance. It comes from strong data frameworks, relentless testing, and disciplined monitoring.

Closing Thoughts

Building reliable AI agents is not just about chasing the latest AI trend. It’s about solving very real enterprise problems where change is constant and mistakes are costly.

The formula isn’t complicated, but it requires commitment:

- Solid ingestion and processing pipelines.

- Event-driven architectures to cut latency.

- Testing that mimics real-world messiness.

- Monitoring that never switches off.

Enterprises that get this right won’t just automate; they’ll create systems that act with context, adjust to reality, and stay dependable under pressure. That’s the standard modern enterprise AI should meet.